Today we’re going to create an automatic spam email detector capable of classifying emails as spam using Python and the scikit-learn library.

If you’ve ever used an email service like Gmail, Hotmail, or Yahoo, you know that it automatically marks some of your emails as spam, and most of the time those emails really are spam. How does your email client know that a certain email contains spam content? It uses an automatic spam email detector, and today we’re going to build one ourselves.

Spam email detectors are computer programs that detect whether or not an email is spam based on a variety of features such as the email sender, email content and the keywords used in the email. Years ago, spam email detectors used a rule-based approach based on keyword spotting. However, with the availability of annotated data, the focus of research has shifted toward machine learning-based spam email detectors.

In this tutorial, you’ll create a very simple machine learning-based spam email detector using Python’s scikit-learn library. We’re going to be using quite a few supplemental libraries as well, so make sure you have them installed by running the following commands from your terminal:

pip install pandas
pip install numpy
pip install matplotlib
pip install seaborn
pip install nltk
pip install scikit-learn

Importing Libraries and Dataset

Let’s start by importing the required libraries and the dataset. The following script imports the required libraries.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Comment this line out if not using a Jupyter notebook
%matplotlib inline

The dataset for this article can be downloaded freely in CSV format from this Kaggle link. Download the CSV file and save it somewhere on your computer. Then, import the CSV file containing the dataset into your application using a script like this:

dataset = pd.read_csv(r"C:\Datasets\email_dataset.csv")

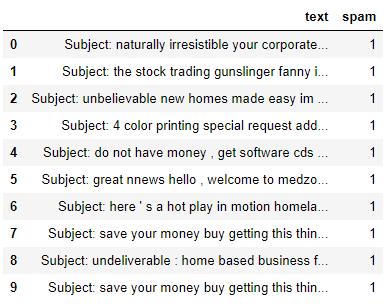

dataset.head(10)

Output:

The output shows that the dataset has two columns: text and spam. The text column contains the email text while the spam column contains information regarding whether an email is a spam email or not. We’re going to use this to train our machine learning algorithm.

Visualizing the Dataset

For the sake of curiosity, let’s visualize our dataset. Execute the following script to display the total number of records in the dataset.

dataset.shape

Output:

(5728, 2)

The output shows that the dataset contains 5728 emails.

Let’s see the number of spam emails. Run the script below:

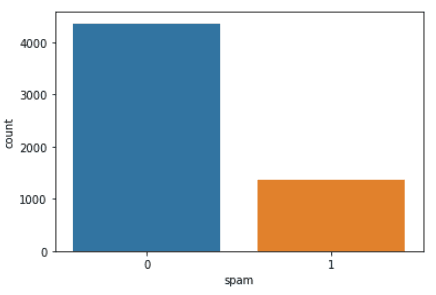

dataset.spam.value_counts()

Output:

0    4360
1    1368
Name: spam, dtype: int64

The above output shows that out of 5728 emails, 1368 emails are spam emails. Remember the entry 1 in the spam column of our dataset denotes a spam email.
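Those two counts also give us a useful reference point. As a quick worked example using the numbers from the output above, the sketch below computes the share of spam in the dataset and the accuracy a trivial detector would reach by labeling every email as ham. The variable names are ours, chosen for illustration.

```python
# Counts copied from the value_counts() output above
ham_count = 4360
spam_count = 1368
total = ham_count + spam_count

# Fraction of the dataset that is spam
spam_share = spam_count / total

# Accuracy of a "detector" that always predicts ham (label 0)
majority_baseline = ham_count / total

print(f"Total emails: {total}")
print(f"Spam share: {spam_share:.1%}")
print(f"Always-ham baseline accuracy: {majority_baseline:.1%}")
```

Any model we train later should comfortably beat that always-ham baseline to be worth using.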

Let’s plot a count plot which shows the total count of spam and ham (non-spam) emails in the form of bars.

sns.countplot(x='spam', data=dataset)

Output:

Now that we know what our data looks like, let’s do a little preprocessing.


Data Preprocessing

In the preprocessing step, you’ll clean the email text by removing special characters, stray single letters and extra whitespace. However, before you can do that, you need to divide the dataset into a feature set and a label set. In machine learning models, the feature set is used to predict the label set. The features are the descriptive attributes (the emails in this case), and the label is what you’re attempting to predict or forecast (whether or not the email is spam).
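Before splitting anything, it may help to see what that kind of cleaning does to a single message. The sketch below applies regular expressions like the ones used later in this tutorial to one made-up string. The `clean_message` helper, the final `strip()` and the sample text are our own illustrative assumptions and are not part of the dataset or the tutorial script.

```python
import re

def clean_message(message):
    """Hypothetical helper: clean one email string with simple regexes."""
    # Replace every non-word character (punctuation, symbols) with a space
    message = re.sub(r'\W', ' ', message)
    # Drop single letters that stand alone between whitespace
    message = re.sub(r'\s+[a-zA-Z]\s+', ' ', message)
    # Drop a single letter at the very start of the message
    message = re.sub(r'^[a-zA-Z]\s+', ' ', message)
    # Collapse runs of whitespace into a single space
    message = re.sub(r'\s+', ' ', message)
    return message.strip()

sample = "Subject: You WON a $1,000 prize!!!   Click here"
cleaned = clean_message(sample)
print(cleaned)  # Subject You WON 1 000 prize Click here
```

Notice that the punctuation and the lone "a" disappear, while the digits stay in place, since none of these patterns target them.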

To divide your data into features and labels, run the following script:

messages = dataset["text"].tolist()

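output_labels = dataset["spam"].values

The script below iterates through the text of our emails (the feature set), replaces special characters with spaces, drops stray single letters and collapses repeated whitespace. Note that these patterns leave digits in place.

import re

processed_messages = []

for message in messages:
    # Replace every non-word character (punctuation, symbols) with a space
    message = re.sub(r'\W', ' ', message)
    # Remove single letters surrounded by whitespace
    message = re.sub(r'\s+[a-zA-Z]\s+', ' ', message)
    # Remove a single letter at the start of the message
    message = re.sub(r'^[a-zA-Z]\s+', ' ', message)
    # Collapse runs of whitespace into one space
    message = re.sub(r'\s+', ' ', message)
    # Strip a leading "b" left over from byte-string representations
    message = re.sub(r'^b\s+', '', message)
    processed_messages.append(message)

Converting Text to Numeric Form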

Machine learning algorithms are simply statistical algorithms that work with numbers. Since you’ll be using machine learning algorithms to develop spam detectors, you need to convert email texts into numeric form. There are many ways to do this, but we’ll be using the TF-IDF (term frequency-inverse document frequency) approach since it’s simple yet effective. TF-IDF gives a higher weight to words that are frequent in one email but rare across the rest of the emails.
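To build some intuition for TF-IDF, here’s a minimal pure-Python sketch on three made-up messages. It assumes the smoothed IDF formula, ln((1 + n) / (1 + df)) + 1, followed by L2 normalization, which is what scikit-learn documents as its default behavior. The helper names (`idf`, `tfidf`) and the toy messages are ours, and real tokenization is more involved than `str.split()`.

```python
import math
from collections import Counter

# Three made-up messages standing in for emails
docs = [
    "free prize click now",
    "meeting agenda for monday",
    "free meeting room",
]
tokenized = [doc.split() for doc in docs]
n_docs = len(tokenized)

# Document frequency: in how many messages does each term appear?
df = Counter(term for tokens in tokenized for term in set(tokens))

def idf(term):
    # Smoothed inverse document frequency (assumed scikit-learn default)
    return math.log((1 + n_docs) / (1 + df[term])) + 1

def tfidf(tokens):
    # Term count times IDF, then scale the vector to unit length (L2 norm)
    counts = Counter(tokens)
    raw = {term: count * idf(term) for term, count in counts.items()}
    norm = math.sqrt(sum(w * w for w in raw.values()))
    return {term: w / norm for term, w in raw.items()}

weights = tfidf(tokenized[0])
for term, weight in sorted(weights.items()):
    print(f"{term:>6}: {weight:.3f}")
```

In the first message, "free" ends up with a lower weight than "prize" because "free" also appears in another message, which makes it less distinctive.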

Before that, you’ll need to further divide your dataset into training and test sets. We’re going to train our machine learning algorithm with the training set and then evaluate it using the test set. The test_size parameter in the following script divides the data into an 80% training set and a 20% test set.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(processed_messages, output_labels, test_size=0.2, random_state=0)

For machine learning algorithms, the training and test sets must have the same form. Hence, the same TF-IDF vectorizer must convert both into numeric form: it is fitted on the training data and then reused, unchanged, to transform the test data.
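To see why the same fitted vectorizer has to be reused, here’s a rough pure-Python sketch of the idea with plain word counts instead of TF-IDF weights. The vocabulary is learned from the training texts only, and the test text is then mapped onto those same columns. The texts and the `to_counts` helper are made up for illustration.

```python
train_texts = ["free prize now", "meeting at noon"]
test_texts = ["free lunch at noon"]

# "Fit": learn the vocabulary (the columns) from the training texts only
vocabulary = sorted({word for text in train_texts for word in text.split()})
index = {word: position for position, word in enumerate(vocabulary)}

def to_counts(text):
    # "Transform": count known words; words outside the vocabulary are ignored
    vector = [0] * len(vocabulary)
    for word in text.split():
        if word in index:
            vector[index[word]] += 1
    return vector

train_vectors = [to_counts(text) for text in train_texts]
test_vectors = [to_counts(text) for text in test_texts]

print(vocabulary)
print(test_vectors[0])
```

The test vector has exactly as many columns as the training vectors, and the unseen word "lunch" simply has no column. Fitting a second vectorizer on the test set would produce different columns, and the trained model would no longer know what each one means.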

scikit-learn has a built-in TF-IDF vectorizer, which we’ll use in this script to convert the training and test sets into numeric format.

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(max_features=2000, min_df=5, max_df=0.75, stop_words=stopwords.words('english'))

X_train = vectorizer.fit_transform(X_train).toarray()

X_test = vectorizer.transform(X_test).toarray()

That’s all the setup we need. We’re now ready to train and test our spam detector.

Training and Evaluating the Spam Detector Model

To train your spam detector model, you can use any supervised machine learning algorithm. In this tutorial, we’ll use the Random Forest classifier from the scikit-learn library.

To train a machine learning model, the training set containing features and labels is passed to the fit() method of the RandomForestClassifier class, as shown below:

from sklearn.ensemble import RandomForestClassifier

spam_classifier = RandomForestClassifier(n_estimators=200, random_state=42)

spam_classifier.fit(X_train, y_train)

Once the model is trained, you can make predictions on the test set to see how good your model is. To make predictions, pass the test set to the predict() method of the trained model, as in the following script.

y_pred = spam_classifier.predict(X_test)

The final step is to see how well your model has performed on the test set. We’ll use accuracy as the evaluation metric. To find the model’s accuracy on the test set, import the accuracy_score function from the sklearn.metrics module and pass it the actual test labels and the predicted labels, like this:

from sklearn.metrics import accuracy_score

print(accuracy_score(y_test, y_pred))

Output:

0.9825479930191972

The output shows that our spam detector correctly classified about 98.25% of the test emails as either spam or ham. Impressive, no?
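Accuracy alone can hide how a detector treats each class, especially when ham outnumbers spam as it does in this dataset. As a hedged illustration on ten made-up labels (not our real test set), the sketch below counts the four possible outcomes by hand and derives accuracy, precision and recall from them. For real use, scikit-learn’s sklearn.metrics module provides confusion_matrix, precision_score and recall_score, which can be applied to y_test and y_pred.

```python
# Made-up labels for illustration only: 1 = spam, 0 = ham
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_hat = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]

pairs = list(zip(y_true, y_hat))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # spam caught
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # ham left alone
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # ham flagged as spam
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # spam that slipped through

accuracy = (tp + tn) / len(pairs)  # share of all emails classified correctly
precision = tp / (tp + fp)         # of emails flagged as spam, share that is spam
recall = tp / (tp + fn)            # of actual spam, share that was caught

print(f"TP={tp} TN={tn} FP={fp} FN={fn}")
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
```

In this toy case the accuracy looks decent even though half of the spam gets through, which is why it’s worth checking precision and recall alongside accuracy.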

Full Python Application Code

To save you some trouble, here’s the full Python code for the scikit-learn spam detector model.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Comment this line out if not using a Jupyter notebook
%matplotlib inline

dataset = pd.read_csv(r"C:\Datasets\email_dataset.csv")

dataset.head(10)

print("Data Visualization")

dataset.shape

dataset.spam.value_counts()

sns.countplot(x='spam', data=dataset)

print("Data Preprocessing")

messages = dataset["text"].tolist()

output_labels = dataset["spam"].values

import re

processed_messages = []

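for message in messages:
    # Replace every non-word character (punctuation, symbols) with a space
    message = re.sub(r'\W', ' ', message)
    # Remove single letters surrounded by whitespace
    message = re.sub(r'\s+[a-zA-Z]\s+', ' ', message)
    # Remove a single letter at the start of the message
    message = re.sub(r'^[a-zA-Z]\s+', ' ', message)
    # Collapse runs of whitespace into one space
    message = re.sub(r'\s+', ' ', message)
    # Strip a leading "b" left over from byte-string representations
    message = re.sub(r'^b\s+', '', message)
    processed_messages.append(message)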

print("Converting text to numbers")

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(processed_messages, output_labels, test_size=0.2, random_state=0)

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(max_features=2000, min_df=5, max_df=0.75, stop_words=stopwords.words('english'))

X_train = vectorizer.fit_transform(X_train).toarray()

X_test = vectorizer.transform(X_test).toarray()

print("Training and Evaluating the Spam Classifier Model")

from sklearn.ensemble import RandomForestClassifier

spam_classifier = RandomForestClassifier(n_estimators=200, random_state=42)

spam_classifier.fit(X_train, y_train)

y_pred = spam_classifier.predict(X_test)

from sklearn.metrics import accuracy_score

print(accuracy_score(y_test, y_pred))

To practice more machine learning in Python, try our scikit-learn sentiment analysis demo or run through some of our scikit-learn machine learning examples. After that, subscribe using the form below for more great Python tutorials like this one.
