Python is one of the best programming languages you can use for web scraping, and the Python Scrapy library makes it exceptionally easy. Web scraping is the process of programmatically extracting data from websites. It’s particularly useful when you want to extract a large amount of data from a website or to automate a process that would be too tedious to perform manually.

In this tutorial, we’ll show you how to scrape names, links and movie ratings from IMDB. We’ll store our scraped data in a CSV file.

Installing Scrapy Library for Python

Before you can perform web scraping with Scrapy, you need to install the Scrapy library. To do so, execute the following command on your command prompt:

$ pip install Scrapy

Alternatively, if you are using Anaconda’s distribution for Python, execute the following command on your Anaconda prompt:

$ conda install -c conda-forge scrapy
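To confirm the installation worked, you can check which version of Scrapy is available in your environment. The snippet below is a minimal sketch that uses Python’s standard `importlib.metadata` module (Python 3.8 or later). The helper name `installed_version` is our own and is not part of Scrapy.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    scrapy_version = installed_version("scrapy")
    if scrapy_version is None:
        print("Scrapy is not installed in this environment.")
    else:
        print(f"Scrapy {scrapy_version} is installed.")
```

If the script reports that Scrapy is missing, make sure you ran the install command in the same environment you use to run Python.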

Scraping IMDB Movie Records with Python

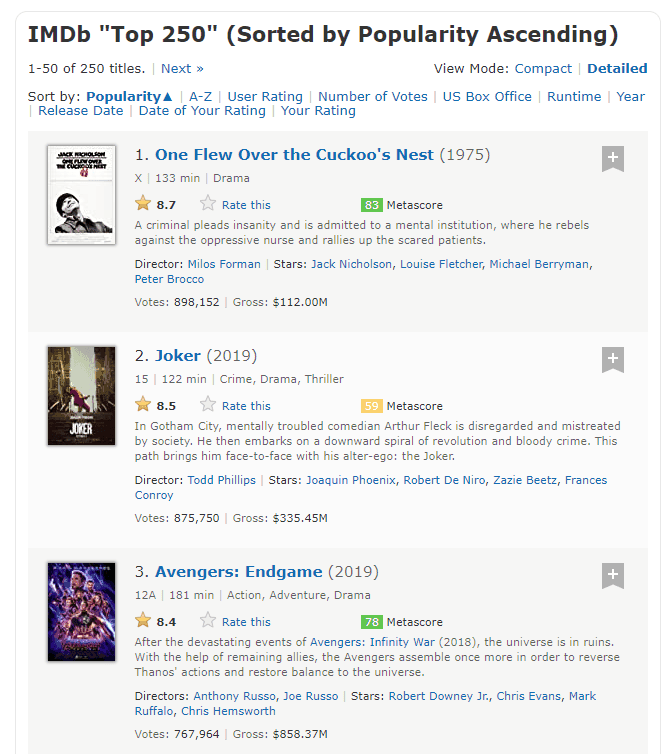

Data to be Scraped

We’re going to be scraping our data from IMDB’s list of top 250 movies.

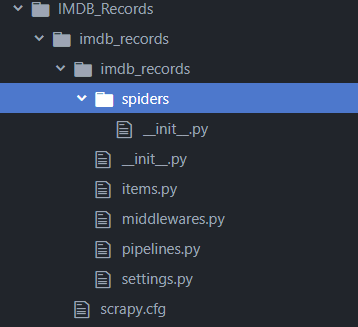

Creating Scrapy Project

To scrape data with Scrapy, you first have to create a Scrapy project. To do so, execute the following command on your command prompt inside the directory where you want to create the project. The command below creates a Scrapy project named imdb_records:

scrapy startproject imdb_records

Once you execute the above command, you’ll see a message similar to this one on your command prompt.

Output:

    New Scrapy project 'imdb_records', using template directory 'c:\programdata\anaconda3\lib\site-packages\scrapy\templates\project', created in:
        E:\IMDB_Records\imdb_records

    You can start your first spider with:
        cd imdb_records
        scrapy genspider example example.com

Inside the directory where you created your

Creating Spiders for Scraping

Once you’ve created a Scrapy project, the next step is to create a spider that will crawl the website you want to scrape. The command below creates a spider named imdb_spider:

scrapy genspider imdb_spider www.imdb.com/search/title/?groups=top_250

If your spider is successfully created, you should see the following output:

Output:

Created spider 'imdb_spider' using template 'basic' in module: imdb_records.spiders.imdb_spider

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

Scraping Movie Records

Once you create a spider, a Python file named “imdb_spider.py” will appear in your “spiders” folder. The new Python script will look like this:

    import scrapy


    class ImdbSpiderSpider(scrapy.Spider):
        name = 'imdb_spider'
        allowed_domains = ['imdb.com']
        start_urls = ["https://www.imdb.com/search/title/?groups=top_250"]

        def parse(self, response):
            pass

In the above file, the start_urls variable lists the pages to crawl, and Scrapy passes each downloaded page to the parse() method, which is where the scraping logic goes.
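The generated spider also sets allowed_domains, which tells Scrapy to stay on imdb.com and its subdomains while crawling. The function below is only a simplified illustration of that idea using the standard library. `is_allowed` is a hypothetical helper we wrote for this example, not Scrapy’s actual implementation.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Return True if the URL's host is an allowed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(
        host == domain or host.endswith("." + domain)
        for domain in allowed_domains
    )


allowed = ["imdb.com"]
print(is_allowed("https://www.imdb.com/search/title/?groups=top_250", allowed))
print(is_allowed("https://example.com/some-page", allowed))
```

The first URL is on a subdomain of imdb.com, so the check passes. The second URL is on a different site, so the check fails.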

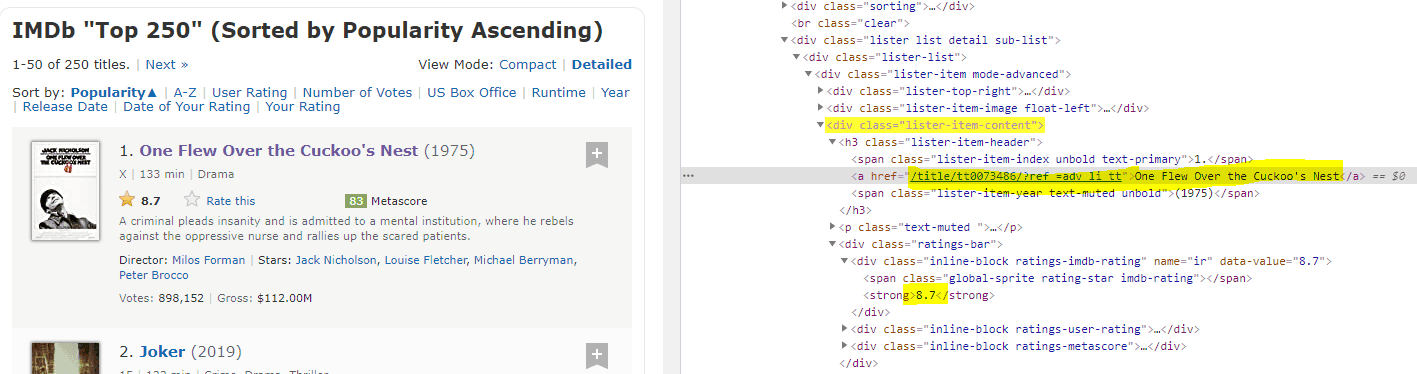

All the data in a webpage resides inside HTML tags. Scrapy basically searches HTML tags for your desired data, just like we showed you how to do in our VBA web scraping tutorial. Because Scrapy blindly crawls the HTML, you need to have a basic knowledge of HTML in order to know which HTML elements in a webpage contain your desired data.

One of the ways to familiarize yourself with the HTML elements in a website is to open the website in a browser, right click the webpage and click “Inspect”. For example, if you open the IMDB movie page we’re trying to scrape in Chrome, then “Right Click -> Inspect”, you’ll see HTML code like this:

From the HTML elements on the right, you can see that movie names are inside anchor tags, which are inside <h3> tags. These tags are further nested in a div tag with a class “lister-item-content”.

With Scrapy, there are two ways to search for data inside an HTML page: you can use either CSS selectors or XPath selectors. In this tutorial, we’ll use XPath selectors. Let’s briefly explain what each XPath selector does.

First, modify the “imdb_spider.py” file you generated earlier such that it looks like this:

    import scrapy


    class ImdbSpiderSpider(scrapy.Spider):
        name = 'imdb_spider'
        allowed_domains = ['imdb.com']
        start_urls = ["https://www.imdb.com/search/title/?groups=top_250"]

        def parse(self, response):
            # Select every <div> that wraps a single movie entry
            movies = response.xpath("//div[@class='lister-item-content']")

            for movie in movies:
                # The leading "." keeps each query relative to the current movie <div>
                movie_name = movie.xpath(".//h3//a//text()").get()
                movie_link = "https://www.imdb.com" + movie.xpath(".//h3//a//@href").get()
                movie_rating = movie.xpath(".//div[@class='ratings-bar']//strong//text()").get()

                # Each yielded dictionary becomes one row in the output file
                yield {
                    'movie name': movie_name,
                    'movie link': movie_link,
                    'movie_rating': movie_rating
                }

In the above script, response.xpath() selects all the <div> tags with the class “lister-item-content”. Next, a loop iterates over these div tags. To fetch the movie name in each iteration of the loop, the XPath query .//h3//a//text() is run against the current div tag. This strange sequence of characters tells Scrapy to look inside the h3 tag, find the anchor tag and retrieve its text, which contains the movie name. The leading dot keeps the search inside the current div tag rather than the whole page. Similarly, to fetch the link, the XPath query .//h3//a//@href is run against the div tag, and the result is appended to the site’s base URL. Finally, ratings are retrieved by running the .//div[@class='ratings-bar']//strong//text() query against the same div tag.
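The spider builds each movie link by prepending “https://www.imdb.com” to the href it finds. Because .get() returns None when a query matches nothing, that string concatenation would raise a TypeError for an entry without a link. One possible way to guard against this is sketched below with the standard library’s `urljoin`. The helper name `absolute_link` and the sample href are our own, made up for illustration.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.imdb.com"


def absolute_link(href, base_url=BASE_URL):
    """Turn a relative href into an absolute URL, or return None if href is missing."""
    if not href:
        return None
    return urljoin(base_url, href)


print(absolute_link("/title/example-1/"))  # https://www.imdb.com/title/example-1/
print(absolute_link(None))                 # None
```

Inside a spider, Scrapy’s own response.urljoin() method does a similar job using the URL of the page being parsed as the base.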

I know this sounds a little complicated, but once you’re comfortable with the patterns in the HTML layout of the webpage you’re trying to scrape, it will start to make more sense.

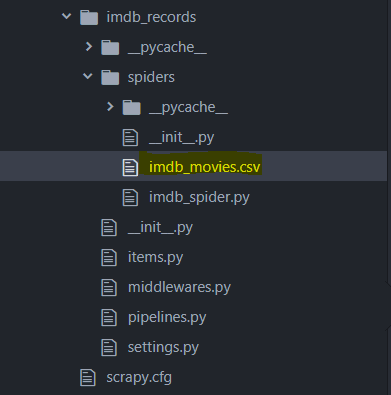

The last step is to run the spider, which calls the parse() method of the “imdb_spider.py” file, and store the scraped data in a CSV file. To do so, execute the following command.

scrapy crawl imdb_spider -o imdb_movies.csv

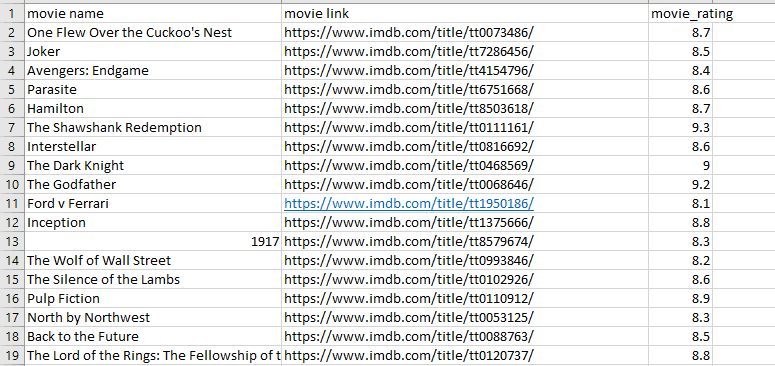

Once you execute the above command, you’ll see that a CSV file named imdb_movies.csv is created inside the “spiders” folder of the project.

If you open the CSV file in Excel or any spreadsheet software, you should see that it contains a clean list of all the names, links and movie ratings you just scraped from the website.
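You can also load the file back into Python instead of a spreadsheet. The sketch below uses the standard csv module and assumes the column names match the keys the spider yields. The helper name `load_movies` and the sample rows are made up for illustration.

```python
import csv
import io


def load_movies(csv_file):
    """Read scraped rows from an open CSV file and convert ratings to floats."""
    movies = []
    for row in csv.DictReader(csv_file):
        rating = row.get("movie_rating")
        # An empty rating cell becomes None instead of raising an error
        row["movie_rating"] = float(rating) if rating else None
        movies.append(row)
    return movies


# Made-up rows in the same column layout the spider yields
sample = io.StringIO(
    "movie name,movie link,movie_rating\n"
    "Example Movie One,https://www.imdb.com/title/example-1/,9.1\n"
    "Example Movie Two,https://www.imdb.com/title/example-2/,\n"
)

for movie in load_movies(sample):
    print(movie["movie name"], movie["movie_rating"])
```

To read the real output, open the file with `open("imdb_movies.csv", newline="", encoding="utf-8")` and pass the file object to `load_movies`.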

That’s all there is to it! As you can see, Scrapy is one of the easiest and most reliable ways to rapidly extract data from a website using Python. It’s a whole lot easier than programming it with VBA!
