These days, lots of websites offer public APIs so users can query the website in a variety of ways to receive well-structured data in return. Unfortunately, many websites do not offer these APIs or they don’t offer the exact information you need. When that happens, you can still scrape the data off the webpage by mastering a few VBA web scraping techniques.

Efficient web scraping truly requires a solid background in multiple VBA objects available for internet interaction, but in this tutorial we are going to focus on one object and one method of that object: GetElementsByTagName. Other methods may make your project easier, but GetElementsByTagName is practical enough that you can use it in almost any web scraping application.

Familiarizing yourself with how to use the VBA GetElementsByTagName method will help you with any web scraping method you need in the future.

- Setting up the Environment

- Setting up the Objects

- Getting to your Data

- Extracting info from the website

- Iterating through the HTML

- Quitting IE

- Full VBA Web Scraping Macro with GetElementsByTagName

- Troubleshooting

- Closing thoughts

Setting up the Environment

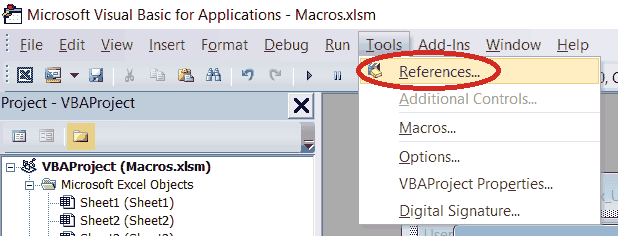

Before you can start web scraping with VBA, you need to set up the correct libraries, or References as they’re called in VBA. You will need to enable the Microsoft HTML Object Library and the Microsoft Internet Controls references before following the steps in this tutorial. These libraries can be found via Tools > References in the VBA editor:

Setting up the Objects

We’ve mentioned in other tutorials that using Intellisense is extremely helpful for learning. Recall that you only get Intellisense when you use early binding, which means you explicitly declare variable types and then set them. This is in contrast to no declaration and using late binding. Since we are learning here, we’re going to stick with early binding for this web scraping tutorial.

Dim ie As InternetExplorer

Dim webpage As HTMLDocument

Set ie = New InternetExplorerWe’ll play around with the ie.Visible = True. This is not required for scraping data from a website, but it might help you debug. I usually keep my windows visible until I know my code works, then I remove this line.

If you set your ie instance to visible, you will see a blank internet explorer window. Let’s remove the URL bar, just to show it’s manipulatable after we launch IE. Use ie.AddressBar = False to remove the URL bar. You’ll end up with a white box for the IE window. Again, this isn’t necessary but I just want to show you what it looks like before we load a website.

Getting to your Data

Next, we need to use the navigate() method of the

ie.navigate ("https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population")

Do While ie.Busy = True

LoopThere are many different versions of the Do-While loop to wait for a webpage to load. The point of it is to prevent VBA from trying to process the data before the website is fully loaded. Without this, or a similar line, you will get an error.

Like I said, there are a couple ways to force your code to loop until it’s done loading the website. This is definitely overkill but here’s the block of VBA code I usually use. Feel free to grab it for your own web scraping projects:

Do While ie.readyState = 4: DoEvents: Loop

Do Until ie.readyState = 4: DoEvents: Loop

While ie.Busy

DoEvents

WendContrary to our own recommendations in our VBA DoEvents tutorial, you’ll notice I use a ridiculous amount of DoEvents calls in the short snippet above. To each his own!

Okay, at this point, we should have an IE window loaded with the Wikipedia page on country and dependency populations. Depending on how you set up your macro, it may or may not be visible.

Make powerful macros with our free VBA Developer Kit Tutorials like this can be complicated. That’s why we created our free VBA Developer Kit and our Big Book of Excel VBA Macros to supplement this tutorial. Grab them below and you’ll be writing powerful macros in no time.

Finding what you need

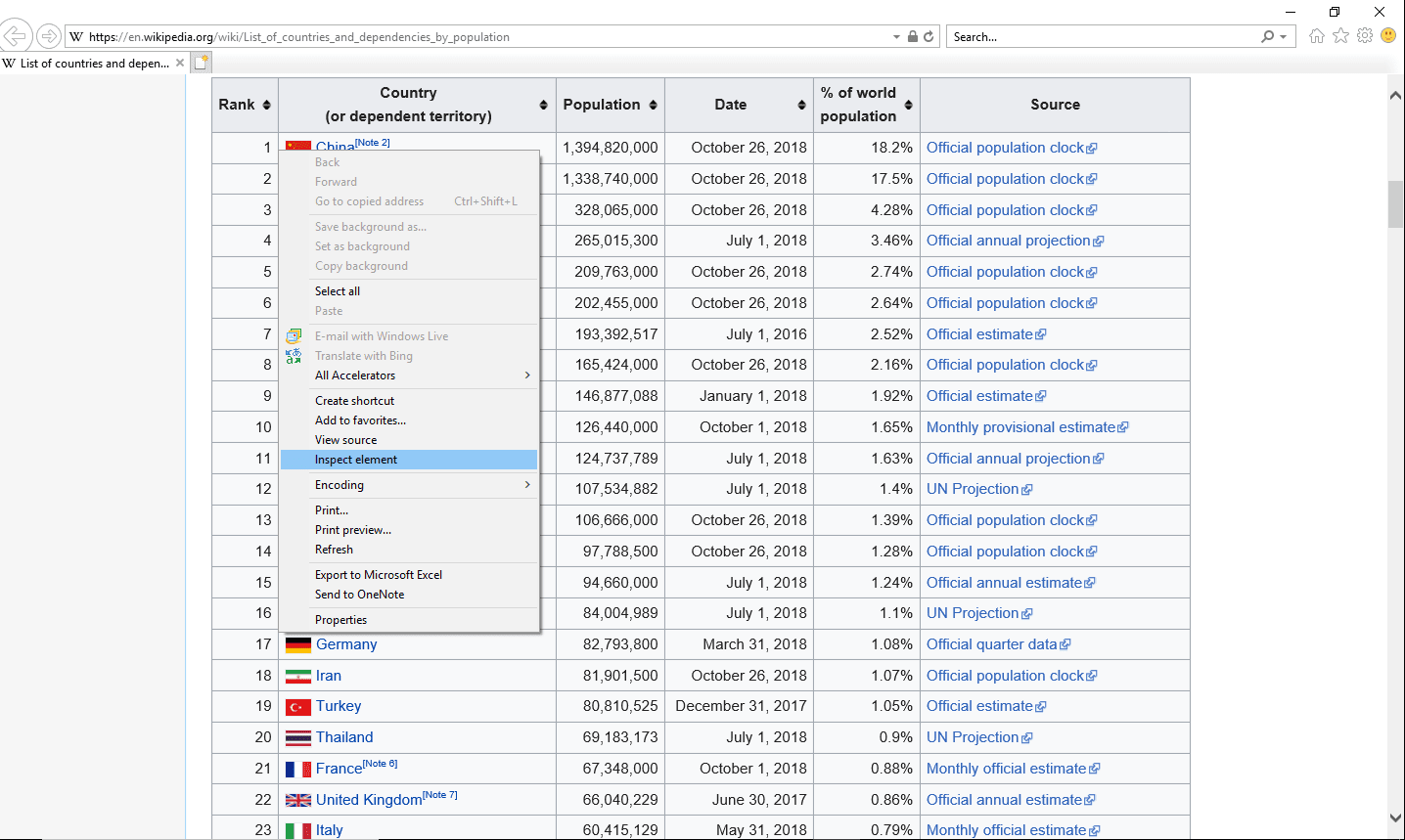

The next part of our web scraping tutorial requires a little understanding of web programming and HTML. If your IE window is visible, you can left click on the table where the number 1 appears under the Rank column.

Right-Click Menu after clicking on the Rank 1 Box.

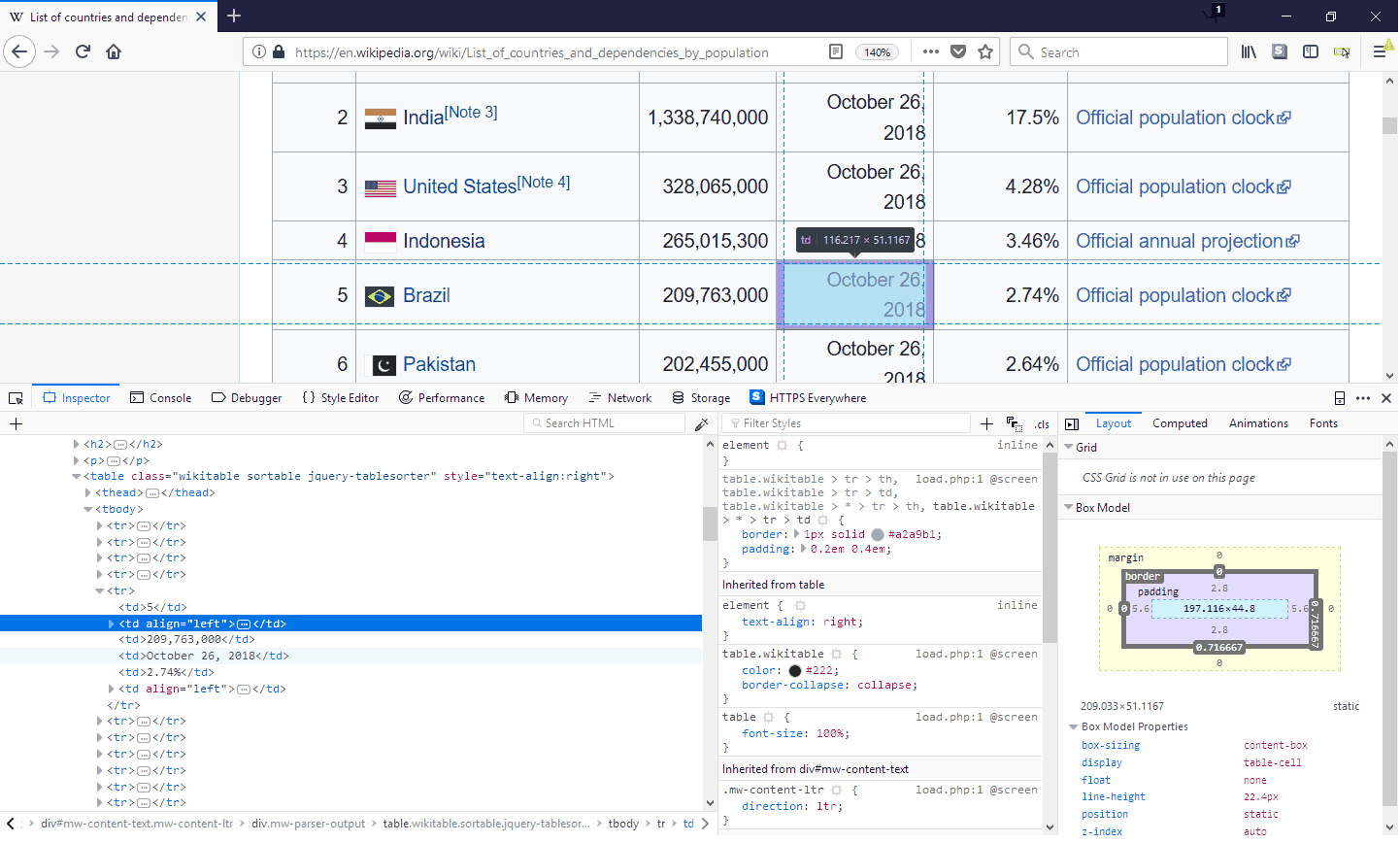

When you click Inpect Element, you’ll be greeted with the following explorer frame on the bottom of the IE window.

The Dev Tools Tab and Inspector showing our selection

The highlighted line with the blue arrow is the first cell of the table under the header, and it is the “element” we inspected. The red arrow is the “parent” object, and its tag is tr, which is what we will look for in the next section.

If your IE window isn’t visible, you can just navigate to our sample website using your favorite browser and inspect the page from there. All the major browsers have a similar function when you right click.

If you want to play around more, other browsers (like Chrome and Firefox) have dev tools that are more interactive. You can highlight different parts of the code, and a box will show up around the element on the actual website. This sort of interactivity will make web scraping a lot easier since you’ll be able to explore the HTML until you find the tag or tags you’re looking for.

In the image above, we can see the element we’ve moused over highlighted on the actual webpage. Tools like this one in Firefox and the equivalent tool in Chrome and Edge make understanding the website’s HTML structure a lot simpler. It was sometimes a pain to scrape websites with VBA before these tools were around!

Extracting info from the website

The tr tag is an HTML tag representing a new row in a table. At this point, you’re probably starting to connect the dots between the VBA getElementsByTagName method and the tag name we just found. The getElementsByTagName method actually marches through the HTML until it finds certain tags. From there, you’re able to extract the data you need.

Okay, we know we are looking for the tag

Eventually, we will iterate through an entire ocean of HTML. HTML source codes tend to be extremely long on modern websites, as JavaScript produces lots of code automatically.

Before we do that, we need to make sure we are looking at the right content on our website. Before running loops that return a bunch of garbage or errors, we need to do some research. This part can be tedious and frustrating on large pages, because several elements will have the same tag. If we have three tables on a page, all of them will have

Set webpage = ie.Document

Set table_data = webpage.getElementsByTagName("tr")The variable

To ensure we have the correct data, let’s use the Immediate Window, which will inform us of what we’re actually capturing. Add a breakpoint to your code before it ends and type ?table_data.Item(0).innertext in your Immediate Window. Doing this will show you the text of the first item in the

Oh no! It seems our text is not what we expected. As of the date of this tutorial, we get the message “It has been suggested that List of countries by population (United Nations) be merged…”, which is actually the blurb at the top of the Wikipedia article.

Your results may vary depending on the HTML structure of the webpage when you run your macro. This is one of the frustrations with web scraping and it’s why it’s useful to look at what you’re scraping before grabbing everything. Even though we only think of the populations as the table, the HTML may be structured in such a way that other elements are marked with “tr” tag before we get to our population list.

It seems the second item, .Item(1), is the table we really need. Calling this entry in our Immediate Window prints the correct table header row. We could just march through the rows starting with .Item(1) instead of .Item(0), but long-time reader YasserKhalil pointed out an even cleaner approach.

He suggested we define a collection of tables before we define our collection of

To implement his solution, we’ll save all the tables by using the same GetElementsByTagName method we used to grab our

Set webpage = ie.document

'Change the number in the line below to 0, 2, or something else if you get 424 object required.

'This means the website html has changed since this article was written.

Set mtbl = webpage.getElementsByTagName("Table")(1)

Set table_data = mtbl.getElementsByTagName("tr")Now our

The lesson learned here is that you can drill down on the websites HTML by nesting multiple VBA GetElementsByTagName statements. We first defined a collection of tables. Once we found the table we wanted, then we defined a smaller collection of rows that only contained the rows for that particular table.

Note: If you’re not sure which table you need, you would just pause your macro after the Set webpage = ie.document line and then enter something like ?webpage.getElementsByTagName("Table")( in your Immediate Window and press the return key. You would replace the ("Table")(1) was the table we wanted when we wrote this tutorial. With that said, ("Table")(0) may very well represent the table when you run this macro.

Iterating through the HTML

Now you can safely iterate over the entire

To simply get the whole row, you can iterate through each item in the collection, kind of like we did when finding the correct row in our Immediate Window. Conversely, looking at the dev tools info again, we can see that there is an inner structure to each tr group: the td tag. You can use each item’s Children property to read each cell as a separate piece of data. This is handy if you only want to scrape a subset of the data for your VBA program.

We’ll go over a couple different ways to scrape data from a table.

Using For Each

The Wikipedia data is pretty; it is well formatted, standardized, and well structured. Of course, the data on other websites might not look so good, so we’re going to use for-each loops to grab all the data we can find. We want to do this just in case some items are missing or you’re not exactly sure about your data’s shape.

The easiest way to scrape tables off the web and into Excel is by just running through each row and each cell in that row. The cells are accessed via the Children of that row.

The simplest code for this is:

For Each trow In table_data

For Each tcell In trow.Children

'add code for the text

'Debug.Print tcell.innertext

Next tcell

Next trowThe commented Debug.Print line will output every cell’s text to the Immediate Window. This is helpful if you’re still exploring the HTML to confirm you’re grabbing the correct data.

If you want each row printed to an Excel sheet while preserving structure, you could use counters, like this:

trowNum = 1

For Each trow In table_data

For Each tcell In trow.Children

tcellNum = tcellNum + 1

Cells(trowNum, tcellNum) = tcell.innerText

Next tcell

trowNum = trowNum + 1

tcellNum = 0

Next trowThis marches through every row in your innerText property to grab the unformatted text from that cell.

Hard-Coded Iterator Limits

If you know the size of your data won’t change or it is very nicely structured, you can also use the .Item and .Children properties to scrape your data. We can see our data always has 6 columns, so we could use a hard-coded VBA snippet like this to scrape the data we need:

For itemNum = 1 To 240

For childNum = 0 To 5

Cells(itemNum, childNum + 1) = table_data.Item(itemNum).Children(childNum).innerText

Next childNum

Next itemNumWe just hard-coded a maximum number of rows (240) in this example, but you can develop a way to programmatically find the last row in your macro.

This approach actually makes it a bit easier for you to control the output. Of course, if your data is of varying length or you don’t know its shape in advance (which is likely the case if you’re scraping multiple tables from many different sites), I strongly urge the use of the For Each method instead.

Quitting IE

Don’t forget to kill off IE before you finish your macro! This is especially true if your IE instance isn’t visible or you have a lot of websites to visit and you’re opening a new session each time. You can wind up with a stack of invisible IE instances stored in your system’s memory without ever realizing it.

Closing your IE window is as simple as adding ie.Quit. You can also optionally set your ie instance to Nothing so memory is fully cleared, like this:

ie.Quit

Set ie = NothingBefore we present our finished VBA web scraping code block, I want to emphasize something. If you’re code crashes with an error before you get to your ie.Quit line and your IE window isn’t set to visible, you may need to go into your Windows Task Manager to manually kill your internet explorer application. Failure to do this can eat up a bunch of memory as the windows remain active, but invisible. Forewarned is forearmed!

Full VBA Web Scraping Macro with GetElementsByTagName

Sub scrape_wikipedia_pop_data()

'Add Reference (Tools > References) to the following libraries:

' 1) Microsoft Internet Controls

' 2) Microsoft HTML Object Library

Dim ie As InternetExplorer

Dim pagePiece As Object

Dim webpage As HTMLDocument

Set ie = New InternetExplorer

'ie.Visible = True 'Optional if you want to make the window visible

ie.navigate ("https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population")

Do While ie.readyState = 4: DoEvents: Loop

Do Until ie.readyState = 4: DoEvents: Loop

While ie.Busy

DoEvents

Wend

Set webpage = ie.document

Set mtbl = webpage.getElementsByTagName("Table")(1)

Set table_data = mtbl.getElementsByTagName("tr")

For itemNum = 1 To 240

For childNum = 0 To 5

Cells(itemNum, childNum + 1) = table_data.Item(itemNum).Children(childNum).innerText

Next childNum

Next itemNum

ie.Quit

Set ie = Nothing

End SubTroubleshooting

Things don’t always go according to plan when trying to scrape data from a website using VBA. In this section, we’ll describe some of the most common errors and give you a little workaround that might help resolve the error.

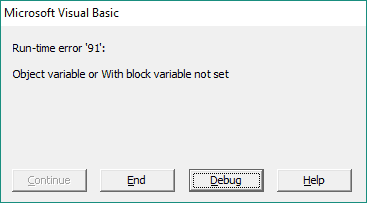

Runtime Error 91 when web scraping

If your website is really big or your computer is slow, you may find yourself with a Runtime error 91 message when trying to extract the text from your website.

This error is more common when your IE window is hidden, but making your window visible doesn’t mean you’re immune. When you get this error while web scraping, 9 out of 10 times you can click Debug, then simply click the green play button in your VBA editor (or press F5) and your macro will continue running as if nothing happened.

The workaround solution to this is to add a brief pause to your macro once an error is encountered and then continue processing. It’s weird, I know, but it’s also a pretty reliable solution. The downside is you may be burying a real error and you’d get yourself stuck in a loop.

To avoid getting stuck in a never-ending loop, I added a counter so if you find yourself with more the 5 errors, the macro pauses and lets you investigate. It prints each error number and description to the Immediate Window using the Debug.Print command.

Sub scrape_website_with_delay()

'Add Reference (Tools > References) to the following libraries:

' 1) Microsoft Internet Controls

' 2) Microsoft HTML Object Library

Dim ie As InternetExplorer

Dim pagePiece As Object

Dim webpage As HTMLDocument

Set ie = New InternetExplorer

'ie.Visible = True 'Optional if you want to make the window visible

ie.navigate ("https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population")

Do While ie.readyState = 4: DoEvents: Loop

Do Until ie.readyState = 4: DoEvents: Loop

While ie.Busy

DoEvents

Wend

Set webpage = ie.document

Set mtbl = webpage.getElementsByTagName("Table")(1)

Set table_data = mtbl.getElementsByTagName("tr")

On Error GoTo tryagain:

For itemNum = 1 To 240

For childNum = 0 To 5

Cells(itemNum, childNum + 1) = table_data.Item(itemNum).Children(childNum).innerText

Next childNum

Next itemNum

ie.Quit

Set ie = Nothing

Exit Sub

tryagain:

Application.Wait Now + TimeValue("00:00:02")

errcount = errcount + 1

Debug.Print Err.Number & Err.Description

If errcount = 5 Then

MsgBox "We've detected " & errcount & " errors and we're going to pause the program" & _

" so you can investigate.", , "Multiple errors detected"

Stop

errcount = 0

End If

Err.Clear

Resume

End SubNotice how this macro jumps to the bottom and adds a 2 second delay using the VBA Application.Wait method.

You’d be wise to narrow error handling down so it only adds the delay if the err.Number equals 91, but I didn’t do that. Because I didn’t screen the error that way, you’ll want to wait until you’re sure your application is error-free before implementing this solution.

Alternatively, you could modify the code yourself to only screen out the error you’re encountering!

My macro used to work, but now it doesn't

This is another common observation. Your macro could work perfectly fine for months and then, one day, it just stops working.

Unfortunately, that’s one of the risks of web scraping. If the format of the website changes, or even if there’s an HTML change behind-the-scenes, it could render your macro useless.

The only solution is to take the time reconfigure your macro so it properly scrapes with the new website format.

Closing thoughts

If you’re scraping data off the web, you’ll likely encounter many websites, each with slightly different HTML structures. In unpredictable cases like this, it is a good idea to pair the VBA GetElementsByTagName method with a strategy that can address HTML variability. What I mean is if you plan to use the program for the long-term, an adaptable macro approach might be the most robust since webpages change all the time. In the end, it just takes a little clever manipulation of counters to get your data into the right places on an Excel sheet.

Web scraping is extremely powerful if you need to aggregate data from different sources, but it can be just as useful for something as simple as reproducing a table from Wikipedia on your own spreadsheet or grabbing finance data based on a stock ticker. After scraping with VBA, you can do your own calculations on the data, like building charts or checking changes from previous scrapes. If other parts of your spreadsheet are already automated (like chart building or calculations) why not take the final step and automate the data acquisition (web scraping) process, as well? There’s something quite satisfying about a one-click solution for your problem.

If you haven’t already done so, please subscribe to my free wellsrPRO VBA Training Program using the form below and share this article on Twitter and Facebook.