Anthropic released the Claude 3.5 Sonnet LLM on June 20, 2024. According to Anthropic, Claude 3.5 Sonnet outperforms OpenAI GPT-4o (the model that powers ChatGPT) on several benchmarks. At the time of writing, livebench.ai, a reliable LLM leaderboard, also ranks Claude 3.5 Sonnet first on its global LLM benchmark average.

Inspired by these numbers, we ran two experiments, zero-shot text classification and zero-shot text summarization, to compare the performance of Claude 3.5 Sonnet and OpenAI GPT-4o. We’ll share our findings in this article and also show you how to use these LLMs with Python.

By the end of this article, you will know how to call the Anthropic and OpenAI APIs to perform zero-shot text classification and summarization. You will also see which model is better for these tasks.

Let’s get to it!

Importing and Installing Required Libraries

You will need to install the Anthropic and OpenAI Python libraries. In addition, we will install the rouge-score module to evaluate the text summarization performance of different LLMs.

!pip install anthropic
!pip install openai
!pip install rouge-score

The following script imports the required libraries into your Python application.

import os
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
import anthropic
from openai import OpenAI
from rouge_score import rouge_scorer

Claude 3.5 Sonnet vs GPT-4o For Text Classification

Let’s first compare Claude 3.5 Sonnet and GPT-4o for text classification.

Importing and Preprocessing the Dataset

We will use the US Consumer Finance Complaints dataset to perform zero-shot classification. The dataset consists of customer complaints about different financial products. The script below imports the dataset CSV file into a Pandas dataframe.

##Dataset Link

##https://www.kaggle.com/datasets/kaggle/us-consumer-finance-complaints

dataset = pd.read_csv(r"D:\Datasets\consumer_complaints.csv")

print(dataset.shape)

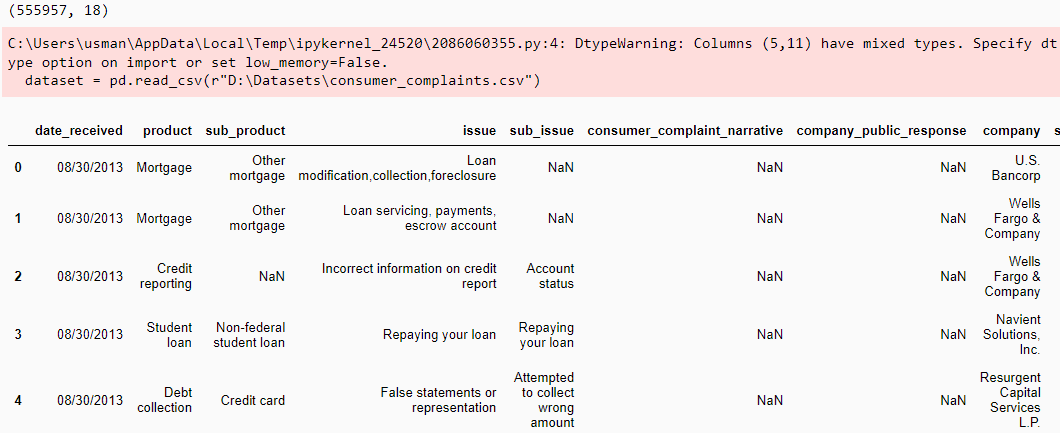

dataset.head()Output:

The dataset consists of more than 550k records. The consumer_complaint_narrative column contains complaint text, while the product column contains the corresponding text. We will use LLMs to predict the value for the product column using the consumer_complaint_narrative text as input.

Let’s print the number of complaints for each product category:

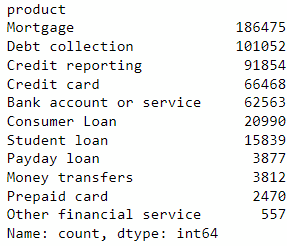

dataset["product"].value_counts()Output:

The dataset is highly imbalanced. We will preprocess it. First, we will remove all the records where the consumer_complaint_narrative column is null. Next, we will remove the product Other financial service so that we have records with actual products. We will remove all the dataset columns except product and consumer_complaint_narrative.

Finally, to balance our dataset, we will select 10 records for each product, resulting in a total of 100 records. You can select more records, but remember that you will have to pay to process each record via the Anthropic and OpenAI APIs.

The following script performs data preprocessing.

#Remove rows where consumer_complaint_narrative is null

df_filtered = dataset.dropna(subset=['consumer_complaint_narrative'])

#Remove rows with "Other financial service" in the product column

df_filtered = df_filtered[df_filtered['product'] != 'Other financial service']

#Keep only the "product" and "consumer_complaint_narrative" columns

df_filtered = df_filtered[['product', 'consumer_complaint_narrative']]

#Sample 10 rows from each remaining product category

df_sampled = df_filtered.groupby('product').apply(lambda x: x.sample(10)).reset_index(drop=True)

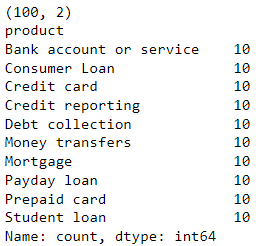

print(df_sampled.shape)

print(df_sampled["product"].value_counts())Output:
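Note that the sampling step above is not seeded, so every run draws a different set of 100 complaints and the accuracy numbers will vary from run to run. The sketch below illustrates the same "N records per category" idea with a fixed seed, using plain Python and made-up toy records rather than the real dataset. The function name sample_per_category and the toy data are our own assumptions for illustration; in pandas, passing random_state to sample() should have a similar effect.

```python
import random
from collections import defaultdict


def sample_per_category(records, per_category, seed=42):
    """Pick `per_category` texts from each category, reproducibly.

    `records` is a list of (category, text) tuples. This is a hypothetical
    helper for illustration, not part of pandas or the original script.
    """
    rng = random.Random(seed)  # fixed seed -> same sample on every run
    by_category = defaultdict(list)
    for category, text in records:
        by_category[category].append(text)

    sampled = []
    for category in sorted(by_category):  # sorted for a stable order
        for text in rng.sample(by_category[category], per_category):
            sampled.append((category, text))
    return sampled


# Toy, deliberately imbalanced stand-in data (not from the Kaggle dataset)
toy_records = [("Mortgage", f"mortgage complaint {n}") for n in range(50)]
toy_records += [("Credit card", f"card complaint {n}") for n in range(5)]

balanced = sample_per_category(toy_records, per_category=3)
print(len(balanced))  # 6 records: 3 per category
```

Calling the function twice with the same seed returns the same records, which makes it easier to compare two models on exactly the same inputs across sessions.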

We can now perform zero-shot text classification with Claude 3.5 Sonnet and GPT-4o.

Zero-Shot Text Classification with Claude 3.5 Sonnet

To call the Claude 3.5 Sonnet model, you must create a client object of the anthropic.Anthropic class and pass it your Anthropic API key. We also store the Claude 3.5 Sonnet model ID in a variable so that we can pass it with each request.

client = anthropic.Anthropic(
    api_key = os.environ.get('ANTHROPIC_API_KEY')
)

model = "claude-3-5-sonnet-20240620"

Next, we will define a script that iterates through all the records in the consumer_complaint_narrative column of our sampled dataset. For each record, we will call the messages.create() method of the Anthropic client object and ask the Claude 3.5 Sonnet model to predict the product for the complaint from the list of products. The model response is appended to the predictions list.

predictions = []
complaints_list = df_sampled["consumer_complaint_narrative"].tolist()

complaint_category_list = """
Bank account or service
Consumer Loan
Credit card
Credit reporting
Debt collection
Money transfers
Mortgage
Payday loan
Prepaid card
Student loan
"""

i = 0
while i < len(complaints_list):
    try:
        complaint = complaints_list[i]
        content = """You are a consumer complaint processing officer.
        For the following complaint text, select one of the complaint categories from the complaint category list.
        Return only the sentiment complaint category without any additional remarks or text.
        \n\n
        Complaint text: {}
        \n\n
        Complaint Category list {}""".format(complaint, complaint_category_list)

        prediction = client.messages.create(
            model=model,
            max_tokens=25,
            temperature=0.0,
            messages=[
                {"role": "user", "content": content}
            ]
        ).content[0].text

        predictions.append(prediction)
        i = i + 1
        print(i, prediction)

    except Exception as e:
        print("===================")
        print("Exception occurred:", e)

Finally, we compare the model predictions with the actual products to print the model accuracy.

accuracy = accuracy_score(df_sampled["product"], predictions)
print("Accuracy:", accuracy)

Output:

Accuracy: 0.65

The above output shows that the Claude 3.5 Sonnet model achieved 65% accuracy for zero-shot multiclass text classification.
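One caveat about the classification loop above: because i only advances after a successful call, a persistent error (for example, an invalid API key) makes the loop retry the same complaint forever. A minimal sketch of a bounded retry with exponential backoff is shown below. The helper name call_with_retries, its parameters, and the flaky_request stand-in are our own illustrative assumptions and are not part of the Anthropic or OpenAI libraries.

```python
import time


def call_with_retries(func, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func() until it succeeds or max_attempts is reached.

    Hypothetical helper: waits base_delay, 2*base_delay, 4*base_delay, ...
    seconds between attempts and re-raises the last error when giving up.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except Exception as exc:
            if attempt == max_attempts:
                raise  # give up instead of looping forever
            delay = base_delay * 2 ** (attempt - 1)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay}s")
            sleep(delay)


# Stand-in for an API call that fails twice and then succeeds
calls = {"count": 0}


def flaky_request():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "Mortgage"


result = call_with_retries(flaky_request, max_attempts=5, base_delay=0.0)
print(result, calls["count"])
```

In the real loop, func would be a small function or lambda that wraps the messages.create() call for one complaint, so a failing record eventually raises instead of blocking the whole run.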

Zero-Shot Text Classification with GPT-4o

The process for zero-shot text classification with GPT-4o is similar to the one for Claude 3.5 Sonnet.

First, create a client object of the OpenAI class and pass it your OpenAI API key. As before, we store the GPT-4o model ID in a variable.

client = OpenAI(
    api_key = os.environ.get('OPENAI_API_KEY'),
)

model = "gpt-4o"

Next, you iterate through all the consumer complaints and make predictions for the product category using the chat.completions.create() method of the OpenAI client object. Finally, you can compare the predictions with the actual categories to calculate model accuracy.

predictions = []

i = 0
while i < len(complaints_list):
    try:
        complaint = complaints_list[i]
        content = """You are a consumer complaint processing officer.
        For the following complaint text, select one of the complaint categories from the complaint category list.
        Return only the sentiment complaint category without any additional remarks or text.
        \n\n
        Complaint text: {}
        \n\n
        Complaint Category list {}""".format(complaint, complaint_category_list)

        prediction = client.chat.completions.create(
            model=model,
            temperature=0,
            max_tokens=25,
            messages=[
                {"role": "user", "content": content}
            ]
        ).choices[0].message.content

        predictions.append(prediction)
        i = i + 1
        print(i, prediction)

    except Exception as e:
        print("===================")
        print("Exception occurred:", e)

accuracy = accuracy_score(df_sampled["product"], predictions)
print("Accuracy:", accuracy)

Output:

Accuracy: 0.67

The above output shows that GPT-4o performs slightly better than Claude 3.5 Sonnet for zero-shot text classification: 67% versus 65% accuracy, a difference of two complaints out of 100.
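Both accuracy figures rely on exact string matches between the raw model response and the product label, so a response such as "Credit card." or "credit card" would count as wrong. We did not normalize the responses in our experiment, and we do not know how often this happened. The sketch below shows one way such normalization could look; the function name normalize_prediction and its matching rules are our own assumptions.

```python
CATEGORIES = [
    "Bank account or service",
    "Consumer Loan",
    "Credit card",
    "Credit reporting",
    "Debt collection",
    "Money transfers",
    "Mortgage",
    "Payday loan",
    "Prepaid card",
    "Student loan",
]


def normalize_prediction(raw_text, categories):
    """Map a raw model response onto one of the known category labels.

    Hypothetical helper: ignores case, surrounding whitespace, quotes and
    trailing periods. Unmatched responses are returned stripped but
    otherwise unchanged, so they still count as misclassifications.
    """
    cleaned = raw_text.strip().strip(".\"'").casefold()

    # 1) Exact match after cleaning
    for category in categories:
        if cleaned == category.casefold():
            return category

    # 2) Fallback: the label appears somewhere inside the response
    for category in categories:
        if category.casefold() in cleaned:
            return category

    return raw_text.strip()


print(normalize_prediction(" credit card.\n", CATEGORIES))
print(normalize_prediction("Category: Mortgage", CATEGORIES))
```

Applying such a function to every item in predictions before calling accuracy_score() would make the comparison less sensitive to formatting differences between the two models.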

Next, we will compare Claude 3.5 Sonnet and GPT-4o for zero-shot text summarization.

Claude 3.5 Sonnet vs GPT-4o For Text Summarization

The comparison process for zero-shot text summarization remains similar to zero-shot text classification. However, the dataset, prompt, and evaluation criteria will change.

Importing the Dataset

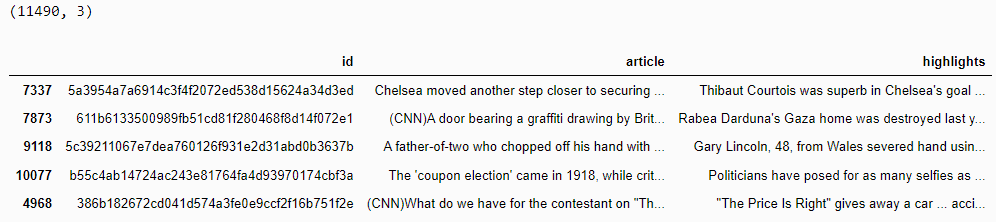

We will use the CNN Daily Mail Dataset from Kaggle, which contains news articles and corresponding human-generated highlights, or summaries.

The following script imports the dataset.

##Dataset download link

##https://www.kaggle.com/datasets/gowrishankarp/newspaper-text-summarization-cnn-dailymail

dataset = pd.read_csv(r"D:\Datasets\cnn_dailymail\test.csv")

dataset = dataset.sample(frac=1)

print(dataset.shape)

dataset.head()Output:

The article column contains the text, while the highlights column contains the corresponding highlights.

Next, we will calculate the average number of characters in all the highlights. We will use this number, rounded up, both as the length target in the prompt and as the max_tokens value when calling the Claude 3.5 Sonnet and GPT-4o models. Note that max_tokens counts tokens rather than characters, so it acts as a generous upper bound here and not as an exact length limit.

dataset['summary_length'] = dataset['highlights'].apply(len)
average_length = dataset['summary_length'].mean()
print(f"Average length of summaries: {average_length:.2f} characters")

Output:

Average length of summaries: 311.93 characters

The output shows that, on average, we have 312 characters in the highlights.
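To get a feel for the gap between characters and tokens, the sketch below converts the average highlight length into an approximate token count. It assumes the common rule of thumb of roughly four characters per token for English text; the real ratio depends on each model's tokenizer, so treat the result as a ballpark figure only. The names CHARS_PER_TOKEN and estimate_tokens are our own.

```python
import math

# Rough rule of thumb for English text; an assumption, not an exact value.
CHARS_PER_TOKEN = 4


def estimate_tokens(num_chars, chars_per_token=CHARS_PER_TOKEN):
    """Estimate how many tokens a text of num_chars characters uses."""
    return math.ceil(num_chars / chars_per_token)


average_chars = 312  # average highlight length from the output above
print(estimate_tokens(average_chars))  # 78
```

Under this assumption, a 312-character summary needs only about 78 tokens, so a max_tokens value of 320 leaves the models room to write considerably more than the reference highlights. The character limit stated in the prompt is what actually steers the summary length.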

Zero-shot Text Summarization with Claude 3.5 Sonnet

As before, we create a client object from the anthropic.Anthropic class.

client = anthropic.Anthropic(
    api_key = os.environ.get('ANTHROPIC_API_KEY')
)

model = "claude-3-5-sonnet-20240620"

Next, we will define the calculate_rouge() function, which accepts human-generated and model-generated highlights and returns the ROUGE-1, ROUGE-2, and ROUGE-L F-measure scores. These are common metrics used to evaluate LLMs on tasks such as text summarization and text translation.

def calculate_rouge(reference, candidate):
    scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)
    scores = scorer.score(reference, candidate)
    return {key: value.fmeasure for key, value in scores.items()}

Finally, we will iterate through the first 20 articles in the dataset and pass them to the Claude 3.5 Sonnet model to generate the article highlights. You can pass more articles, but, again, this costs money, so we want to be prudent.

The model-generated highlights are passed to the calculate_rouge() function together with the human-generated highlights. The corresponding ROUGE scores are stored in the results list.

results = []

i = 0
for _, row in dataset[:20].iterrows():
    article = row['article']
    highlight = row['highlights']

    i = i + 1
    print(f"Summarizing article {i}")

    prompt = f"Create highlights of the following article in 320 characters. The highlights should look like they were human created:\n\n{article}\n\nSummary:"

    generated_summary = client.messages.create(
        model=model,
        max_tokens=320,
        temperature=0.7,
        messages=[
            {"role": "user", "content": prompt}
        ]
    ).content[0].text

    rouge_scores = calculate_rouge(highlight, generated_summary)

    results.append({
        'article_id': row['id'],
        'generated_summary': generated_summary,
        'rouge1': rouge_scores['rouge1'],
        'rouge2': rouge_scores['rouge2'],
        'rougeL': rouge_scores['rougeL']
    })

To print the model performance, we convert the results list into a Pandas dataframe and print the average of the rouge1, rouge2, and rougeL columns.

results_df = pd.DataFrame(results)
average_rouge_scores = results_df[['rouge1', 'rouge2', 'rougeL']].mean()

print(f"Average ROUGE-1 Score: {average_rouge_scores['rouge1']:.4f}")
print(f"Average ROUGE-2 Score: {average_rouge_scores['rouge2']:.4f}")
print(f"Average ROUGE-L Score: {average_rouge_scores['rougeL']:.4f}")

Output:

Average ROUGE-1 Score: 0.3434
Average ROUGE-2 Score: 0.1009
Average ROUGE-L Score: 0.1907

The above output shows the ROUGE scores achieved by the Claude 3.5 Sonnet model.
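To make these numbers easier to interpret, here is a simplified, from-scratch version of the ROUGE-1 F-measure, which is based on the overlap of single words between the reference and the generated text. This is only a sketch for intuition: unlike the rouge-score library configured above, it applies no stemming, and its tokenization is our own approximation, so its values will not exactly match the library's. The function name simple_rouge1_f is hypothetical.

```python
import re
from collections import Counter


def simple_rouge1_f(reference, candidate):
    """Simplified ROUGE-1 F-measure: unigram overlap, no stemming."""
    # Lowercase and keep alphanumeric runs as tokens (an approximation)
    ref_counts = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    cand_counts = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))

    # Counter intersection keeps the minimum count of each shared word
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0

    precision = overlap / sum(cand_counts.values())  # share of candidate words that match
    recall = overlap / sum(ref_counts.values())      # share of reference words recovered
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
print(round(simple_rouge1_f(reference, candidate), 4))  # 0.8333
```

In the toy example, five of the six words overlap, so precision and recall are both 5/6. ROUGE-2 applies the same idea to pairs of consecutive words, and ROUGE-L is based on the longest common subsequence, which is why those scores are usually lower than ROUGE-1.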

Zero-shot Text Summarization with GPT-4o

Next, we will perform zero-shot text summarization using the GPT-4o model.

The following script creates the OpenAI client object.

client = OpenAI(
    api_key = os.environ.get('OPENAI_API_KEY'),
)

model = "gpt-4o"

Next, we pass the first 20 articles from the dataset to the GPT-4o model to get article highlights. Finally, we evaluate the model performance using the calculate_rouge() function.

results = []

i = 0
for _, row in dataset[:20].iterrows():
    article = row['article']
    highlight = row['highlights']

    i = i + 1
    print(f"Summarizing article {i}")

    prompt = f"Create highlights of the following article in 320 characters. The highlights should look like they were human created:\n\n{article}\n\nSummary:"

    generated_summary = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        max_tokens=320,
        temperature=0.7
    ).choices[0].message.content

    rouge_scores = calculate_rouge(highlight, generated_summary)

    results.append({
        'article_id': row['id'],
        'generated_summary': generated_summary,
        'rouge1': rouge_scores['rouge1'],
        'rouge2': rouge_scores['rouge2'],
        'rougeL': rouge_scores['rougeL']
    })

The following script prints the ROUGE scores for the OpenAI GPT-4o model.

results_df = pd.DataFrame(results)
average_rouge_scores = results_df[['rouge1', 'rouge2', 'rougeL']].mean()

print(f"Average ROUGE-1 Score: {average_rouge_scores['rouge1']:.4f}")
print(f"Average ROUGE-2 Score: {average_rouge_scores['rouge2']:.4f}")
print(f"Average ROUGE-L Score: {average_rouge_scores['rougeL']:.4f}")

Output:

Average ROUGE-1 Score: 0.4120
Average ROUGE-2 Score: 0.1429
Average ROUGE-L Score: 0.2255

Final Verdict

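In our experiments, GPT-4o scored higher than Claude 3.5 Sonnet on both zero-shot text classification (0.67 vs. 0.65 accuracy) and zero-shot text summarization (higher ROUGE-1, ROUGE-2, and ROUGE-L scores). These results come from small samples (100 complaints and 20 articles) and a single prompt per task, so we recommend you try different prompts to see if you get different results. But for now, we’ll stick with ChatGPT for our everyday tasks.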