Large language models (LLMs) have revolutionized the way people interact with software applications. You can use natural language commands to instruct AI agents to perform specific tasks.

One of the most useful LLM applications is interacting with a database using natural language, as it allows non-technical users to issue commands and retrieve data from databases, which would otherwise require some database expertise.

In this tutorial, you’ll see how to develop a MySQL AI agent using the OpenAI GPT-4o model that allows you to interact with a MySQL database using natural language. We will utilize the LangGraph orchestration framework to develop the AI agent.

Installing and Importing Required Libraries

You need to install the following libraries to run scripts in this tutorial.

#LangGraph + LangChain + OpenAI + MySQL driver + SQLAlchemy

%pip install -U langgraph langgraph-prebuilt langchain_community "langchain[openai]" pymysql sqlalchemyThe script below imports the required libraries.

from langchain_community.utilities import SQLDatabase

from langchain_openai import ChatOpenAI

from langchain_community.agent_toolkits import SQLDatabaseToolkit

from langgraph.prebuilt import create_react_agent

from IPython.display import Image, display

from langchain_core.runnables.graph import CurveStyle, MermaidDrawMethod, NodeStylesCreating a MySQL Connection and Retrieving DB Information

To execute sample codes, we store the dummy employees database on a local MySQL server.

The following script uses the LangChain SQLDatabase utility to retrieve information from the MySQL database.

We will pass this information on to our AI agent, which will then generate accurate MySQL queries based on natural language commands.

##database link

##https://github.com/datacharmer/test_db

db_uri = "mysql+pymysql://root:abcd123@localhost:3306/employees"

db = SQLDatabase.from_uri(db_uri)

print("Dialect:", db.dialect)

print("Tables :", db.get_usable_table_names()[:6], "...")Output:

Dialect: mysql

Tables : ['departments', 'dept_emp', 'dept_manager', 'employees', 'salaries', 'titles'] ...Importing LangChain Database Tools

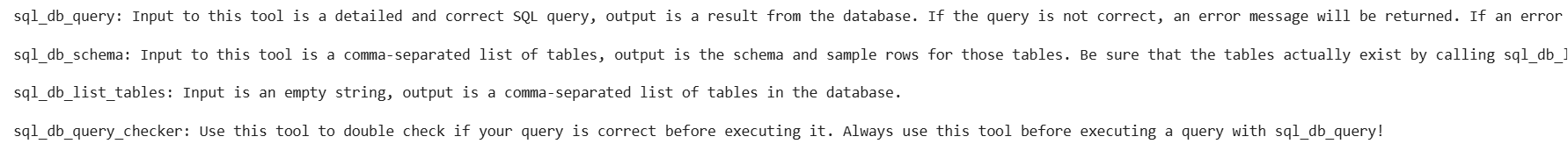

LangChain’s SQLDatabaseToolkit contains tools that allow you to generate a SQL query from natural language, verify the query’s accuracy, retrieve the database schema, and list database tables.

These tools are used by LangGraph AI agents while retrieving information from a database.

The following script imports these tools into your application and prints their descriptions. Notice the placeholder below where you’ll need to enter your own OpenAI API key.

openai_api_key = "YOUR_OPENAI_API_KEY"

llm = ChatOpenAI(api_key = openai_api_key,

temperature = 0,

model_name = "gpt-4o")toolkit = SQLDatabaseToolkit(db=db, llm=llm)

tools = toolkit.get_tools()

for tool in tools:

print(f"{tool.name}: {tool.description}\n")Output:

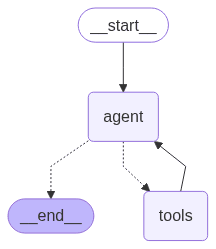

Creating MySQL Agent using LangGraph Default ReAct Agent

Creating an agent in LangGraph is straightforward. You can use LangGraph’s default ReAct agent, which, given an input and a prompt, automatically selects and executes various tools to generate the best possible response.

The following script creates a MySQL ReAct agent in LangGraph. The prompt informs the Agent of the type of input it will receive and the steps it should take to generate a response.

from langgraph.prebuilt import create_react_agent

system_prompt = """

You are an agent designed to interact with a SQL database.

Given an input question, create a syntactically correct {dialect} query to run,

then look at the results of the query and return the answer. Unless the user

specifies a specific number of examples they wish to obtain, always limit your

query to at most {top_k} results.

You can order the results by a relevant column to return the most interesting

examples in the database. Never query for all the columns from a specific table,

only ask for the relevant columns given the question.

You MUST double check your query before executing it. If you get an error while

executing a query, rewrite the query and try again.

DO NOT make any DML statements (INSERT, UPDATE, DELETE, DROP etc.) to the

database.

To start you should ALWAYS look at the tables in the database to see what you

can query. Do NOT skip this step.

Then you should query the schema of the most relevant tables.

""".format(

dialect=db.dialect,

top_k=5,

)

agent = create_react_agent(

llm,

tools,

prompt=system_prompt,

)

display(Image(agent.get_graph().draw_mermaid_png()))Output:

Let’s test the AI agent by asking a question. The typo (employes) in the question below is intentional. The output will show that our MySQL agent is capable of comprehending natural language queries, even if they contain minor errors.

question = "Give me the names of the top three employes with highest salaries"

for step in Agent.stream(

{"messages": [{"role": "user", "content": question}]},

stream_mode="values",

):

step["messages"][-1].pretty_print()Output:

The following output displays the flow of messages and corresponding tool calls executed by the MySQL agent to generate the final response.

The agent first called the sql_db_list_tables tools as mentioned in the prompt, which returned all the table names. It then called the sql_db_schema tool to retrieve the scheme of the relevant tables, which are employees, and salaries tables in our case. The agent generates a query and then calls the sql_db_query_checker tool to see if the query is correct. Finally, it calls the sql_db_query tool to execute the query.

You can see the names of the top three employees with highest salaries.

================================ Human Message =================================

Give me the names of the top three employes with highest salaries

================================== Ai Message ==================================

Tool Calls:

sql_db_list_tables (call_bzgBY6HeV69vEhUWyRdRjHHU)

Call ID: call_bzgBY6HeV69vEhUWyRdRjHHU

Args:

================================= Tool Message =================================

Name: sql_db_list_tables

departments, dept_emp, dept_manager, employees, salaries, titles

================================== Ai Message ==================================

Tool Calls:

sql_db_schema (call_TcCnyCqrR6elKtSSmm2WhsNc)

Call ID: call_TcCnyCqrR6elKtSSmm2WhsNc

Args:

table_names: employees, salaries

================================= Tool Message =================================

Name: sql_db_schema

CREATE TABLE employees (

emp_no INTEGER NOT NULL,

birth_date DATE NOT NULL,

first_name VARCHAR(14) NOT NULL,

last_name VARCHAR(16) NOT NULL,

gender ENUM('M','F') NOT NULL,

hire_date DATE NOT NULL,

PRIMARY KEY (emp_no)

)DEFAULT CHARSET=utf8mb4 COLLATE utf8mb4_0900_ai_ci ENGINE=InnoDB

/*

3 rows from employees table:

emp_no birth_date first_name last_name gender hire_date

10001 1953-09-02 Georgi Facello M 1986-06-26

10002 1964-06-02 Bezalel Simmel F 1985-11-21

10003 1959-12-03 Parto Bamford M 1986-08-28

*/

CREATE TABLE salaries (

emp_no INTEGER NOT NULL,

salary INTEGER NOT NULL,

from_date DATE NOT NULL,

to_date DATE NOT NULL,

PRIMARY KEY (emp_no, from_date),

CONSTRAINT salaries_ibfk_1 FOREIGN KEY(emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE

)DEFAULT CHARSET=utf8mb4 COLLATE utf8mb4_0900_ai_ci ENGINE=InnoDB

/*

3 rows from salaries table:

emp_no salary from_date to_date

10001 60117 1986-06-26 1987-06-26

10001 62102 1987-06-26 1988-06-25

10001 66074 1988-06-25 1989-06-25

*/

================================== Ai Message ==================================

Tool Calls:

sql_db_query_checker (call_F2w7ubGL6p8yNjyzuxAps09Z)

Call ID: call_F2w7ubGL6p8yNjyzuxAps09Z

Args:

query: SELECT e.first_name, e.last_name, s.salary FROM employees e JOIN salaries s ON e.emp_no = s.emp_no ORDER BY s.salary DESC LIMIT 3;

================================= Tool Message =================================

Name: sql_db_query_checker

```sql

SELECT e.first_name, e.last_name, s.salary FROM employees e JOIN salaries s ON e.emp_no = s.emp_no ORDER BY s.salary DESC LIMIT 3;

```

================================== Ai Message ==================================

Tool Calls:

sql_db_query (call_5GYtmevKF2TyQJXcGfEH8ZzM)

Call ID: call_5GYtmevKF2TyQJXcGfEH8ZzM

Args:

query: SELECT e.first_name, e.last_name, s.salary FROM employees e JOIN salaries s ON e.emp_no = s.emp_no ORDER BY s.salary DESC LIMIT 3;

================================= Tool Message =================================

Name: sql_db_query

[('Tokuyasu', 'Pesch', 158220), ('Tokuyasu', 'Pesch', 157821), ('Honesty', 'Mukaidono', 156286)]

================================== Ai Message ==================================

The top three employees with the highest salaries are:

1. Tokuyasu Pesch with a salary of 158,220

2. Tokuyasu Pesch with a salary of 157,821

3. Honesty Mukaidono with a salary of 156,286The LangGraph ReAct agent provides the thought-action-observation loop out of the box. However, to have more control over tool execution, you can customize a LangGraph AI agent, as shown in the following section.

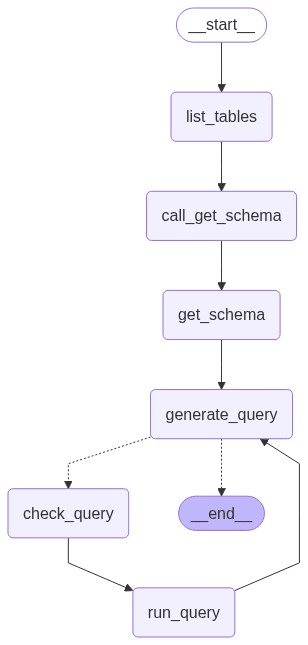

Creating a Customized MySQL Agent with LangGraph

To create a customized MySQL agent, we will first define two high-level tools: get_schema_tool, which internally calls the sql_db_schema tool, and run_query_tool, which calls the sql_db_query tool from the SQLDatabaseToolkit.

from typing import Literal

from langchain_core.messages import AIMessage

from langchain_core.runnables import RunnableConfig

from langgraph.graph import END, START, MessagesState, StateGraph

from langgraph.prebuilt import ToolNodeget_schema_tool = next(tool for tool in tools if tool.name == "sql_db_schema")

get_schema_node = ToolNode([get_schema_tool], name="get_schema")

run_query_tool = next(tool for tool in tools if tool.name == "sql_db_query")

run_query_node = ToolNode([run_query_tool], name="run_query")We want to force the agent to always call the sql_db_list_tables tool first. To achieve this, we define the list_tables method, which we will call from the first node in our graph.

def list_tables(state: MessagesState):

tool_call = {

"name": "sql_db_list_tables",

"args": {},

"id": "abc123",

"type": "tool_call",

}

tool_call_message = AIMessage(content="", tool_calls=[tool_call])

list_tables_tool = next(tool for tool in tools if tool.name == "sql_db_list_tables")

tool_message = list_tables_tool.invoke(tool_call)

response = AIMessage(f"Available tables: {tool_message.content}")

return {"messages": [tool_call_message, tool_message, response]}Next, we define the call_get_schema function, which calls the get_schema_tool that we defined earlier. This will be the second node in our graph.

def call_get_schema(state: MessagesState):

llm_with_tools = llm.bind_tools([get_schema_tool], tool_choice="any")

response = llm_with_tools.invoke(state["messages"])

return {"messages": [response]}Finally we define the prompts for nodes that generate a query and check if the query is valid.

generate_query_system_prompt = """

You are an agent designed to interact with a SQL database.

Given an input question, create a syntactically correct {dialect} query to run,

then look at the results of the query and return the answer. Unless the user

specifies a specific number of examples they wish to obtain, always limit your

query to at most {top_k} results.

You can order the results by a relevant column to return the most interesting

examples in the database. Never query for all the columns from a specific table,

only ask for the relevant columns given the question.

DO NOT make any DML statements (INSERT, UPDATE, DELETE, DROP etc.) to the database.

""".format(

dialect=db.dialect,

top_k=5,

)

def generate_query(state: MessagesState):

system_message = {

"role": "system",

"content": generate_query_system_prompt,

}

llm_with_tools = llm.bind_tools([run_query_tool])

response = llm_with_tools.invoke([system_message] + state["messages"])

return {"messages": [response]}check_query_system_prompt = """

You are a SQL expert with a strong attention to detail.

Double check the {dialect} query for common mistakes, including:

- Using NOT IN with NULL values

- Using UNION when UNION ALL should have been used

- Using BETWEEN for exclusive ranges

- Data type mismatch in predicates

- Properly quoting identifiers

- Using the correct number of arguments for functions

- Casting to the correct data type

- Using the proper columns for joins

If there are any of the above mistakes, rewrite the query. If there are no mistakes,

just reproduce the original query.

You will call the appropriate tool to execute the query after running this check.

""".format(dialect=db.dialect)

def check_query(state: MessagesState):

system_message = {

"role": "system",

"content": check_query_system_prompt,

}

# Generate an artificial user message to check

tool_call = state["messages"][-1].tool_calls[0]

user_message = {"role": "user", "content": tool_call["args"]["query"]}

llm_with_tools = llm.bind_tools([run_query_tool], tool_choice="any")

response = llm_with_tools.invoke([system_message, user_message])

response.id = state["messages"][-1].id

return {"messages": [response]}We also define the should_continue function, which checks if a query is accurate or not. If it is accurate, it is sent to the run_query node; otherwise, the query is generated again.

def should_continue(state: MessagesState) -> Literal[END, "check_query"]:

messages = state["messages"]

last_message = messages[-1]

if not last_message.tool_calls:

return END

else:

return "check_query"The next step is to define the LangGraph with the node functions we defined. The graph executes in sequence, following the order of the nodes in the graph.

builder = StateGraph(MessagesState)

builder.add_node(list_tables)

builder.add_node(call_get_schema)

builder.add_node(get_schema_node, "get_schema")

builder.add_node(generate_query)

builder.add_node(check_query)

builder.add_node(run_query_node, "run_query")

builder.add_edge(START, "list_tables")

builder.add_edge("list_tables", "call_get_schema")

builder.add_edge("call_get_schema", "get_schema")

builder.add_edge("get_schema", "generate_query")

builder.add_conditional_edges(

"generate_query",

should_continue,

)

builder.add_edge("check_query", "run_query")

builder.add_edge("run_query", "generate_query")

agent = builder.compile()

display(Image(agent.get_graph().draw_mermaid_png()))Output:

The above script shows our final AI agent graph.

Let’s ask a question and see the outputs.

question = "Give me the names of the top three employes with highest salaries"

for step in Agent.stream(

{"messages": [{"role": "user", "content": question}]},

stream_mode="values",

):

step["messages"][-1].pretty_print()Output:

The output below shows that the graph executes the nodes in the same order as they are added in the graph.

================================ Human Message =================================

Give me the names of the top three employes with highest salaries

================================== Ai Message ==================================

Available tables: departments, dept_emp, dept_manager, employees, salaries, titles

================================== Ai Message ==================================

Tool Calls:

sql_db_schema (call_Q9g0WWat9Ti4frZXnNVYFBpq)

Call ID: call_Q9g0WWat9Ti4frZXnNVYFBpq

Args:

table_names: employees, salaries

================================= Tool Message =================================

Name: sql_db_schema

CREATE TABLE employees (

emp_no INTEGER NOT NULL,

birth_date DATE NOT NULL,

first_name VARCHAR(14) NOT NULL,

last_name VARCHAR(16) NOT NULL,

gender ENUM('M','F') NOT NULL,

hire_date DATE NOT NULL,

PRIMARY KEY (emp_no)

)DEFAULT CHARSET=utf8mb4 COLLATE utf8mb4_0900_ai_ci ENGINE=InnoDB

/*

3 rows from employees table:

emp_no birth_date first_name last_name gender hire_date

10001 1953-09-02 Georgi Facello M 1986-06-26

10002 1964-06-02 Bezalel Simmel F 1985-11-21

10003 1959-12-03 Parto Bamford M 1986-08-28

*/

CREATE TABLE salaries (

emp_no INTEGER NOT NULL,

salary INTEGER NOT NULL,

from_date DATE NOT NULL,

to_date DATE NOT NULL,

PRIMARY KEY (emp_no, from_date),

CONSTRAINT salaries_ibfk_1 FOREIGN KEY(emp_no) REFERENCES employees (emp_no) ON DELETE CASCADE

)DEFAULT CHARSET=utf8mb4 COLLATE utf8mb4_0900_ai_ci ENGINE=InnoDB

/*

3 rows from salaries table:

emp_no salary from_date to_date

10001 60117 1986-06-26 1987-06-26

10001 62102 1987-06-26 1988-06-25

10001 66074 1988-06-25 1989-06-25

*/

================================== Ai Message ==================================

Tool Calls:

sql_db_query (call_OEqSCKEeVU5NRM7VXdhXkVVp)

Call ID: call_OEqSCKEeVU5NRM7VXdhXkVVp

Args:

query: SELECT e.first_name, e.last_name, s.salary

FROM employees e

JOIN salaries s ON e.emp_no = s.emp_no

ORDER BY s.salary DESC

LIMIT 3;

================================== Ai Message ==================================

Tool Calls:

sql_db_query (call_v0sSVGHXcF81WbRCFTxmGRND)

Call ID: call_v0sSVGHXcF81WbRCFTxmGRND

Args:

query: SELECT e.first_name, e.last_name, s.salary

FROM employees e

JOIN salaries s ON e.emp_no = s.emp_no

ORDER BY s.salary DESC

LIMIT 3;

================================= Tool Message =================================

Name: sql_db_query

[('Tokuyasu', 'Pesch', 158220), ('Tokuyasu', 'Pesch', 157821), ('Honesty', 'Mukaidono', 156286)]

================================== Ai Message ==================================

The top three employees with the highest salaries are:

1. Tokuyasu Pesch with a salary of 158,220

2. Tokuyasu Pesch with a salary of 157,821

3. Honesty Mukaidono with a salary of 156,286The final output displays the same response as was observed with the ReAct agent. However, in this case, you have much more control over the execution of steps in the Graph.