In our last tutorial, we explained how to develop multi-agent chatbots with LangGraph. In this tutorial, we’ll go a step further and explain how to develop a Retrieval-Augmented Generation (RAG) system with LangGraph.

Until recently, many RAG and chatbot applications were built with LangChain agents. However, because LangGraph is designed for agentic, stateful workflows, many companies are now adopting it as their default framework for these applications.

LangGraph is a framework built on top of LangChain. It does not entirely replace LangChain; rather, it uses various components from LangChain.

Installing and Importing Required Libraries

The following script installs the libraries required to run the scripts in this tutorial.

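!pip install langchain

!pip install langchain-community

!pip install langchain-openai

!pip install langchain-text-splitters

!pip install langgraph

!pip install langchain-core

!pip install pypdf

!pip install chromadb

The script below imports the required libraries into your Python application. It reads your OpenAI API key from Google Colab’s secrets manager (google.colab.userdata); if you run the code outside Colab, set the OPENAI_API_KEY environment variable yourself.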

from langchain_openai import ChatOpenAI

from langchain_openai import OpenAIEmbeddings

from langchain_community.document_loaders import PyPDFLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain_core.documents import Document

from langchain_community.vectorstores import Chroma

from langgraph.graph import START, StateGraph

from langgraph.checkpoint.memory import MemorySaver

from typing_extensions import List, TypedDict

from IPython.display import Image, display

import os

from google.colab import userdata

os.environ["OPENAI_API_KEY"] = userdata.get('OPENAI_API_KEY')

Data Ingestion into Vector Database

The first step in a RAG application is to load the data, create vector embeddings from it, and store those embeddings in a vector database.

The following script loads text from a PDF document containing Alphabet’s Q3 2024 earnings release.

data_url = "https://abc.xyz/assets/71/a5/78197a7540c987f13d247728a371/2024q3-alphabet-earnings-release.pdf"

loader = PyPDFLoader(data_url)

docs = loader.load()

Next, we split the PDF document into smaller chunks of 1,000 characters, with a 200-character overlap between consecutive chunks. Smaller chunks help the retriever return passages that closely match the user’s query rather than whole pages. The overlap preserves context across chunk boundaries: text near the end of one chunk is repeated at the start of the next, so a sentence that straddles a boundary is less likely to be cut in half in both chunks.
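To make the role of the overlap concrete, here is a minimal sketch of a fixed-width character chunker. It is a simplification under stated assumptions: `chunk_text` is a hypothetical helper, and unlike `RecursiveCharacterTextSplitter`, it does not try to break at paragraph, line, or word boundaries, so every chunk except the last has exactly `chunk_size` characters.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[tuple[int, str]]:
    """Hypothetical helper: split text into fixed-width, overlapping character windows.

    Returns (start_index, chunk) pairs, similar in spirit to add_start_index=True.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # each window starts this many characters after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append((start, text[start:start + chunk_size]))
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


sample = "".join(chr(ord("a") + i % 26) for i in range(2500))
pieces = chunk_text(sample)
print([(start, len(chunk)) for start, chunk in pieces])  # [(0, 1000), (800, 1000), (1600, 900)]
# The last 200 characters of one chunk are the first 200 characters of the next.
print(pieces[0][1][-200:] == pieces[1][1][:200])  # True
```

With 1,000-character chunks and a 200-character overlap, each new chunk starts 800 characters after the previous one, which is why a 2,500-character text yields three chunks.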

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=1000, # chunk size (characters)

chunk_overlap=200, # chunk overlap (characters)

add_start_index=True, # track index in original document

)

all_splits = text_splitter.split_documents(docs)

print(f"Document split into {len(all_splits)} sub-documents.")

Output:

Document split into 33 sub-documents.

Finally, we create vector embeddings from the text chunks and insert them into a Chroma vector database (you can use another vector database if you prefer). We’ll use OpenAI embeddings to convert the text chunks into vectors.
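Conceptually, a vector store keeps one vector per chunk and answers a query by embedding the query and returning the chunks whose vectors are most similar. The sketch below illustrates that idea with a hypothetical `ToyVectorStore` and a word-count "embedding" standing in for OpenAI embeddings. The `k=4` default mirrors LangChain’s `similarity_search` default; cosine similarity is a common choice but an assumption here, not necessarily the metric your vector database uses by default.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory vector store holding (vector, text) pairs."""

    def __init__(self, texts: list[str]):
        # "Ingestion": embed every chunk once and keep the vector next to its text.
        self.entries = [(embed(t), t) for t in texts]

    def similarity_search(self, query: str, k: int = 4) -> list[str]:
        # Embed the query and return the k most similar chunks, best match first.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[0]), reverse=True)
        return [text for _, text in ranked[:k]]


store = ToyVectorStore([
    "Google Cloud revenue grew in the third quarter.",
    "YouTube ads revenue increased year over year.",
    "Operating expenses include research and development.",
])
print(store.similarity_search("How much was YouTube ads revenue?", k=1))
```

Real embedding models produce dense vectors that capture meaning rather than shared words, but the ingest-then-rank flow is the same.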

embeddings = OpenAIEmbeddings()

vector_store= Chroma.from_documents(

documents=all_splits,

embedding=embeddings

)That’s all we have to do for our data ingestion. The next step is to retrieve it.

Data Retrieval

Before retrieving data, we need a prompt that combines the context (the documents retrieved from the vector database) with the user’s question before it is sent to the LLM. You can write your own prompt or pull a ready-made RAG prompt from the LangChain Hub, as the following script shows.
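If you write your own prompt instead of pulling one from the hub, the core idea is a template with `{context}` and `{question}` placeholders. The sketch below uses a plain Python string; `RAG_TEMPLATE` and `build_prompt` are hypothetical names, and the template wording is an assumption, not the text of `rlm/rag-prompt`. The retrieved chunks are joined with blank lines, as the generate() function later in this tutorial does.

```python
RAG_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say that you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, chunks: list[str]) -> str:
    """Join the retrieved chunks with blank lines and fill the template."""
    context = "\n\n".join(chunks)
    return RAG_TEMPLATE.format(context=context, question=question)


print(build_prompt(
    "What was Google Cloud revenue in Q3 2024?",
    ["(first retrieved chunk)", "(second retrieved chunk)"],
))
```

In LangChain, you could wrap the same string with `ChatPromptTemplate.from_template()` from `langchain_core.prompts` to get a prompt object that, like the hub prompt, is filled with `invoke()`.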

from langchain import hub

prompt = hub.pull("rlm/rag-prompt")

example_messages = prompt.invoke(

{"context": "(context goes here)", "question": "(question goes here)"}

).to_messages()

assert len(example_messages) == 1

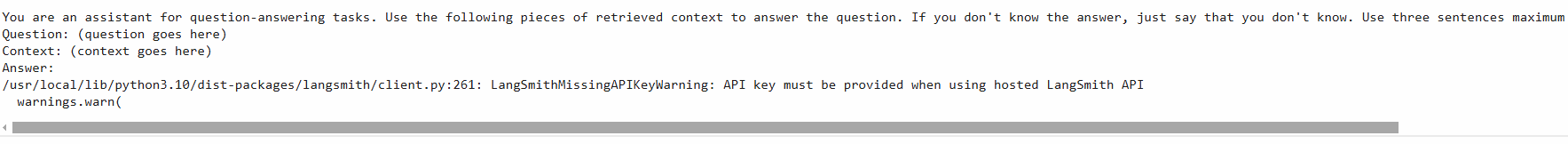

print(example_messages[0].content)Output:

The above output shows the prompt we will use to invoke an LLM.

Next, we define the state of our LangGraph graph. A LangGraph state holds the information shared between the nodes and edges of a graph. In our case, the state has three attributes: question, which holds the user query; context, which holds the documents retrieved from the vector database; and answer, which holds the LLM response.

We also define the retrieve() function, which will serve as a node in our graph. It returns the documents from the vector database that match the user query, and these documents are stored in the state’s context attribute.

Let’s test the retrieve() function with a question and see which documents it returns.

class State(TypedDict):
    question: str
    context: List[Document]
    answer: str


def retrieve(state: State):
    # Return the chunks most similar to the user's question
    retrieved_docs = vector_store.similarity_search(state["question"])
    return {"context": retrieved_docs}

context = retrieve({"question":"How much did Alphabet make from YouTube Ads Revenue?"})

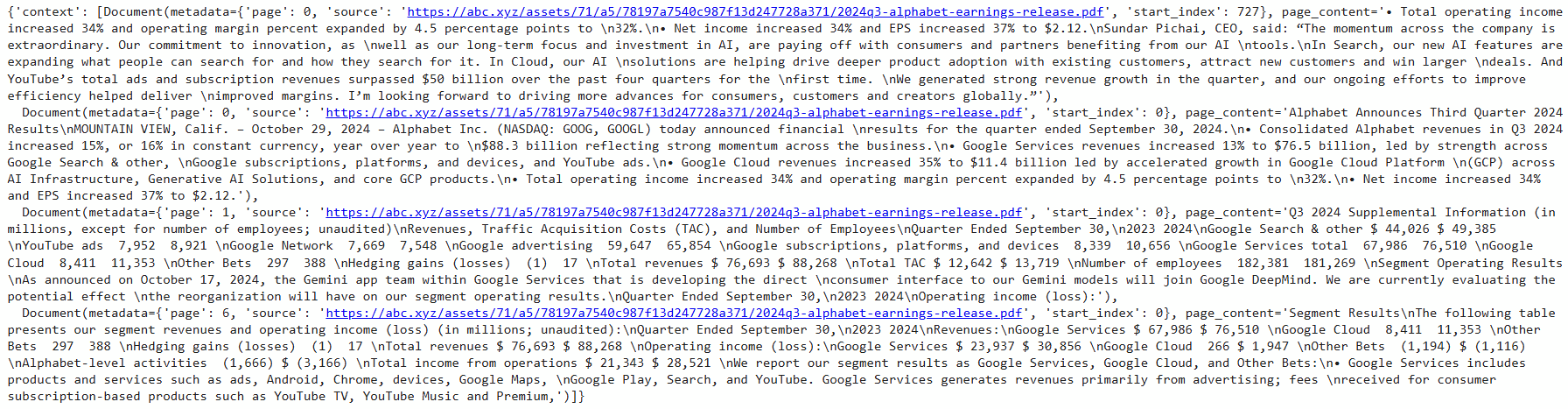

contextOutput:

Most of the documents are relevant to the user query.

Response Generation

In the generation step, the user question and the retrieved context documents are passed to the LLM to generate a response.

To do so, we define the generate() function. It concatenates the content of the retrieved documents into a single context string, inserts the context and the user query into the prompt, and sends the prompt to OpenAI’s GPT-4 model. Finally, it stores the LLM’s response in the state’s answer attribute.

llm = ChatOpenAI(model="gpt-4")


def generate(state: State):
    # Concatenate the retrieved chunks into a single context string
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    # Fill the prompt template with the question and the context
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}

Putting It All Together: Creating a RAG LangGraph Agent

To create a LangGraph agent, we use the StateGraph class and pass it the State class we defined earlier. The add_sequence() method adds the retrieve and generate nodes and connects them in that order, and add_edge(START, "retrieve") makes retrieve the entry point of the graph.

Finally, we compile the graph and display its structure.

graph_builder = StateGraph(State).add_sequence([retrieve, generate])

graph_builder.add_edge(START, "retrieve")

graph = graph_builder.compile()

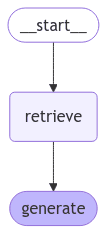

display(Image(graph.get_graph().draw_mermaid_png()))Output:

The above output shows that we have two nodes in the graph: retrieve and generate, corresponding to the two functions we defined earlier. The input to the graph will be the user query in the question attribute of the state object. The retrieve node will retrieve the context related to the user query and store it in the context attribute.

Next, the generate node will extract the context and the question attributes from the state object and embed them into the prompt sent to the LLM. The LLM’s response is stored in the answer attribute.
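As a mental model (not how LangGraph is implemented internally), running this two-node graph amounts to starting from the input state, calling each node in order, and merging the partial dictionary each node returns into the shared state. For state keys without a custom reducer, LangGraph’s default is to overwrite the old value. In the sketch, `run_sequence` is a hypothetical helper, and the two nodes are stubs that do not call a vector store or an LLM.

```python
from typing import Callable, List, TypedDict


class ToyState(TypedDict, total=False):
    question: str
    context: List[str]
    answer: str


def stub_retrieve(state: ToyState) -> dict:
    # Stub retriever: a real node would query the vector store with state["question"].
    return {"context": [f"(chunk about: {state['question']})"]}


def stub_generate(state: ToyState) -> dict:
    # Stub generator: a real node would send the question and context to the LLM.
    return {"answer": f"Answer based on {len(state['context'])} chunk(s)."}


def run_sequence(nodes: List[Callable[[ToyState], dict]], inputs: ToyState) -> ToyState:
    """Hypothetical helper: START -> nodes[0] -> nodes[1] -> ... -> END."""
    state: ToyState = dict(inputs)
    for node in nodes:
        update = node(state)         # each node returns only the keys it changes
        state = {**state, **update}  # default merge: overwrite those keys
    return state


toy_result = run_sequence([stub_retrieve, stub_generate], {"question": "What is Google Cloud revenue?"})
print(toy_result["answer"])  # Answer based on 1 chunk(s).
```

Because each node returns only the keys it updates, retrieve never needs to know about answer, and generate never needs to copy question or context forward.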

You can now run the graph using its invoke() method. Let’s test it with a simple question: the following script asks about Google Cloud’s revenue for Q3 2023 and Q3 2024.

inputs = {"question": "What is Google Cloud revenue in Q3 2023 and 2024?"}

result = graph.invoke(inputs)

print(f'Answer: {result["answer"]}')

Output:

Answer: The Google Cloud revenue in Q3 2023 was $8.411 billion. In Q3 2024, the Google Cloud revenue increased to $11.353 billion.

The above output is correct; you can verify it against the figures in the PDF document.

You can also stream the LLM’s response token by token using the graph’s stream() method with stream_mode="messages", as the following script shows.
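In "messages" mode, each item the stream yields is a (message_chunk, metadata) pair, and the metadata includes the name of the node that produced the chunk under the `langgraph_node` key, which lets you filter output when several nodes call an LLM. The sketch below imitates that shape offline; `fake_stream` is a hypothetical stand-in for `graph.stream(..., stream_mode="messages")`, and splitting the answer into words is an assumption (real chunks follow the model’s tokens).

```python
from types import SimpleNamespace
from typing import Iterator, Tuple


def fake_stream(answer: str) -> Iterator[Tuple[SimpleNamespace, dict]]:
    """Hypothetical stand-in for graph.stream(..., stream_mode="messages").

    Yields (message_chunk, metadata) pairs, one per word of the answer.
    """
    words = answer.split(" ")
    for i, word in enumerate(words):
        token = word if i == 0 else " " + word
        yield SimpleNamespace(content=token), {"langgraph_node": "generate"}


streamed = []
for message, metadata in fake_stream("Revenue grew year over year."):
    # Only print chunks produced by the generate node.
    if metadata["langgraph_node"] == "generate":
        print(message.content, end="")
        streamed.append(message.content)
print()
```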

inputs = {"question": "How much did Alphabet make from YouTube Ads Revenue in Q3 2023 and 2024?"}

# stream_mode="messages" yields (message_chunk, metadata) tuples as the LLM generates tokens
for message, metadata in graph.stream(inputs, stream_mode="messages"):
    print(message.content, end="")

Output:

Alphabet made $7.952 billion from YouTube ads revenue in Q3 2023 and $8.921 billion in Q3 2024.

Going Further: Adding Memory to Conversations

So far, our agent answers one question at a time, with no memory of earlier calls. LangGraph can also persist a graph’s state between calls, which is the basis for stateful, multi-turn conversations.

To do so, create a MemorySaver object and pass it to the checkpointer argument of the graph’s compile() method.

Then, when invoking the graph, pass a config dictionary whose "configurable" entry contains the conversation’s thread_id. Calls that use the same thread_id share the saved state.
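Conceptually, a checkpointer saves the graph’s state under the conversation’s thread_id and restores it the next time the same thread_id is used. The sketch below imitates that with a hypothetical `ToyCheckpointer` backed by a dictionary; MemorySaver also keeps its checkpoints in memory, but its real interface is different. The stub graph counts turns so you can see state carrying over, and it also shows that the new input overwrites the saved question.

```python
from typing import Callable, Dict


class ToyCheckpointer:
    """Hypothetical in-memory checkpointer: one saved state per thread_id."""

    def __init__(self) -> None:
        self.saved: Dict[str, dict] = {}

    def invoke(self, run: Callable[[dict], dict], inputs: dict, thread_id: str) -> dict:
        previous = self.saved.get(thread_id, {})  # restore this thread's last state, if any
        state = run({**previous, **inputs})       # new input keys overwrite restored ones
        self.saved[thread_id] = state             # persist for the next call on this thread
        return state


def run_graph(state: dict) -> dict:
    # Stub graph: count turns so we can see that state persists between calls.
    return {**state, "turns": state.get("turns", 0) + 1, "answer": f"re: {state['question']}"}


toy_memory = ToyCheckpointer()
toy_memory.invoke(run_graph, {"question": "Google Cloud revenue?"}, thread_id="1")
second = toy_memory.invoke(run_graph, {"question": "And for YouTube ads?"}, thread_id="1")
other = toy_memory.invoke(run_graph, {"question": "Hello"}, thread_id="2")
print(second["turns"], second["question"])  # 2 And for YouTube ads?
print(other["turns"])                       # 1
```

Thread "2" starts from an empty state, while thread "1" resumes from its saved one. Note that after the second call, the first question is no longer in the state unless the graph stores it somewhere explicitly.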

Let’s ask a question, followed by a second question that refers to the first one.

memory = MemorySaver()

graph = graph_builder.compile(checkpointer=memory)

config = {"configurable": {"thread_id": "1"}}

inputs = {"question": "What was Google Cloud's revenue in Q3 2023 and 2024?"}

result = graph.invoke(inputs, config=config)

print(f'Answer: {result["answer"]}')

Output:

Answer: The Google Cloud revenue in Q3 2023 was $8.411 billion. In Q3 2024, the revenue increased to $11.353 billion.

The output above shows the correct answer. Next, we ask a follow-up question that refers to the previous one.

inputs = {"question": "And for YouTube ads?"}

result = graph.invoke(inputs, config=config)

print(f'Answer: {result["answer"]}')

Output:

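Answer: The revenue from YouTube ads increased from $7,952 million in the third quarter of 2023 to $8,921 million in the third quarter of 2024. Furthermore, YouTube's total ads and subscription revenues surpassed $50 billion over the past four quarters for the first time. This indicates a positive growth trend in YouTube's ad revenue.

The answer covers the same two quarters as the previous question. Keep in mind how this graph works, though: the checkpointer saves the state for thread "1", but the new input overwrites the question attribute, and generate() sends only the current question and the freshly retrieved context to the LLM. The quarter-to-quarter comparison therefore most likely comes from the retrieved earnings-release chunks, which report Q3 2023 and Q3 2024 side by side, rather than from the earlier question. To have follow-up questions answered from the conversation itself, store earlier turns in the state and include them in the prompt; that is a good foundation for applications such as chatbots.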
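A minimal sketch of that idea, assuming you add a hypothetical `history` list of (question, answer) pairs to the state. In LangGraph you could declare such a key as `Annotated[list, operator.add]` so that the lists nodes return are appended rather than overwriting the previous value; the plain-Python `merge_history` below only imitates that reducer, and `build_prompt` with its wording is likewise hypothetical.

```python
import operator
from typing import List, Tuple

Turn = Tuple[str, str]  # (question, answer)


def merge_history(old: List[Turn], new: List[Turn]) -> List[Turn]:
    """Imitates an operator.add reducer: new turns are appended, not overwritten."""
    return operator.add(old, new)


def build_prompt(history: List[Turn], question: str, context: str) -> str:
    """Hypothetical prompt that includes earlier turns before the new question."""
    past = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in history)
    return (
        f"Conversation so far:\n{past or '(none)'}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


history: List[Turn] = []
history = merge_history(history, [("What was Google Cloud revenue in Q3 2023 and 2024?", "(first answer)")])
prompt_text = build_prompt(history, "And for YouTube ads?", "(retrieved chunks)")
print(prompt_text)
```

With the earlier turn in the prompt, the LLM can resolve "And for YouTube ads?" from the conversation. For retrieval, you might similarly combine the previous question with the follow-up before searching the vector store, since the follow-up alone carries little information.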

Conclusion

In this tutorial, you saw how to develop a RAG system with LangGraph and use it to extract information from a custom PDF document. LangGraph is an excellent framework for building agentic LLM applications, and it is especially worth considering if you are developing complex, multi-agent applications with LLMs.