This tutorial explains how to call the Twitter REST API with Python’s Tweepy library to scrape tweets. We’ll authenticate using Twitter’s OAuth 2.0 protocol.

Tweet scraping is an important task for many natural language processing applications, such as sentiment analysis, text classification, and topic modeling.

To access the Twitter REST API, you need an account on the Twitter Developer Portal.

Getting Your API Authentication Keys from Twitter

Once you create an account, go to your Projects and Apps Overview section. You’ll need to create a new App in order to get your Twitter API authentication keys and access tokens.

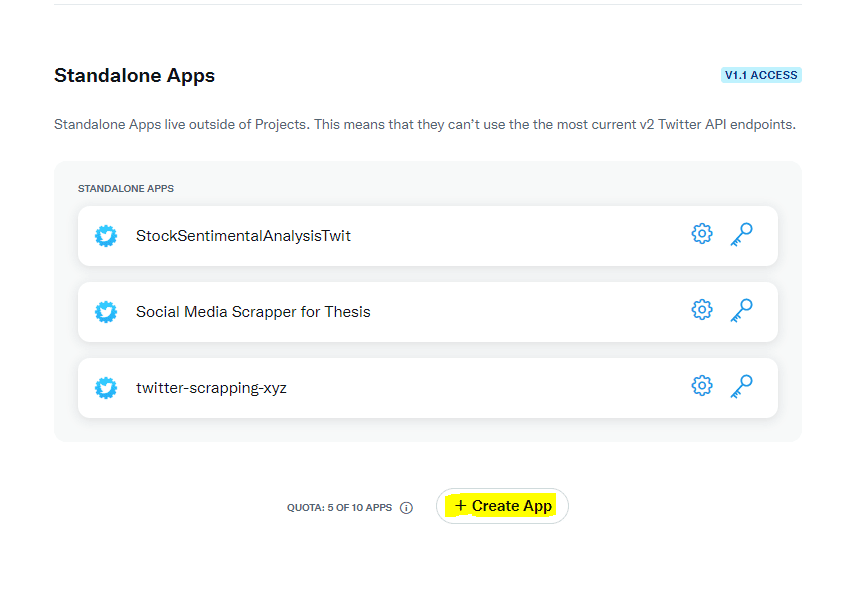

You can create a new project and then create an app inside that project, or you can create a standalone app. Although an app inside a project gives you more options through access to the v2 API, your quota of projects and project apps is smaller than your quota of standalone apps. For that reason, we’ll create a standalone app in this tutorial.

To do so, scroll down the Projects and Apps Overview page to the section labeled Standalone Apps. Click the Create App button just below any existing apps in this section, as highlighted in the following screenshot.

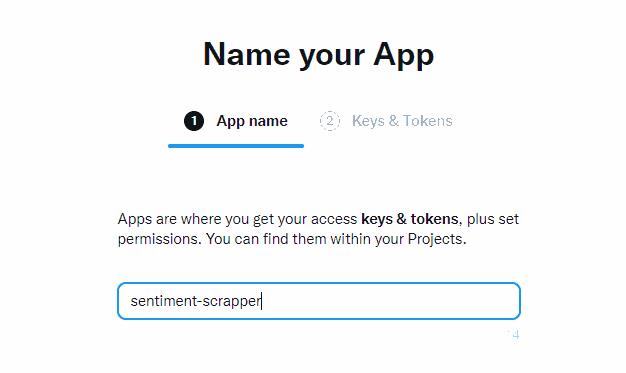

Give your app any name and press Enter. I named my app sentiment-scrapper [sic], as shown in the following screenshot.

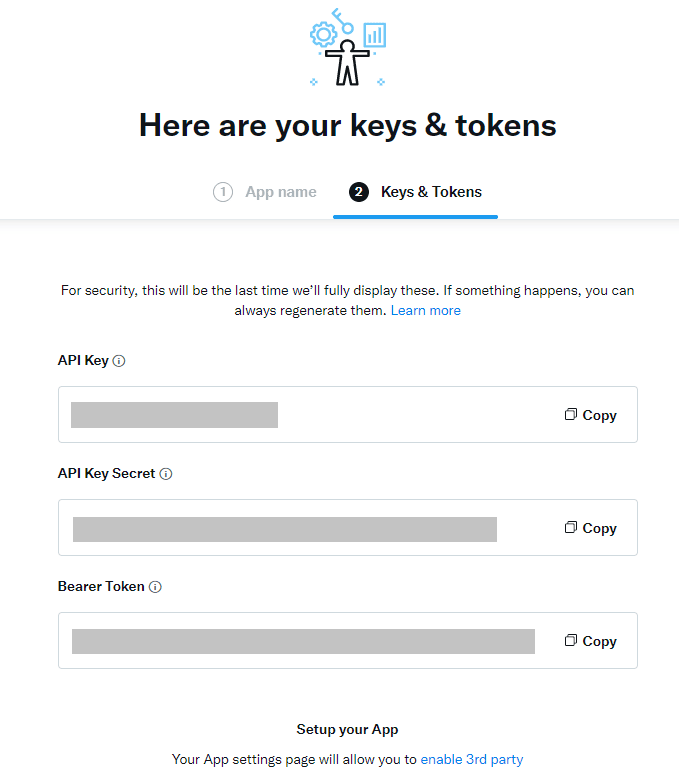

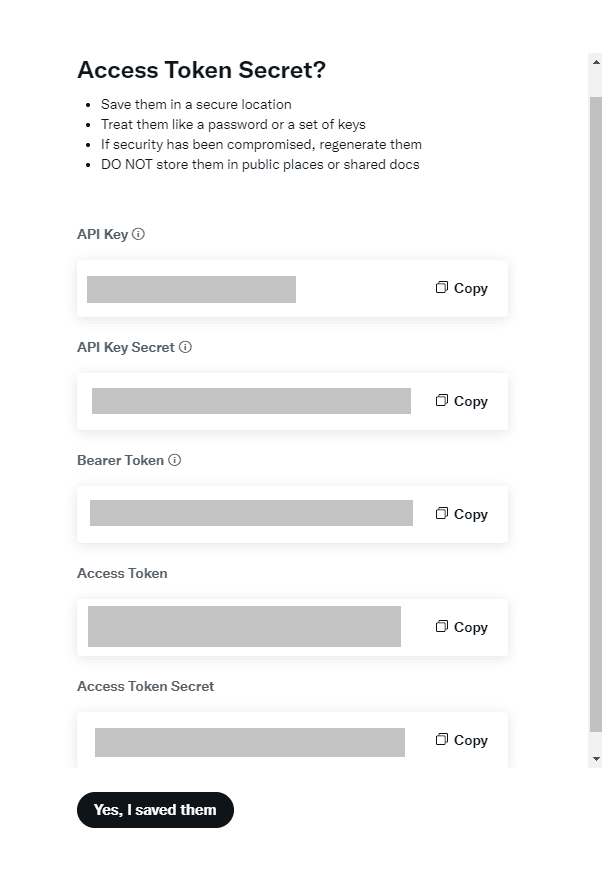

A window will appear containing your API Key, API Key Secret, and Bearer Token. Copy these keys to a safe place. If you lose them, you can regenerate the keys later.

Creating Twitter Access Tokens

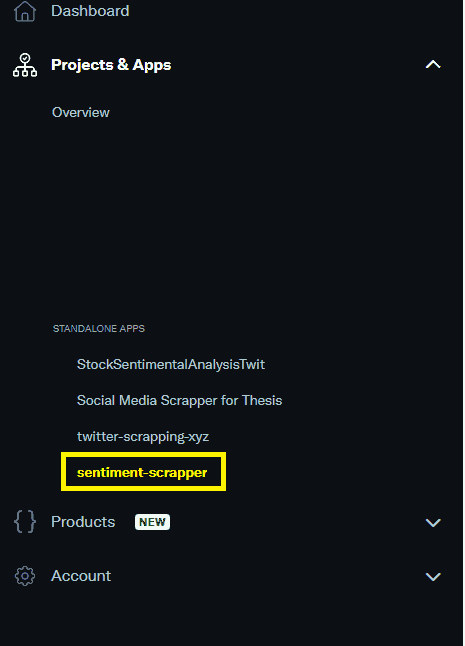

In the left pane of your dashboard, you should see your newly created application.

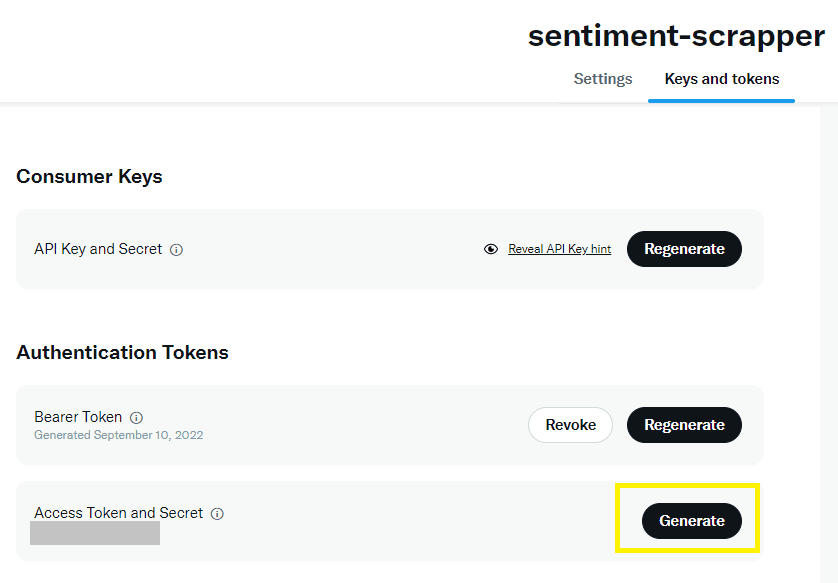

Click the application name, and then select the Keys and tokens option from the top menu.

Click the “Generate” button to generate your access token and access token secret.

Now that you have all your API and access keys, you’re ready to scrape tweets using Tweepy.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

Scraping Tweets with Tweepy

The first step when scraping tweets is to create an instance of the AppAuthHandler class. Pass your API key and API key secret to the AppAuthHandler constructor.

If you only want to scrape tweets using OAuth 2.0 (app-only) authentication, you don’t need the access token or access token secret.
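For context, app-only OAuth 2.0 works by exchanging your API key and secret for a bearer token. According to Twitter’s application-only authentication documentation, the client URL-encodes the key and the secret, joins them with a colon, Base64-encodes the result, and sends it in a Basic Authorization header when requesting the token. AppAuthHandler handles this exchange for you, so you don’t need the following code; it is only a stdlib sketch of how that header is built, and the function name basic_auth_header is hypothetical.

```python
import base64
from urllib.parse import quote


def basic_auth_header(api_key: str, api_secret: str) -> str:
    """Build the Basic Authorization header for an app-only bearer-token request.

    Hypothetical helper for illustration only: Tweepy's AppAuthHandler
    performs the token exchange for you.
    """
    # URL-encode each credential, then join them with a colon.
    credentials = f"{quote(api_key, safe='')}:{quote(api_secret, safe='')}"
    # Base64-encode the joined string and prefix it with the "Basic" scheme.
    encoded = base64.b64encode(credentials.encode("utf-8")).decode("ascii")
    return f"Basic {encoded}"


# Placeholder values, not real credentials.
print(basic_auth_header("abc", "def"))  # Basic YWJjOmRlZg==
```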

Next, we pass the AppAuthHandler object to Tweepy’s API class.

The resulting API object can now be used to scrape tweets from Twitter. Look at the following script for reference:

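```python
import os

import tweepy

# Read the API key and API key secret from environment variables.
# Note: most Unix shells can't export names containing hyphens; rename them if needed.
consumer_key = os.environ['twitter-api-key']
consumer_secret = os.environ['twitter-api-key-secret']

# App-only (OAuth 2.0) authentication: no access token is required.
auth = tweepy.AppAuthHandler(consumer_key, consumer_secret)
api = tweepy.API(auth)
```

Notice that we saved our Twitter API Key and API Key Secret as environment variables on our computer, so they don’t appear in the code itself, where anyone you share it with could see them. This is good practice.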
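If either environment variable is missing, os.environ[...] raises a bare KeyError. A small wrapper can report exactly which variables are absent. This is a minimal sketch; the helper name require_env is hypothetical, and it assumes the same variable names used in the script above.

```python
import os
from typing import Dict


def require_env(*names: str) -> Dict[str, str]:
    """Return the named environment variables, or fail with a clear message.

    Hypothetical helper for illustration.
    """
    # Treat unset and empty variables alike as missing.
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variable(s): " + ", ".join(missing))
    return {name: os.environ[name] for name in names}
```

With this helper, the two lookups could become creds = require_env('twitter-api-key', 'twitter-api-key-secret'), after which creds['twitter-api-key'] and creds['twitter-api-key-secret'] are passed to tweepy.AppAuthHandler.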

Scraping Tweets by Keywords

To search tweets by keyword, use the API’s search_tweets() method. With Tweepy’s default settings, search_tweets() returns a list of Status objects that wrap the JSON returned by Twitter, so you can read fields such as tweet.text and tweet.user.screen_name as attributes. To iterate through the results, including more of them than a single request returns, wrap the method in a tweepy.Cursor.
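If you prefer to work with the raw payload, each Status object exposes it as a dictionary through its _json attribute. The sketch below pulls the same two fields used in this tutorial out of such a dictionary. The helper name extract_fields is hypothetical, and the sample payload is a trimmed, made-up example following the v1.1 tweet layout. If you pass tweet_mode='extended' to the search, the text arrives in full_text instead of text, so the helper checks for that key first.

```python
from typing import Any, Dict


def extract_fields(tweet_json: Dict[str, Any]) -> Dict[str, str]:
    """Pull the user name and text out of a raw v1.1 tweet payload.

    Hypothetical helper; with Tweepy you would call extract_fields(tweet._json).
    """
    # Extended mode stores the untruncated text in "full_text"; compact mode uses "text".
    text = tweet_json.get("full_text", tweet_json.get("text", ""))
    return {
        "user_name": tweet_json["user"]["screen_name"],
        "text": text,
    }


# Trimmed, made-up payload following the v1.1 tweet layout.
sample = {
    "id": 1,
    "text": "Trying out the new iphone 14",
    "user": {"screen_name": "example_user"},
}
print(extract_fields(sample))  # {'user_name': 'example_user', 'text': 'Trying out the new iphone 14'}
```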

You need to pass the following to tweepy.Cursor to search tweets by keyword:

- the api.search_tweets method itself, without parentheses, so the Cursor can call it for each page of results
- the query parameter q, which contains your search query
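Conceptually, Cursor(...).items(n) keeps requesting pages of results and yields individual tweets until it has produced n of them or the results run out. The sketch below models that idea with plain lists standing in for API pages. It is an illustration, not Tweepy’s implementation: the real cursor pages through search results by tweet ID rather than by page number, and fake_fetch is a hypothetical stand-in for a search request.

```python
from itertools import islice
from typing import Callable, Iterator, List, Optional


def iter_items(fetch_page: Callable[[int], Optional[List[str]]]) -> Iterator[str]:
    """Yield items one page at a time until a page comes back empty or None."""
    page_number = 0
    while True:
        page = fetch_page(page_number)
        if not page:
            return  # No more results.
        yield from page
        page_number += 1


# A fake "API" with three pages of results; it records which pages were requested.
PAGES = [["t1", "t2", "t3"], ["t4", "t5", "t6"], ["t7"]]
requested: List[int] = []


def fake_fetch(page_number: int) -> Optional[List[str]]:
    requested.append(page_number)
    return PAGES[page_number] if page_number < len(PAGES) else None


# Like .items(5): stop after five items, fetching only the pages needed.
print(list(islice(iter_items(fake_fetch), 5)))  # ['t1', 't2', 't3', 't4', 't5']
print(requested)  # [0, 1]
```

Because the generator is lazy, the third page is never requested when five items are enough.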

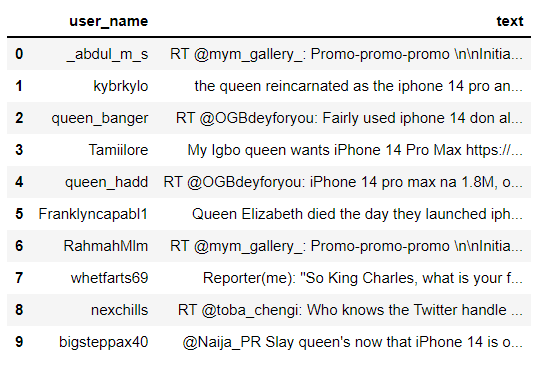

As an example, the following script returns 10 tweets containing the words “iphone” and “14”.

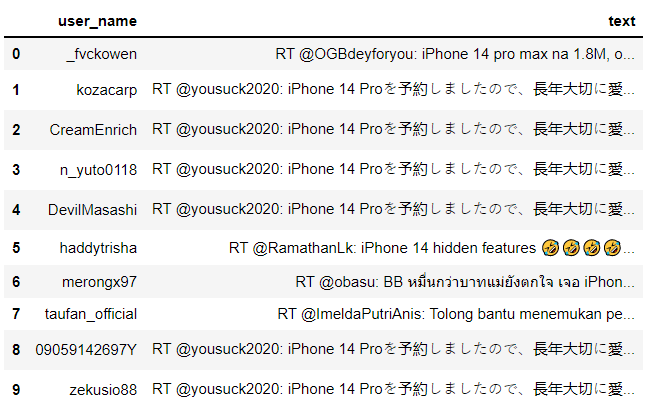

The tweet text and the user name of each tweet’s author are stored in a pandas DataFrame, which is displayed in the output.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='iphone 14').items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```

Output:

Scraping Tweets by Language

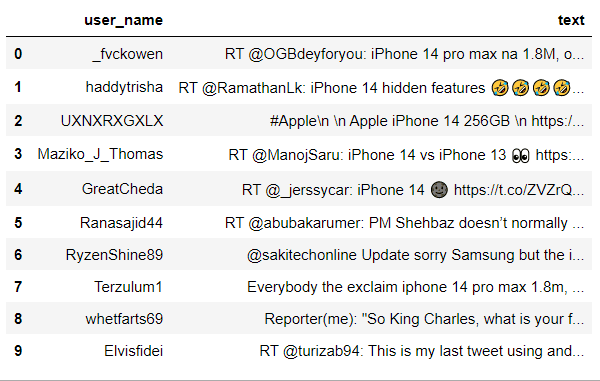

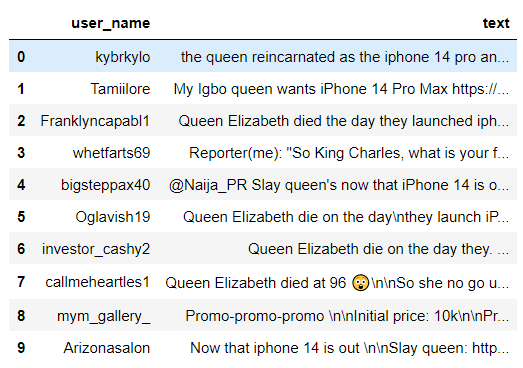

You can also specify the language of the tweets you want to scrape by passing an ISO 639-1 language code to the lang parameter. For instance, the following script returns only English-language tweets.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='iphone 14', lang='en').items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```

Output:

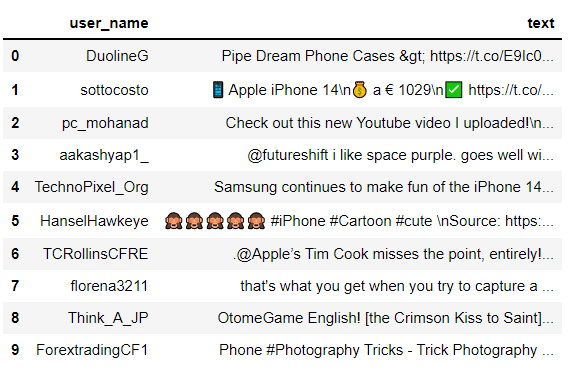
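The lang parameter filters on Twitter’s side. If you have already collected tweets, each v1.1 tweet payload (for example, tweet._json) also carries a machine-detected lang field, such as "en", or "und" when the language couldn’t be determined, so you can filter locally as well. A minimal sketch; the helper name filter_by_lang and the sample payloads are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def filter_by_lang(tweets: Iterable[Dict[str, Any]], lang: str) -> List[Dict[str, Any]]:
    """Keep only tweets whose detected language matches `lang` (e.g. "en")."""
    return [t for t in tweets if t.get("lang") == lang]


# Made-up payloads trimmed to the fields used here.
tweets = [
    {"text": "Loving the new phone", "lang": "en"},
    {"text": "Me encanta el nuevo telefono", "lang": "es"},
    {"text": "???", "lang": "und"},
]
print([t["text"] for t in filter_by_lang(tweets, "en")])  # ['Loving the new phone']
```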

Scraping Tweets by Multiple Keywords

You can scrape tweets containing more than one keyword, too. Separate the keywords with spaces to match tweets that contain all of them, and wrap a multi-word phrase in double quotes to match it exactly. For instance, the following script returns English tweets that contain the exact phrase “iphone 14” and the word “queen”.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='"iphone 14" queen', lang='en').items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```
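When a query combines several terms, assembling it programmatically keeps phrases quoted consistently. The sketch below is a hypothetical helper, not part of Tweepy: it wraps each phrase in double quotes, appends single keywords as they are, and can optionally add the -filter:retweets operator used later in this tutorial.

```python
from typing import Iterable, List


def build_query(phrases: Iterable[str] = (), words: Iterable[str] = (),
                exclude_retweets: bool = False) -> str:
    """Combine exact phrases and single keywords into one search query string.

    Space-separated terms must all match; quoted phrases must match exactly.
    """
    parts: List[str] = [f'"{phrase}"' for phrase in phrases]  # exact-phrase terms
    parts.extend(words)                                      # individual keywords
    if exclude_retweets:
        parts.append("-filter:retweets")
    return " ".join(parts)


query = build_query(phrases=["iphone 14"], words=["queen"])
print(query)  # "iphone 14" queen
```

The result can be passed straight to the cursor, for example tweepy.Cursor(api.search_tweets, q=query, lang='en').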


Scraping Original Tweets by Removing Retweets

You can also scrape only original tweets, excluding retweets. To do so, add the -filter:retweets operator to your search query, as shown in the following script.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='"iphone 14" queen -filter:retweets',
                           lang='en').items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```

Output:

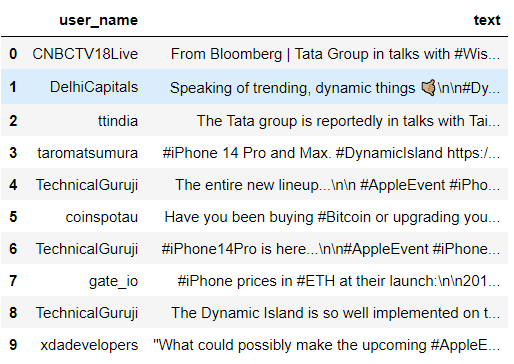
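The -filter:retweets operator excludes retweets on Twitter’s side. For tweets you have already downloaded, a v1.1 tweet payload that is a retweet contains a retweeted_status key holding the original tweet, so you can drop retweets locally. A minimal sketch; the helper names is_retweet and drop_retweets and the sample payloads are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def is_retweet(tweet_json: Dict[str, Any]) -> bool:
    """Return True if a raw v1.1 tweet payload is a retweet."""
    # Retweets embed the original tweet under "retweeted_status".
    return "retweeted_status" in tweet_json


def drop_retweets(tweets: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only original tweets."""
    return [t for t in tweets if not is_retweet(t)]


# Made-up payloads trimmed to the fields used here.
tweets = [
    {"id": 1, "text": "My review of the iphone 14"},
    {"id": 2, "text": "RT @someone: My review of the iphone 14",
     "retweeted_status": {"id": 1}},
]
print([t["id"] for t in drop_retweets(tweets)])  # [1]
```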

Scraping Tweets by Hashtags

To scrape tweets by hashtag, include the hashtag in the q parameter. For example, the following script returns original (non-retweet) tweets with the hashtag “#iphone”.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='"#iphone" -filter:retweets',
                           lang='en').items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```
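Each v1.1 tweet payload lists its hashtags under entities['hashtags'], as objects whose text field holds the tag without the leading #. Extracting them lets you, for instance, count which hashtags appear across the tweets you scraped for #iphone. A minimal sketch using collections.Counter; the helper names and the trimmed sample payloads are hypothetical.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List


def hashtags(tweet_json: Dict[str, Any]) -> List[str]:
    """Return a tweet's hashtags, lowercased and without the leading '#'."""
    entities = tweet_json.get("entities", {})
    return [tag["text"].lower() for tag in entities.get("hashtags", [])]


def count_hashtags(tweets: Iterable[Dict[str, Any]]) -> Counter:
    """Count hashtag occurrences across many tweets."""
    counts: Counter = Counter()
    for tweet in tweets:
        counts.update(hashtags(tweet))
    return counts


# Made-up payloads; real ones also include "indices" for each hashtag.
tweets = [
    {"text": "New #iPhone #apple",
     "entities": {"hashtags": [{"text": "iPhone"}, {"text": "apple"}]}},
    {"text": "#iphone camera test",
     "entities": {"hashtags": [{"text": "iphone"}]}},
]
print(count_hashtags(tweets).most_common(1))  # [('iphone', 2)]
```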

Scraping Recent/Mixed/Popular Tweets

You can scrape tweets that are popular, recent, or a mix of both by passing "popular", "recent", or "mixed" to the result_type parameter; "mixed" is the default. For instance, the following script returns popular tweets that contain the hashtag “#iphone”.

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='"#iphone" -filter:retweets',
                           lang='en', result_type="popular").items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```

Output:

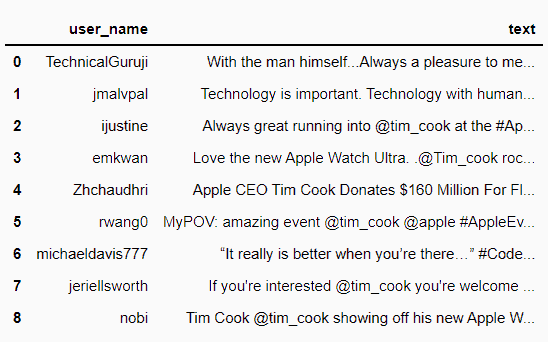
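Because result_type accepts only "mixed", "recent", or "popular", a misspelled value is easy to overlook. The sketch below uses a hypothetical helper, search_kwargs, that is not part of Tweepy: it checks the value and assembles the keyword arguments in one place before they are passed to tweepy.Cursor.

```python
from typing import Any, Dict, Optional

VALID_RESULT_TYPES = {"mixed", "recent", "popular"}


def search_kwargs(q: str, lang: Optional[str] = None,
                  result_type: str = "mixed") -> Dict[str, Any]:
    """Build keyword arguments for api.search_tweets, validating result_type first."""
    if result_type not in VALID_RESULT_TYPES:
        raise ValueError(
            f"result_type must be one of {sorted(VALID_RESULT_TYPES)}, got {result_type!r}"
        )
    kwargs: Dict[str, Any] = {"q": q, "result_type": result_type}
    if lang is not None:
        kwargs["lang"] = lang
    return kwargs


print(search_kwargs('"#iphone" -filter:retweets', lang="en", result_type="popular"))
```

The dictionary can then be unpacked into the cursor, as in tweepy.Cursor(api.search_tweets, **search_kwargs(query, lang='en', result_type='popular')).items(10).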

Filtering Tweets that Mention a Username

You can retrieve tweets that mention a specific user by adding “@username” to your search query. As an example, the following script returns tweets that mention @tim_cook (the CEO of Apple Inc.).

```python
import pandas as pd

dataset = []

for tweet in tweepy.Cursor(api.search_tweets, q='@tim_cook -filter:retweets',
                           lang='en', result_type="popular").items(10):
    tweet_data = {
        'user_name': tweet.user.screen_name,
        'text': tweet.text,
    }
    dataset.append(tweet_data)

df = pd.DataFrame(dataset)
df
```
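Each v1.1 tweet payload lists the accounts it mentions under entities['user_mentions'], each with a screen_name field, so you can check locally which accounts a scraped tweet mentions. Twitter screen names are case-insensitive, so the sketch compares them in lowercase. The helper names and the trimmed sample payload are hypothetical.

```python
from typing import Any, Dict, List


def mentioned_users(tweet_json: Dict[str, Any]) -> List[str]:
    """Return the screen names mentioned in a raw v1.1 tweet payload, lowercased."""
    mentions = tweet_json.get("entities", {}).get("user_mentions", [])
    return [m["screen_name"].lower() for m in mentions]


def mentions_user(tweet_json: Dict[str, Any], screen_name: str) -> bool:
    """Check whether a tweet mentions `screen_name`, with or without a leading '@'."""
    return screen_name.lstrip("@").lower() in mentioned_users(tweet_json)


# Made-up payload trimmed to the fields used here.
tweet = {
    "text": "Great keynote, @Tim_Cook!",
    "entities": {"user_mentions": [{"screen_name": "tim_cook"}]},
}
print(mentions_user(tweet, "@tim_cook"))  # True
```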

The official API documentation describes several other search parameters you can use to refine your search criteria. The Twitter API also lets you post tweets and images and retweet other users’ tweets, which is what we did when we designed and launched Cohoist Autopilot for full Twitter automation.
