In the first part of our CSV tutorial series we learned all about the CSV data format and its many dialects. The second part taught us about character sets and encoding forms in general, then we learned how to read CSV data using the Python csv module facilities. Now it’s time to see which features the csv module provides for writing CSV data. Relying on our skills on reading and writing CSV data, we will tackle a slightly more complex example about handling data from an SQLite cursor. The sqlite3 module will come back in part four, where we will deal with adapters and converters. Finally, we will look under the hood of the csv module, and we will discuss the dialect detection and header detection’s algorithms.

- Writing CSV Data

- Writing Data from an SQLite Database to a CSV File

- Looking Under the Hood of the CPython csv Module

- Closing Thoughts

- References

Writing CSV Data

To write to a CSV file using Python, you must first import the csv module, by adding a line like this at the top of your Python code:

import csvWhen a library writes CSV data, it should perform the following operations:

- Adapting: (optionally) It can convert each field in a record into a string of characters; e.g. one field may be an

intobject, which is converted to astrobject. - Preparing a record: Then it must build a CSV record from a list of fields by adding the field delimiters and the line delimiter.

- Encoding: It must turn a sequence of characters from some charset into a sequence of bytes. Some character sets, like Unicode, provide more than one encoding method.

- Fetching: Finally, it fetches the encoded data, usually on a per-record basis.

In this section we’ll see how the Python csv library writes CSV data.

The writer() Factory Function

The csv library provides the following function:

csv.writer(destination, dialect='excel', **fmtparams)where:

- destination is a file-like object providing at least a

write()method. If it is a file, then it should be opened withnewline='' so that no translation takes place when writing a\ncharacter. - dialect is an optional parameter that can be either the name of a registered dialect, or a dialect class.

- fmtparams is an optional sequence of keyword parameters in the form param=value, where param is one of the formatting parameters and value is its value. If both the dialect and some formatting parameters are passed, the formatting parameters overwrite the respective attributes of the dialect.

The csv writer function returns (1) an object with a writerow(record) method, which writes a single row to the writerows(iterable), which writes an iterable of records to the

def replace_field_delimiter():

""" Replace the ',' field delimiter with '\\t'. """

with open('sample.csv',

encoding='utf-8',

newline='') as src, open(

'sample-tab.csv', 'wt',

encoding='utf-8', newline='') as dst:

writer = csv.writer(dst, delimiter='\t')

for record in csv.reader(src, dialect='excel'):

writer.writerow(record)The replace_field_delimiter() function reads from sample.csv, which uses , as a delimiter, then it writes its contents to sample-tab.csv, replacing , with the \t tab-delimiter notation. First a writer object is created, then this object is used to place a record in the destination for each record fetched from the source.

Note: All supporting files for this tutorial can be downloaded from this ZIP file.

class WritableList(list):

""" List with a `write()` method. """

def write(self, record):

""" Write a record to the list. """

self.append(record)

def writing_to_list():

""" Writing CSV data to a list. """

records = WritableList()

writer = csv.writer(records, lineterminator='\n')

with open('sample.csv', encoding='utf-8', newline='') as src:

for record in csv.reader(src):

writer.writerow(record)

for r in records:

print(r)Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

The WritableList class has been derived from the built-in list type, adding a write() method. That’s enough for the object returned by the csv.writer() function to use it as a destination for its writing operations. In other words, instead of writing to a file, we write to an object in memory. Here is the sample output of our

... Beyond The Black,Jennifer Haben,1995-07-16,DEU,Lost in Forever,2016 Arven,Carina Hanselmann,1986-04-16,DEU,Music of Light,2011 Sleeping Romance,Federica Lanna,1986-04-26,ITA,Alba,2017 Ex Libris,Dianne van Giersbergen,1985-06-03,NLD,Medea,2014 ...

The DictWriter() Class

An alternative to the csv.writer() function for writing CSV data is the csv.DictReader class, which accepts dictionaries as records and maps them into output rows. This class has the writerow() and writerows() methods, just like the object returned by the csv.writer() function. Its __init__() method has the following parameters:

- destination: a file-like object, which provides at least a

write()method. - fieldnames: the list of field names of the CSV data. All the records written using the

writerow()method will sort all fields using this list of names, and all records will have exactly the same number of fields as this list. - restval: (optional) value to be written if a field is missing; it defaults to an empty string.

- extrasaction (optional) if set to

'raise', a writing operation will raise aValueErrorif there are more values in the record dictionary than fields in the list of names; if set to'ignore', extra values are simply ignored. It defaults to'raise'. - all other parameters are passed to the underlying writer object, which we described in the Writer section.

The DictWriter and DictReader classes go hand-in-hand, as we can see from the following example:

def writing_dictreader():

""" Reading with `DictReader` and writing with `DictWriter`. """

with open('sample.csv', 'rt',

encoding='utf-8',

newline='') as src, open(

'sample-ac.csv', 'wt',

encoding='utf-8', newline='') as dst:

# Select just the `Artist` and `Country` fields

writer = csv.DictWriter(dst, [ "Artist", "Country" ],

extrasaction='ignore',

lineterminator='\n')

# Write the selected fields to the destination

for artist in csv.DictReader(src, dialect='excel'):

writer.writerow(artist)The writing_dictwriter() function creates a writer object, which will select just the Artist and Country fields from our data source. Pay attention to the 'ignore', the writerow() method will raise an exception because we selected just two fields, while the DictReader object as we have seen above, we’re able to write just 2 fields to the sample-ac.csv file. Here is an excerpt of our CSV output results:

Simone Simons,NLD "Päivi ""Capri"" Virkkunen",FIN Jennifer Haben,DEU Carina Hanselmann,DEU Federica Lanna,ITA Dianne van Giersbergen,NLD Clementine Delauney,FRA ...

Writing Data from an SQLite Database to a CSV File

Let’s assume you have a SQL database you’ve connected to using a SQLite connection. Now that you know to write CSV data using Python, we’ll show you how to read data from a database and save the results to a CSV file. To connect to your database, you’ll need to import the sqlite3 module, and to write to your CSV file, you’ll need to import the csv module.

In this example, we have a database with all the artists from our sample.csv file, which we’ll call

import sqlite3

import csv

def write_to_csv_file():

""" Write all album released after 2014 to a CSV file. """

with sqlite3.connect('sample.db3') as connection, open(

'albums.csv', 'w', encoding='utf-8', newline='') as dump:

cursor = connection.cursor()

writer = csv.writer(dump)

query = "SELECT band, album, year FROM symphonic WHERE year>2014;"

for record in cursor.execute(query).fetchall():

writer.writerow(record)Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

This Python code should be easy to follow. The only thing worth mentioning is the SELECT statement. 2014. The output of the albums.csv file will be:

Amberian Dawn,Innuendo,2015 Beyond The Black,Lost in Forever,2016 Sleeping Romance,Alba,2017 Visions Of Atlantis,The Deep & The Dark,2018 Nightwish,Endless Forms Most Beautiful,2015 Dark Sarah,Behind the Black Veil,2015 Midnattsol,The Aftermath,2018 Elyose,Ipso Facto,2015

Looking Under the Hood of the CPython csv Module

As of Python 3.8, the csv module is implemented by an underlying C module, called _csv, and a higher level Python module. The Python module defines the DictWriter, DictReader and Sniffer classes, while the C module defines the rest of the interface. Let’s take a look at some behavior of the csv module. This behavior may depend on the official interpreter, which we’ll refer to as CPython in this tutorial.

An Introduction to Flow Charts

Flow charts are a way of representing algorithms in a language-independent fashion. We will use them to describe the two algorithms above. Our flow charts do not fully comply to the UML specification (see the Activities chapter), but they are easy to follow if you keep in mind that:

- The solid circle marks the start of the algorithm (start node).

- The solid circle within a hollow circle marks the end of the algorithm (final node).

- Lines connect the various parts (nodes) of the algorithm.

- Arrows indicate which way a line should be traversed. There can be only one arrow for each line.

- Rectangles contain one or more statements to be executed in sequence.

- Rounded rectangles contain a test condition. The next step of the algorithm will be chosen according to the result of the test. There is a line exiting from this element for each possible result of the test.

- Diamond-shaped symbols indicate that two or more lines are merged into one line.

- The flow chart must be read from the start node to the final node, following the lines in the direction pointed by their arrows.

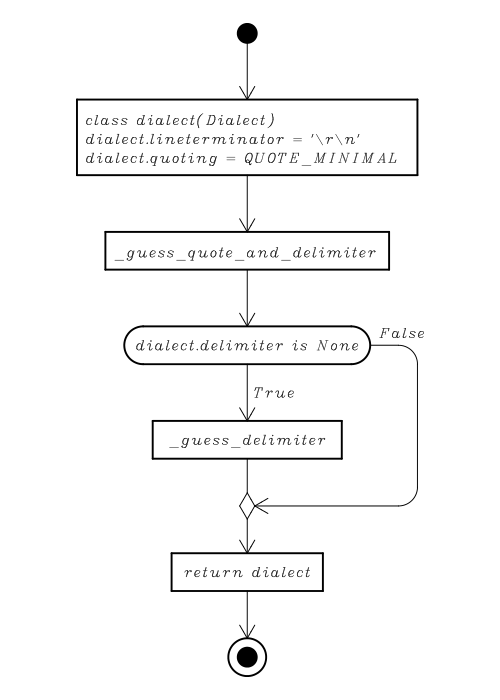

The Dialect Detection's Algorithm

As we have already seen in part one of this tutorial, the aim of the dialect detection’s algorithm is to build a csv.Dialect class describing the CSV dialect of a file by reading just a sample from it. Now we will describe the algorithm thoroughly. Let’s have a look at its flow chart:

So, what’s going on here?

- A new class, derived from

csv.Dialect, is defined. Thelineterminator andquoting attributes are set regardless of the rest of the algorithm. The algorithm will set thedoublequote ,delimiter ,quotechar andskipinitialspace attributes of the dialect. - The

_guess_quote_and_delimiter()function is called. This algorithm uses the regular expression support, provided by theremodule, to analyze each row of the sample. It tries to break down a row using the"or the'quote character, then looks to the first non-space character at the right of the field, which should be the field delimiter. For each row in the sample, it keeps track of the character used as the quote character and that used as a delimiter by incrementing counters. Using these counters, the algorithm will choose thequotechar and thedelimiter attributes. From our description of the algorithm we can see that this approach won’t work if the fields in the sample aren’t quote delimited; e.g. oursample.csvfile and many spreadsheets dealing with numerical data. - If the

_guess_quote_and_delimiter()fails to detect thedelimiter , the_guess_delimiter()function is called. This function takes a completely different approach, based on frequency tables. Instead of trying to break a line using a delimiter, it builds a frequency table for each character of the line. Then it builds a table of frequency based on the line table of frequency (named meta-frequency); e.g. the character c occurred n times in m rows. Using this table, the algorithm can determine the expected frequency of the delimiter character. Finally, it tries to find the character in the table that best meets this frequency, and sets it as thedelimiter . - Finally, the

dialectclass is returned.

Those are the fundamentals of how the Python tries to detect the dialect of a CSV file.

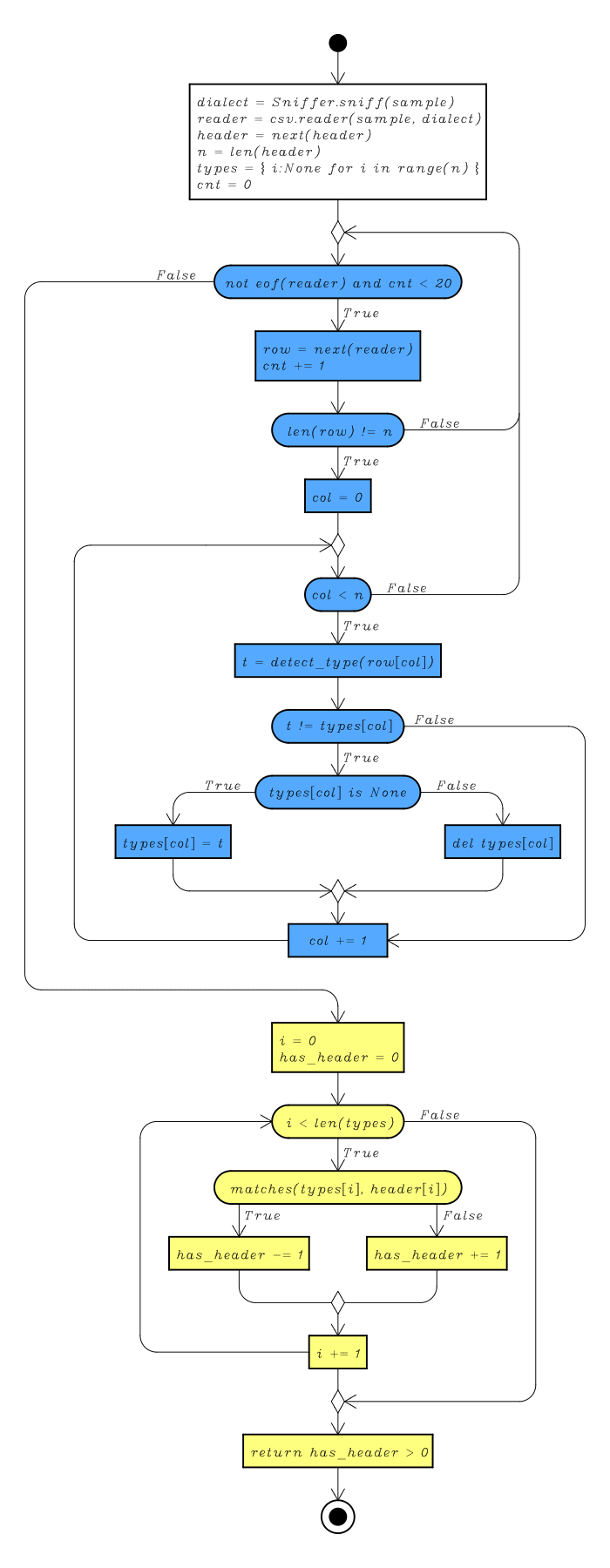

The Headers Detection's Algorithm

As we have already seen in part one of this tutorial, the aim of the header detection’s algorithm is to determine whether a CSV file has a header or not by reading a short data sample. This algorithm uses the csv.reader() function to iterate over the records of the sample, and the Sniffer.sniff() method to determine the dialect of the sample. The sample need not be the entire file. The algorithm may raise an exception if the sample is not an iterable object (csv.Error), or if it is empty (StopIteration). The algorithm goes as follows:

- It assumes that the first record is the header, .

- Then it iterates over the remaining records, up to 20 records. All records that have a different number of fields than the header are discarded.

- It determines the data type of each field in the record, choosing between

int,float,complex, and an integer representing the length of the field, which is the fallback value for all fields. If two values of the same column have a different type, then the whole column is discarded. - The last phase of the algorithm (called voting) iterates over the column types: if a column has the same type as the respective value of the header, then a counter is decremented, otherwise is incremented.

- The first row of a CSV file is a header if the counter is greater than 0.

The rationale behind this algorithm can be summed up as follows:

- If all cells of a column have the same type, and this type is different from the type of the respective cell in the first row, then the first row is likely a header.

- If all cells of a column have a string type and if all strings are the same length except for the one on the first row, then the first row is likely a header. Many database systems and spreadsheets allows the user to declare fixed length strings, e.g. the

CHAR(n)type of the SQL language, wheren is the length of the string.

Now take a look at the flow chart of the header detection algorithm. The nodes with a light blue background represent the type detection part of the algorithm; the nodes with a light yellow background represent the voting phase of the algorithm.

The flow chart should be pretty much self-explanatory, but it is worth noting that:

eof(reader)isn’t a proper Python function; it just serves as a sentinel for the loop iterating over the rows of the sample.detect_type()hides the implementation details of the type detection algorithm. The only thing we need to know is that it will returnint,float,complexor an integer.matches()compares the type of all cells of a column to the type of the first cell of the same column. We just need to know that it returns aboolvalue.

A Fistful of Exceptions

In part two of this tutorial we listed some exceptions raised by the csv module, mostly documented by the official reference. In this section we list all exception raised either by the underlying _csv.c module or by the csv.py module. This list has been built from the Python 3.8 source code, so it might not hold for all implementations and versions of the csv module.

| Source | Type | Raised By | Error Message | Cause |

|---|---|---|---|---|

| py | csv.Error | Dialect.__init__() | Various | Exceptions while initializing a Dialect class. See the exceptions raised by the dialect_new() function. |

| py | ValueError | DictWriter.__init__() | extrasaction must be 'raise' or 'ignore' | Invalid value for the |

| py | ValueError | DictWriter.writerow() | dict contains fields not in fieldnames | If csv.writer() function. |

| py | csv.Error | Sniffer.sniff() | Could not determine the delimiter. | Each time the dialect detection's algorithm fails. |

| py | StopIteration | Sniffer.has_header() | No message | Passing an empty sample to the Sniffer.has_header() method. |

| c | csv.Error | get_dialect_from_registry() | unknown dialect | Trying to get a dialect which hasn't been registered yet. |

| c | csv.Error | dialect_check_quoting() | bad quoting value | The Dialect class have an invalid value. |

| c | csv.Error | dialect_new() | quotechar must be set if quoting enabled. | When |

| c | csv.Error | dialect_new() | lineterminator must be set | The Dialect class is missing the line terminator. |

| c | csv.Error | dialect_new() | delimiter must be a 1-character string | |

| c | csv.Error | dialect_new() | escapechar must be a 1-character string | |

| c | csv.Error | dialect_new() | lineterminator must be a string. | |

| c | csv.Error | dialect_new() | quotechar must be a 1-character string. | |

| c | csv.Error | dialect_new() | quoting must be an integer. | |

| c | csv.Error | parser_add_char() | field larger than the field limit | When passing a field that exceeds the field limit (maximum length). |

| c | csv.Error | parse_process_char() | delimiter expected after quotechar | When a record ends before the matching quote character is encountered. |

| c | csv.Error | parse_process_char() | new-line character seen in unquoted field - do you need to open the file in universal-newline mode? | When opening a file without using the |

| c | csv.Error | Reader_iternext() | unexpected end of data | If in |

| c | csv.Error | Reader_iternext() | iterator should return strings (did you open the file in text mode)? | When there is at least one character in a sequence which is not a valid Unicode character. |

| c | csv.Error | Reader_iternext() | line contains NULL byte | When a field contains the U+0000 (NUL) character. |

| c | csv.Error | csv_reader() | argument 1 must be an iterator | When passing a non-iterable object as first parameter to the csv.reader() function. |

| c | csv.Error | csv_writer() | need to escape, but no escapechar set. | When a field needs to be escaped, but the |

| c | csv.Error | csv_writer() | argument 1 must have a 'write()' method | The first parameter of the csv.writer() function must be a file-like object. |

| c | csv.Error | csv_writerow() | iterable expected. | When passing anything other than an iterable object as the first parameter to the csv.writerow() function. |

| c | csv.Error | csv_writerow() | single empty field record must be quoted. | When |

| c | csv.Error | csv_writerows() | writerows() argument must be iterable. | When passing anything other than an iterable to the csv.writerows() function. |

| c | csv.Error | csv_register_dialect() | dialect name must be a string. | Calling csv.register_dialect(n, ...), where |

| c | csv.Error | csv_unregister_dialect() | unknown dialect | When the name of the dictionary passed to the csv.unregister_dialect() hasn't been registered yet. |

| c | csv.Error | csv_field_size_limit() | limit must be an integer | When trying to set the maximum size of a field using an object other than int. |

Closing Thoughts

In this part of our CSV tutorial series we learned how to write data to a CSV file using a certain dialect, but we didn’t stop there. We demonstrated how to read data from a database connection using the Python sqlite3 module and dump the data in a CSV format. Finally, we described the dialect detection and header detection algorithms.

Did you find this free tutorial helpful? Share this article with your friends, classmates, and coworkers on Facebook and Twitter! When you spread the word on social media, you’re helping us grow so we can continue to provide free tutorials like this one for years to come.

Stay tuned for the fourth (and final) part of our CSV tutorial series, where we’ll discuss adapting and converting CSV data.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

References

This section features some normative references for the CSV reading API and for the sqlite3 module.

- Kevin Altis, Dave Cole, Andrew McNamara, Skip Montano, Cliff Wells, CSV File API, PEP 0305:2003

- csv - CSV File Reading and Writing in Guido van Rossum et al., The Python Library Reference, v. 3.8.0