On September 19, 2024, Alibaba released the Qwen 2.5 series of models, which outperformed other state-of-the-art open-source large language models (LLMs) on several benchmarks.

In this article, you will see how to develop a retrieval augmented generation (RAG) chatbot in LangChain. The chatbot will be capable of answering questions related to YouTube videos.

We will import the Qwen 2.5-7B model from Hugging Face to develop our chatbot. You can also use the Qwen 2.5-72B model if you have more powerful GPUs; the process stays the same, and only the model name changes.
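To get a rough sense of what "more powerful GPUs" means, the sketch below estimates the memory needed just to hold the model weights. It is a back-of-the-envelope calculation under stated assumptions: the nominal parameter counts (7 billion and 72 billion), 16-bit weights (2 bytes per parameter), and the helper name `weight_memory_gb` are all illustrative, and activations and the generation cache add to these figures.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts (assumption: rounded from the model names)
for name, params in [("7B", 7e9), ("72B", 72e9)]:
    print(f"Qwen 2.5-{name}: ~{weight_memory_gb(params):.0f} GB for weights alone")
```

Under these assumptions, the 72B model needs roughly ten times the weight memory of the 7B model, which in practice means several large GPUs or a quantized checkpoint.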

So, let’s begin without further ado.

Note: The scripts in this article are run using Google Colab.

Installing and Importing Required Libraries

You must install the following libraries to run the scripts in this article.

!pip install -q -U transformers
!pip install -q -U sentence-transformers
!pip install -q -U faiss-cpu
!pip install -q -U langchain
!pip install langchain-community
!pip install -U langchain-huggingface
!pip install -q -U youtube-transcript-api
!pip install -q -U pytube

The following script imports the required libraries.

from transformers import AutoModelForCausalLM, AutoTokenizer, logging, pipeline
# HuggingFaceEmbeddings and FAISS are imported from their current packages
# rather than the deprecated langchain.embeddings / langchain.vectorstores paths
from langchain_huggingface import HuggingFacePipeline, HuggingFaceEmbeddings
from langchain_core.prompts import PromptTemplate
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_community.document_loaders import YoutubeLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.vectorstores import FAISS
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_community.chat_message_histories import ChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_core.prompts import MessagesPlaceholder
from langchain.chains import create_history_aware_retriever
import warnings

# Silence all but critical messages from the transformers library
logging.set_verbosity(logging.CRITICAL)

Importing Qwen 2.5 LLM from Hugging Face in LangChain

Before we develop a YouTube question-answering chatbot, let’s first see how to generate text using the Qwen 2.5-7B model in LangChain.

We will first import the Qwen 2.5-7B Instruct model and the corresponding tokenizer from Hugging Face.

model_name = "Qwen/Qwen2.5-7B-Instruct"

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

Next, we will create a Hugging Face text-generation pipeline for the Qwen 2.5-7B model we just loaded.

We will convert the pipeline to a LangChain LLM by passing the pipeline object to the HuggingFacePipeline class.

hf_pipe = pipeline("text-generation",
                   model=model,
                   tokenizer=tokenizer,
                   max_new_tokens=1048)

llm = HuggingFacePipeline(pipeline=hf_pipe)

Now, you can use the Qwen 2.5-7B model like any other LLM in LangChain. Let’s see an example. In the script below, we create a LangChain chain using the Qwen 2.5-7B model to answer Python-related questions.

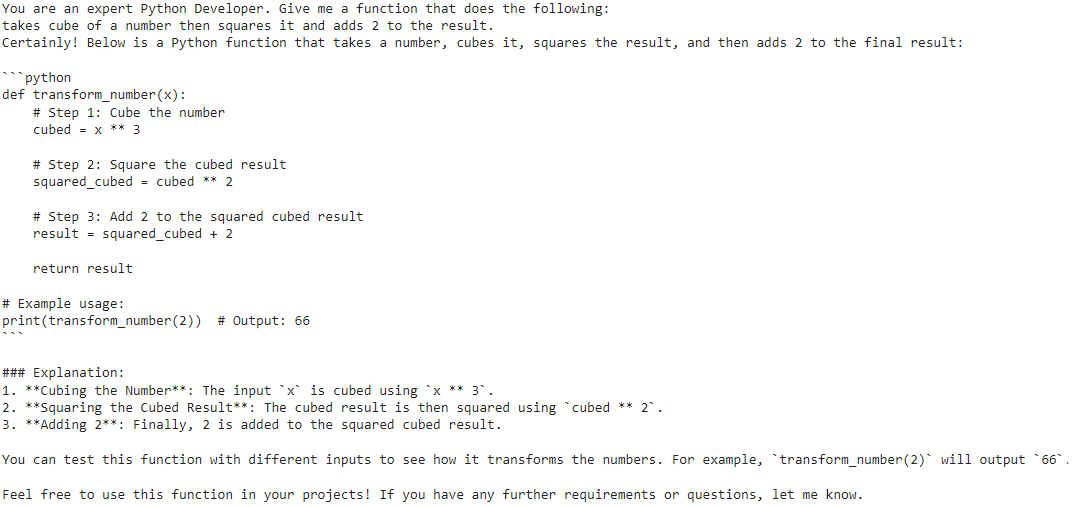

We ask the LLM to generate a function that cubes a number, squares the result, and finally adds 2 to it.

template = """You are an expert Python Developer. Give me a function that does the following:
{function_description}"""

prompt = PromptTemplate.from_template(template)
chain = prompt | llm | StrOutputParser()

description = "takes cube of a number then squares it and adds 2 to the result"
print(chain.invoke({"function_description": description}))

Output:

The above output shows that the Qwen 2.5-7B model gave us a function along with its explanation.

Let’s test the above function.

def transform_number(x):
    # Step 1: Cube the number
    cubed = x ** 3
    # Step 2: Square the cubed result
    squared_cubed = cubed ** 2
    # Step 3: Add 2 to the squared cubed result
    result = squared_cubed + 2
    return result

# Example usage:
print(transform_number(2))  # Output: 66

Output:

66

The output shows that the function works as expected for this input.
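A single input is thin evidence, so as a further sanity check the sketch below compares the function against the closed form it should equal: cubing and then squaring a number is the same as raising it to the sixth power. The function is restated in one line only to keep the snippet self-contained; the set of test inputs is an arbitrary choice.

```python
def transform_number(x):
    # Same logic as the generated function above, condensed: (x^3)^2 + 2
    return (x ** 3) ** 2 + 2


# Cubing then squaring equals raising to the sixth power
test_inputs = [-3, -1, 0, 1, 2, 10]
all_match = all(transform_number(x) == x ** 6 + 2 for x in test_inputs)
print("All checks passed" if all_match else "Mismatch found")
```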

Let’s now see how to develop a RAG chatbot with the Qwen 2.5-7B model in LangChain.

Retrieval Augmented Generation with Qwen 2.5 in LangChain

In this section, you will see how to develop a chatbot that answers your questions about a YouTube video.

Generating Embeddings from YouTube Videos

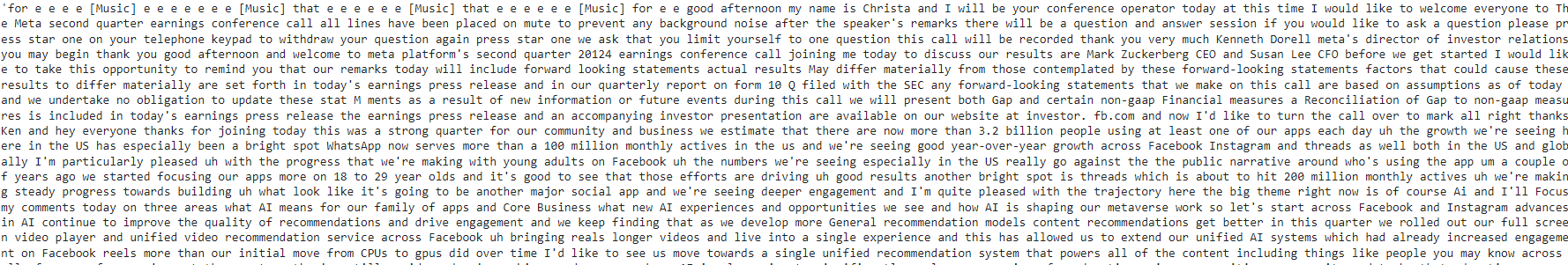

We will import the text of Meta’s earnings call for the second quarter of 2024. As the following script shows, you can use LangChain’s YoutubeLoader to load the content of a YouTube video.

url = "https://www.youtube.com/watch?v=uY3E-7jU8Xo"
doc = YoutubeLoader.from_youtube_url(url).load()
doc[0].page_content

Output:

Next, we will split the content of the YouTube video into smaller chunks. Smaller chunks tend to work better for semantic similarity search in a vector database because each embedding then represents a narrower, more focused piece of text.
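To make chunk size and chunk overlap concrete, here is a minimal pure-Python sketch of fixed-size chunking with overlap. It is a simplification, not LangChain's algorithm: the helper name `chunk_text` is hypothetical, and it cuts purely by character position.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into windows of chunk_size characters that share
    chunk_overlap characters with the previous window."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
chunks = chunk_text(sample, chunk_size=10, chunk_overlap=4)
print(chunks)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk. LangChain's RecursiveCharacterTextSplitter applies the same size and overlap idea but prefers to break on natural separators such as paragraph and line breaks.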

# Split into chunks of up to 1,000 characters with a 200-character overlap.
# (The splitter is created once; re-creating it with no arguments would
# silently discard these settings.)
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=200,
    length_function=len,
)

documents = text_splitter.split_documents(doc)
print(f"Number of documents: {len(documents)}")
print(f"Last Document: {documents[-1].page_content}")

Output:

Finally, we will convert the text chunks into vector embeddings, store them in a FAISS vector store, and expose that store as a retriever.
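As a rough picture of what a vector store does at query time, the sketch below ranks stored vectors by their distance to a query vector and returns the nearest texts. Everything in it is illustrative: the three-dimensional vectors are made up (a real embedding model produces much larger ones), the helper names are hypothetical, and the choice of Euclidean (L2) distance is an assumption about the default behavior rather than something the article states.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query_vec, store, k=2):
    """store is a list of (text, vector) pairs; return the k nearest texts."""
    ranked = sorted(store, key=lambda item: l2_distance(query_vec, item[1]))
    return [text for text, _ in ranked[:k]]


# Toy "embeddings" invented for illustration
store = [
    ("revenue outlook", [0.9, 0.1, 0.0]),
    ("headcount", [0.1, 0.9, 0.0]),
    ("capital expenditure", [0.7, 0.2, 0.1]),
]
query = [1.0, 0.0, 0.0]
nearest = top_k(query, store, k=2)
print(nearest)
```

FAISS performs this kind of nearest-neighbor lookup efficiently over the real embeddings, so the brute-force sort here is only for intuition.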

model_path = "thenlper/gte-large"

embeddings = HuggingFaceEmbeddings(
    model_name=model_path
)

embedding_vectors = FAISS.from_documents(documents, embeddings)
retriever = embedding_vectors.as_retriever()

Creating Chatbot Response Based on Documents

Now, we are ready to create our chatbot. To do so, we need to create two chains: a stuff documents chain and a retrieval chain.

The stuff documents chain inserts ("stuffs") the retrieved video content into the prompt’s {context} placeholder and passes the completed prompt to the LLM. The retrieval chain uses the vector retriever to find, via vector similarity search, the chunks of the video transcript most relevant to the user’s question, and then hands them to the stuff documents chain.
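The "stuffing" step can be pictured with plain string formatting. The sketch below is not LangChain's implementation: the helper name `stuff_documents`, the shortened template, and the sample chunks are hypothetical, and joining chunks with a blank line is an assumption about how the retrieved texts are combined.

```python
def stuff_documents(system_template: str, chunks: list[str], separator: str = "\n\n") -> str:
    """Join the retrieved chunks and substitute them for {context}."""
    context = separator.join(chunks)
    return system_template.format(context=context)


# Shortened stand-in for a system prompt with a {context} placeholder
system_template = "Use the following context to answer the question.\n\n{context}"

# Made-up chunks standing in for what a retriever would return
retrieved_chunks = ["Chunk A about revenue.", "Chunk B about expenses."]

prompt_text = stuff_documents(system_template, retrieved_chunks)
print(prompt_text)
```

Because every retrieved chunk lands in one prompt, the number and size of chunks must fit within the model's context window.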

# source: https://python.langchain.com/docs/tutorials/rag/
# Each string ends with a space so the concatenated sentences do not run together
system_prompt = (
    "You are an assistant for question-answering tasks. "
    "Use the following pieces of retrieved context to answer "
    "the question. If you don't know the answer, say that you "
    "don't know. You don't add any additional information in the response and just provide concise answer. "
    "Answer in three sentences at the maximum."
    "\n\n"
    "{context}"
)

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        ("human", "{input}"),
    ]
)

question_answer_chain = create_stuff_documents_chain(llm, prompt)
rag_chain = create_retrieval_chain(retriever, question_answer_chain)

Once we create the retrieval chain, we can ask questions about the YouTube video, as shown in the following script.

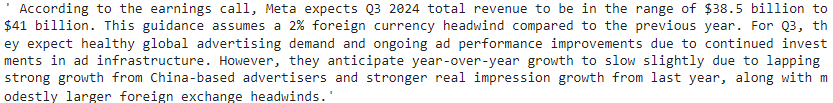

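# Named `question` to avoid shadowing Python's built-in input()
question = "What are Meta revenue goals and expected outlook for Q3?"
response = rag_chain.invoke({"input": question})
# Keep only the text that follows the question in the returned answer
response["answer"].split(question)[1]

Output: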

The above output shows that the Qwen 2.5-7B model correctly answers our query. You can verify this by searching the video’s contents directly.
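The script above keeps only the text that follows the question, which suggests the returned answer string echoes the prompt before the generated text. Indexing the result of `split` raises an IndexError if the question is not found, so a slightly more defensive variant can be sketched as follows. The helper name `extract_answer` and the sample strings are hypothetical.

```python
def extract_answer(full_text: str, question: str) -> str:
    """Return the text after the last occurrence of the question.

    If the question does not appear, the whole text is returned
    (stripped of surrounding whitespace), so the call never raises.
    """
    _, _, tail = full_text.rpartition(question)
    return tail.strip()


# Made-up output that echoes the prompt before the answer
echoed = "System: Use the context.\nHuman: What is the outlook?\nRevenue is expected to grow."
print(extract_answer(echoed, "What is the outlook?"))

# Output that contains only the answer is passed through unchanged
print(extract_answer("Revenue is expected to grow.", "What is the outlook?"))
```

Both calls print the same sentence, so the helper behaves the same whether or not the prompt is echoed.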

Let’s ask another question.

question = "What are the major risks for Q3?"
response = rag_chain.invoke({"input": question})
# Keep only the text that follows the question in the returned answer
response["answer"].split(question)[1]

Output:

Again, the model returns the correct output.

Conclusion

The Qwen 2.5 series of models is among the strongest open-source LLMs currently available. In this article, you saw how to create a RAG chatbot using Qwen 2.5-7B and LangChain, and how it answered questions about a YouTube video. I encourage you to develop your own RAG chatbot using Qwen 2.5 and LangChain and share your feedback.