This tutorial will introduce you to the Python SQLite module. To start, we’ll describe all five of the SQLite native data types. While mapping SQLite types to Python types is straightforward, the conversion from ISO SQL types is rather contrived.

Once we get past the typing system, we can dive into the module’s API. There, we will find everything we need to manage our databases using Python: creating a connection, executing SQL statement, querying cursors, error handling, and committing transactions.

In this tutorial, you’ll learn that the way SQLite defines table schemas and enforces constraints is rather peculiar. Moreover, SQLite allows us to define virtual tables, which extend all SQL commands over objects that are not regular tables or views, e.g. external files or sequences of numbers.

Here are the topics we’ll cover in this Python sqlite3 tutorial. If you want a quick primer instead of a detailed overview, just click a topic and start reading.

- SQLite Data Types

- sqlite3 Basics

- Closing Thoughts

- References

SQLite Data Types

Before diving into the Python sqlite3 API, we will describe the set of native data types in SQLite, since it differs greatly from the ISO SQL Standard. There are five sqlite3 data types (called storage classes):

- NULL

- Integer

- Real

- Text

- BLOB

This tutorial is going to start off pretty technical, but stick with us we’ll make sense of it all with some great examples.

SQLite Native Data Types

Unlike other implementations of the SQL language, SQLite stores the data type on a per-value basis, i.e. each value of a table has its own data type, regardless of the column type declared in the CREATE TABLE statement. Such a technique is called manifest typing. For example, an UPDATE statement may change both the value and the type of a field.

The NULL Type

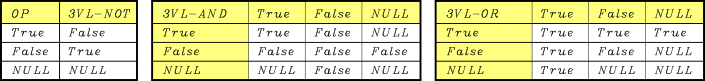

NULL is a data type that doesn’t hold any value. It is represented by the keyword NULL. Since SQLite allows NULL values in logical expression, it implements a three-valued logic, using the following tables:

Since SQLite does not provide a boolean type, there aren’t literal values for booleans, so

The Text Type and its Encoding Forms

The TEXT type holds sequences of Unicode characters (or strings). All characters in a text string are enclosed between ' (single quote character). A single quote within a string can be escaped with ''. There is no other form of character escape. In other words, there’s nothing similar to the C-like escape sequences. The encoding form, which is the way a sequence of characters is represented in binary form, of all the strings is set upon creation of the database and can only be one of the following:

- UTF-8, a variable-length encoding form that represents all Unicode code points using 1 to 4 bytes.

- UTF-16LE, an encoding form that represents all Unicode code point between

U+0000andU+FFFFas a 16-bit unit, all others with two 16-bit units (called surrogate pairs). It uses the little-endian byte order; e.g. theU+1234code point is represented by the byte sequence3412. - UTF-16BE, an encoding form that is similar to UTF-16LE, but uses the big-endian byte order; e.g. the

U+1234code point is represented by the byte sequence1234.

Though all strings are stored with the same encoding form, the SQLite C API provides functions to retrieve data in all others encoding forms. As we will see, the sqlite3 API will treat all TEXT data as UTF-8 strings.

SQLite Numeric Types

SQLite provides two ways of representing numbers: integer and real (floating point) numbers:

- Integers are signed numbers in “two’s complement” representation using 1, 2, 3, 4, 6, or 8 bytes, depending on the minimum size required to store their respective literal value. The integer literal is a sequence of hexadecimal digits (available since SQLite 3.8.6), or a sequence of decimal digits for which the following conditions hold:

- it has no decimal point;

- it has no exponent;

- it is not between -264 and (263)-1, which are the lower and upper bounds, respectively, of the two’s complement 8-byte representation. Otherwise, they will be represented as real numbers; e.g.

0xdeadand123are both integer literals.

- Real numbers are represented as an 8-byte IEEE 754 floating-point number. Their literal is a sequence of digits including a decimal point, or a sequence of digits with an exponent; e.g.

-123.45,.23and123e-12are real literals.

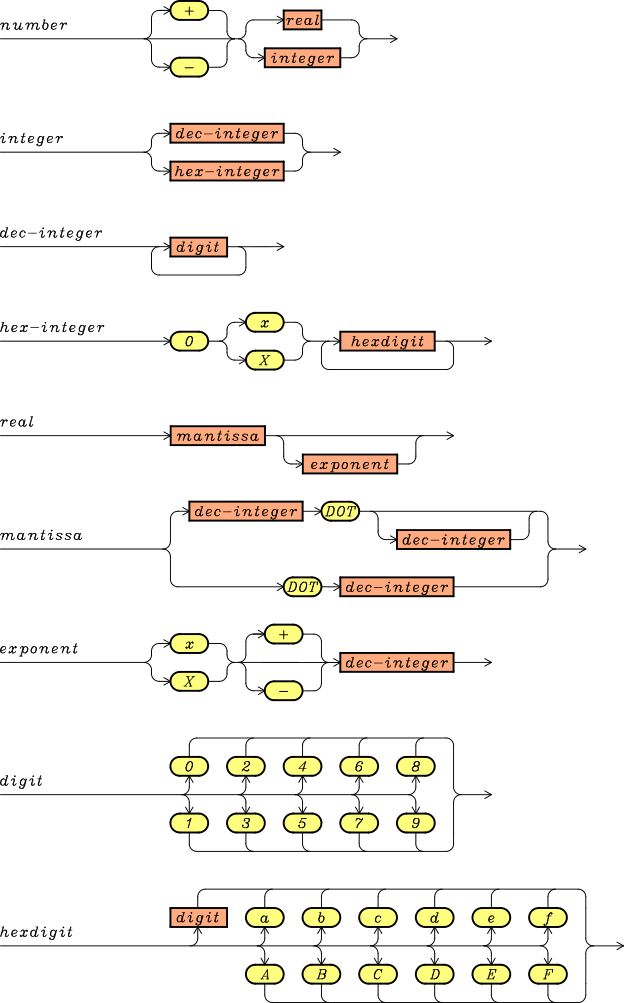

The following syntax diagrams sums up the rules for numeric literals:

Byte Sequences

The BLOB type (Binary Large OBject) holds arbitrary sequences of bytes, much like the bytes Python type. Literal BLOBs are represented as a sequence of hexadecimal digits, enclosed within ' (single quote character) and prefixed by x or X; e.g. x'deadbeef' is a valid BLOB literal. There must be an even number of hexadecimal digits in the literal, since two hexadecimal bytes makes up a byte. If we try to insert a BLOB with an odd sequence of hexadecimal digits, like x'12345', into a database, the SQLite core will raise an exception: sqlite3.Operational Error: unrecognized token. It treats such a literal as a syntax error.

The BLOB is represented as a sequence of n bytes, where n is half the length of the hexadecimal sequence of the literal.

Mapping SQLite Types

After describing all SQLite data types, we must understand how such a limited set of types can represent all data types required by the ISO SQL language, and how we can map them to Python types when we use them in the sqlite3 module.

Mapping SQLite Types into ISO SQL Types: Type Affinities

The ISO SQL language features many data types that don’t belong to SQLite, e.g. DATE, smallint, CHAR. Though SQLite doesn’t recognize these types, it accepts them in the schema definition. In order to do so, it must implicitly convert (map) them into its native types. It defines the concept of type affinity, i.e. a set of native SQLite types that an ISO SQL type can represent. If a value has type that doesn’t belong to the type affinity, it must be converted implicitly to a type included in the type affinity before being stored in the table. Five type affinities are defined:

- Text. A field with this affinity can only store

NULL,TEXT, orBLOB. Any other type will be converted to a text value before being stored in the table. - Numeric. A field with this affinity can store values of any type. Values other than text are stored without performing any conversion. The SQLite core attempt to interpret text data as a literal for a numeric type: if it succeeds, then the converted value is stored, otherwise the original value is stored; e.g.

'10'and'0xA'can be interpreted as string literals for10, but'home'can’t be converted, so it will be stored as it is. - Integer. This type affinity is similar to the numeric one, but it converts to an integer any

REALthat lacks a fractional part. - Real. This type affinity works like the numeric one, but it converts all integers to floating-point numbers.

- None (or BLOB). This type affinity indicates that all data will be stored with their original type.

We can see that we haven’t tackled our problem yet. We’ve given a set of rules to convert between values and type affinity, but we still have no clue how to map type affinities in ISO SQL types. That’s exactly what the following algorithm does:

- If the field definition has no type, than it has BLOB affinity.

- If the field type has

INTas substring, than it has Integer affinity. - If the field type contains any of

CHAR,TEXTorCLOBstrings, then it has a Text affinity. - If the field type contains the substring

BLOB, then has BLOB affinity. - If the field type contains any of

REAL,FLOA, orDOUB, then it has a Real affinity. - If no match is found, then the field is assigned the Numeric affinity.

To sum up this algorithm, I’ll provide a conversion table between the most relevant ISO SQL types and the SQLite Type Affinities:

| ISO SQL Type | SQLite Type Affinity |

|---|---|

| CHARACTER(n) | Text |

| VARCHAR(n) | Text |

| BOOLEAN | Numeric |

| smallint | Integer |

| INTEGER | Integer |

| DECIMAL(p, s) | Numeric |

| NUMERIC(p,s) | Numeric |

| REAL | Real |

| FLOAT | Real |

| DOUBLE PRECISION | Real |

| DATE | Numeric |

| TIME | Numeric |

| TIMESTAMP | Numeric |

| CLOB(n) | Text |

| BLOB(n) | BLOB |

Beware that you can use any identifier as a type, not only those in the ISO Standard, though restricting to those may improve portability of the CREATE TABLE statement; e.g. name Str NOT NULL is an acceptable column definition in SQLite, but it may not be in other database management systems.

Mapping SQLite Types into Python Types

The sqlite3 module goes a step further, and converts Python types into SQLite types (and the other way around) automatically, complying with the rules in the following table:

| Python Type | SQLite Type |

|---|---|

| None | NULL |

| int | Integer |

| float | Real |

| str | Text |

| bytes | BLOB |

This mapping is fine for most cases, but what if we have an arbitrary class, like a complex number, and we want to put it in a SQLite table? The sqlite3 API provides a more sophisticated mechanism for mapping Python types to SQLite types based on adapters and converters, but we won’t address those topics here.

sqlite3 Basics

Regardless of the database management system at hand, let’s talk about how to get our database up and running with Python:

- We need a way to keep track of all open databases. The

sqlite3.Connectionclass will do this. In our example we will attach just one database. (Attach is the fancy SQL way of saying open) - We need a way to interact with the SQLite core. We can feed all our SQL commands to a

sqlite3.Cursorobject. - We want to query a database to retrieve some data. The

sqlite3.Cursorclass has afetchmany()method which can fetch a certain number of records at a time. - We want to save changes to the database, just as we save changes to a text file after editing it. The

sqlite3.Connectionobject has acommit()method to do just that.

In the next sections we’re going to investigate each of these topics in detail.

Python Database API Specification v2.0

The API provided by the sqlite3 module is fully compatible with the Python Database API Specification (DB-API 2.0), which is meant as a template for APIs for the various database systems. This way, all API implementations will have a common set of classes and functions, making it easier for the programmer to switch from one to another. Each implementation can enhance this basic template by providing other classes and functions. In the next sections we will focus our attention only on the objects from the DB-API 2.0 specification.

Creating a Database Connection

This API defines one function:

connect(...) -> Connection

which has an undefined parameter list, and which must return a Connection object. Then, it defines three classes: Connection, Cursor and Error. We’ll deal with the Error class later.

The Connection Class

The Connection class must have the following methods:

| Name | Description |

|---|---|

| close() | Closes the connection. Further attempts to use the connection will raise an Error Exception. |

| commit() | Commit all pending changes to the database. |

| rollback() | Discard all pending changes. |

| cursor() | Returns a Cursor object for the connection. |

We will see below how sqlite3 implements all these methods.

The Cursor Class

The Cursor class must have the following methods and attributes:

| Name | Description |

|---|---|

| description | Read-only tuple holding a tuple of 7 items for each field in the schema of the data returned by a query. The items are: None. |

| close() | Closes the cursor. Every subsequent attempt of using the cursor will result in an Error exception. |

| execute(stmt) | Prepares and executes a SQL statement. |

| executemany(stmt, parameters) | Executes multiple SQL statements using the |

| fetchone() | Fetches the next row of a query result set, returning None if no more data is available. |

| fetchmany(n) | Fetches up to |

| fetchall() | Fetches all rows from a query result set. |

| arraysize | Read/write attribute that specifies the maximum number of rows a fetchmany() call returns by default. |

We will see below how sqlite3 implements all these methods.

Opening a New Connection with sqlite3.connect()

In order to open a database, we must first create a sqlite3.Connection object by calling the function:

sqlite3.connect(database: str, timeout: float) -> sqlite3.Connection- database is the path to the database. If you set it to the empty string, an anonymous, temporary, on-disk database is created. This database can only be accessed through the database connection which created it, and it will be destroyed once the connection is closed. If you set it to

:memory:, then a temporary, in-memory database is created. Each open connection will create its own database, and the database will be destroyed when the connection is closed. - timeout (optional) is the number of seconds the connection should wait for a lock on the database to be lifted before raising an exception. The default value is 5 seconds.

We shouldn’t mistake a sqlite3.Connection for a database! In fact:

- We can connect to an in-memory object and then attach as many databases as we want with the

ATTACHstatement. - The same database (the physical file where the database resides) can be opened by multiple connections at the same time.

Using a Connection Object as a Context Manager

The sqlite3.Connection object created by the sqlite3.connect() function is a context manager, so we can use it in a with statement, which will take care of closing the connection:

with sqlite3.open(path) as connection:

curs = perform_query(connection)

fetch_data(curs)The sqlite3.Cursor object created by the Connection.cursor() method, has a close() method to delete the object, but it is not a context manager. We can overcome this issue by defining a subclass of it, and then passing the subclass to the Connection.cursor() method:

class ContextManagerCursor(sqlite3.Cursor):

def __enter__(self):

return self

def __exit__(self, a, b, c):

self.close()Now we can use ContextManagerCursor in a with statement, like this:

with sqlite3.open(path) as conn:

with conn.cursor(ContextManagerCursor) as cursor:

query_cursor(cursor)Defining our Data Sample

From this point on, we will use the same sample database in our examples. This database is located in the file sample.db3, and has a single table, named symphonic, defined by the following CREATE TABLE statement:

CREATE DATABASE IF NOT EXISTS symphonic (

id INTEGER PRIMARY KEY,

band TEXT NOT NULL,

artist TEXT NOT NULL,

birth_date TEXT,

country TEXT NOT NULL,

album TEXT NOT NULL,

year INTEGER NOT NULL

);

The sample data can be found in the datasrc.csv file attached to this tutorial. If you want to create this database from its source data (and maybe add some more records), you can use the create_sample_database() function in the Python source code attached to this tutorial.

Executing a Statement

Now that we have created a database connection using Python and sqlite3, any other operation on the database requires the execution of a SQL statement. The sqlite3 module provides two ways of executing a statement:

- By using the

Connection.execute(stmt)method, which returns asqlite3.Cursorobject that can be used to retrieve the results. - By creating a cursor with the

Connection.cursor()method, and then calling theCursor.execute(stmt)method.

During the statement execution, the SQLite core can raise any exception derived from the sqlite3.Error class.

Executing Multiple Statements

Preparing a statement for execution is a time-consuming task. The SQLite core has to parse the statement string, create the proper data structure, and create the bytecode for the Virtual Database Engine. The SQLite core tries to reuse previously prepared statements by caching them. While we can’t control statement caching, we can improve performance by using using another execution method, called executemany(stmt, iterable). This method requires two parameters:

?: SQLite will automatically assign an index to each parameter.?n: wheren is the index of the parameter. Indices start from 1. The same parameter can be used more than once.:name: the fields can be referenced by name. The same name can be used more than once.@name: the fields can be referenced by name. The same name can be used more than once. This is a non-standard feature.

Since all these replacement methods can be a bit confusing, let’s take a look at some examples. These examples have some common features:

- They all use

sample-replace.db3 as sample database. This database has the same schema as thesample.db3database. Both sample databases are included in this ZIP file. - They delete all data from the

symphonictable before trying to insert new rows. - They all use the

executemany()method to perform a sequence ofINSERTstatements in order to load data from a CSV file. - The

datasrc.csv file has the same column order as thesymphonictable in the database. - The

datasrc-mod.csv file has the same data as thedatasrc.csv file, but columns are arranged in the following order:id ,artist ,birth_date ,country ,band ,album ,year . We need this file to test the ability of the?nand:nameplaceholders to rearrange the columns from the input source. - The

print_all()function just selects all data from thesymphonictable and prints the data. It serves to test if the data has been loaded properly into the table. - They all use the

csvmodule to read CSV data from a file.

To get use the sqlite3 module in Python, you must enter import sqlite3 at the top of your script. To be complete, we’re going to import the sqlite3 and csv module in this example.

import sqlite3

import csv

def replace_by_position():

""" Tests the `?` placeholder. """

with sqlite3.connect('sample-replace.db3') as conn, \

open('datasrc.csv', 'rt', newline='') as src:

conn.execute('DELETE FROM symphonic;')

stmt = 'INSERT INTO symphonic VALUES(?, ?, ?, ?, ?, ?, ?);'

conn.executemany(stmt, csv.reader(src))

print_all(conn)

def print_all(conn):

""" Prints all the contents of the sample database. """

for row in conn.execute('SELECT * FROM symphonic;').fetchall():

print(','.join([str(f) for f in row]))

replace_by_position()The replace_by_position() function uses the ? placeholder to mark which field from the source row should take its place. The first ? will be replaced by the first field of the source row, the second ? will be replaced by the second field, and so on.

If you’re running an earlier version of Python, like Python 2.7 instead of Python 3, you may need to remove the newline argument from your open csv file statement. You’ll also most likely need to add conn.text_factory = str to prevent execution errors.

def replace_by_index():

""" Tests the `?n` placeholder. """

with sqlite3.connect('sample-replace.db3') as conn, \

open('datasrc-mod.csv', 'rt', newline='') as src:

conn.execute('DELETE FROM symphonic;')

old_header = ('id', 'band', 'artist', 'birth_date',

'country', 'album', 'year')

new_header = ('id', 'artist', 'birth_date',

'country', 'band', 'album', 'year')

stmt = 'INSERT INTO symphonic VALUES' \

'(?1, ?5, ?2, ?3, ?4, ?6, ?7);'

conn.executemany(stmt, csv.reader(src))

print_all(conn)The replace_by_index() function is a bit more sophisticated, since it uses indices, starting from 1, to mark the corresponding field in the source row. The first placeholder will be replaced by the first field in the row, the second placeholder will be replaced by the fifth field in the row, and so on. This approach can be error prone, since we have to map the indices of the table schema to the indices of the source file. Regardless, this function uses the same print_all function presented in our first example, and you’ll still need to import the csv and sqlite3 modules to get it to run.

def replace_by_name():

""" Tests the `:name` placeholder. """

with sqlite3.connect('sample-replace.db3') as conn, \

open('datasrc-mod.csv', 'rt', newline='') as src:

conn.execute('DELETE FROM symphonic;')

new_header = ('id', 'artist', 'birth_date',

'country', 'band', 'album', 'year')

stmt = 'INSERT INTO symphonic VALUES' \

'(:id, :band, :artist, :birth_date,' \

' :country, :album, :year);'

conn.executemany(stmt, csv.DictReader(src, new_header))

print_all(conn)The replace_by_name() function uses the :name placeholder to mark the replacement point. Here’s the twist: instead of using the csv.reader() function, which parses the CSV file into a list of tuples, we employ the csv.DictReader() class, feeding it with the new header. This class returns a list of dictionaries, which map each column name to its value. Now we can automatically match the fields to the :name placeholder to which they belong. This approach is more intuitive than mapping numeric indices.

Each of these functions will print the same output, i.e. the entire contents of the table:

... 17,Elyose,Justine Daaé,,FRA,Ipso Facto,2015 100,Nightwish,Tarja Turunen,1977-08-17,FIN,Once,2004 101,Diabulus in Musica,Zuberoa Aznárez,,ESP,Argia,2014 102,Frozen Crown,Giada Etro,,ITA,The Fallen King,2018 103,Ravenia,Armi Päivinen,,FIN,Beyond the Walls of Death,2016 104,Trillium,Amanda Somerville,1979-03-07,USA,Tectonic,2018 105,Nemesea,Manda Ophuis,1980-11-27,NLD,Uprise,2016 ...

The SELECT statement

The clauses in a SELECT statement are not evaluated in the same order they are written. To have a more accurate insight of what happens under the hood, we need to take a look at the bytecode of the Virtual DataBase Engine (VDBE), which is an abstract machine that serves as a layer between the SQLite statements feed to the SQLite core and the processor. The following algorithm should suffice for now:

FROM table-list: all data from the tables intable-list are retrieved.WHERE filter: just the rows for whichfilter holds are selected from the table.GROUP BY expr: all selected data are grouped using theexpr expression of this clause.SELECT field-list: only the fields infield-list are projected into the answer.HAVING expr: if aGROUP BYclause is provided, then just the rows for whichexpr holds true are selected. Otherwise an exception is raised, since eachHAVINGclause must have its correspondingGROUP BYclause.DISTINCT: if this keyword is present, then all duplicate rows are eliminated from the answer.ORDER BY methods: all data are sorted by applying all methods from left to right.OFFSET nandLIMIT m: if present, theOFFSETclause skipsn rows (starting from the beginning) from the answer.LIMITclause will reduce the answer to at mostmrows.

The actual algorithm of the VDBE is not like this, though. It doesn’t need to hold the entire table described in phase 1, but it just produces one row of results at a time.

Defining a SQLite Table

In the SQL language, creating a table is a matter of providing the CREATE TABLE statement with a table schema, defining name, types and a set of constraints for each column. We can also define table-wise constraints and compound primary keys. In this section we will only point out the differences between the ISO Standard and the SQLite implementation.

Regular Tables

A regular table, i.e. a table holding data that resides in the database, are created with the CREATE TABLE statement. SQLite adds an optional IF NOT EXISTS keyword, which prevents the table creation in case it already exists, without raising any exception. Beware that in SQLite all table and field identifiers are case-insensitive, e.g. if a table has an

An odd property of SQLite tables is the PRIMARY KEY constraint, which implies the UNIQUE constraint, but not the NOT NULL constraint, except for integer primary keys. This behavior is due to a bug in an older release, and it has been kept since then for compatibility’s sake. That means when we declare a non-integer primary key, we must always add the NOT NULL constraint as well. I told you it was odd.

SQLite requires that at least one column of the table can be indexed. SQLite will implicitly create a hidden * placeholder of the SELECT statement, so you should include them implicitly in the field list of the SELECT statement using one of the available aliases.

If a table includes a column defined as INTEGER PRIMARY KEY, that column becomes another alias for the INTEGER PRIMARY KEY column has the following advantages:

- That column is automatically indexed, so there is no need to create a new index by using a

CREATE INDEXstatement. - That column will be checked for both

NOT NULLandUNIQUEconstraints. - A default value is automatically provided to that column if no value is specified. If the column is also marked as

AUTOINCREMENT, the new default key will be incremented by one, and previous keys, even when they are freed by a deletion, will never be used again. When the key hits the maximum value, the SQLite core raises an exception.

Tables without a ROWID Column

Since SQLite version 3.8.2 (2013-12-06), a table can be created without an implicit WITHOUT ROWID keyword in the CREATE TABLE statement. While tables with a

- A table without

ROWID must have a primary key, since no automatic index is built on the table. - When a table has no

ROWID the behavior described above forINTEGER PRIMARY KEYno longer applies. Such a column will be treated with an integer affinity. AlsoAUTOINCREMENTwill not work, since the column may no longer contain only integer values. - The

NOT NULLconstraint will be enforced on primary keys for tables withoutROWID .

I encourage you to refer to the official documentation for more information about this new kind of table.

Virtual Tables

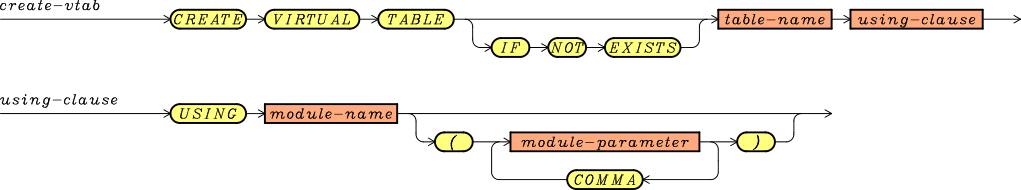

Along with regular tables and views, SQLite provides another way of declaring a table by using virtual tables. A virtual table is a table implemented through a C module, which is then loaded into the SQLite core. A virtual table is created using the CREATE VIRTUAL TABLE statement as described in the following syntax diagram:

where:

- module-name is the name used when registering the module; it is hardcoded in the C source.

- module-parameter is one of the parameters that the module allows. You should browse the module documentation to see if the module allows parameters and what they mean.

In order to add an extension module into your database connection, you need to allow extensions with Connection.enable_load_extension(True). After doing this, you will load it into the connection with Connection.load_extension(path), where DROP TABLE.

Even if you don’t know how to write a module yourself, the SQLite Development Team provides some useful modules, including a module for treating CSV file as read-only virtual tables, a module for generating a series of integers within a certain range, and a module for performing text searches. For more information about virtual tables see the official documentation.

Handling Exceptions and Basic Transactions

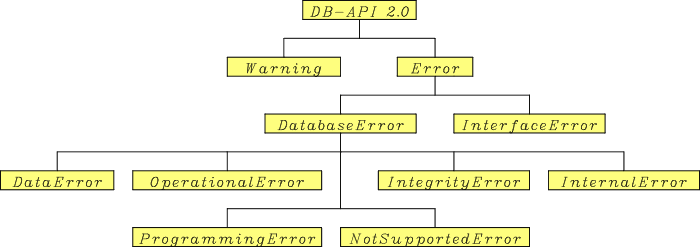

As stated above, the DB-API 2.0 defines an Error class, as well as many other subclasses:

| Class Name | Description |

|---|---|

| Warning | Exception raised for important warnings, like truncation of data while inserting. |

| Error | Base class for all exceptions of the module. |

| InterfaceError | Errors related to the database interface, rather than the interpreter. |

| DatabaseError | For errors related to the database. |

| DataError | For errors due to data handling, e.g. value out of bounds. |

| OperationalError | Encompasses a wide variety of errors, e.g. unexpected disconnection, missing table names, error while handling transactions, memory allocation failure. |

| IntegrityError | Violation of data integrity, e.g. failing a foreign key check. |

| InternalError | Error pertaining to the SQL interpreter, e.g. invalid cursor, transactions out of sync. |

| ProgrammingError | Encompasses many kinds of SQL syntax and semantic errors, e.g. wrong number of bindings for a prepared statement, syntax error in a statement. |

| NotSupportedError | Notifies that a method of the API is not supported by the database, e.g. requesting a commit() or rollback() when transactions are disabled. |

All these classes are organized in a hierarchy, as follows.

Knowing the exceptions’ hierarchy might help us when we want to filter exceptions by using an appropriate except clause in a try statement.

The Connection.commit() and Connection.rollback() Methods

All changes to the database are made within a transaction. Transactions allow you to store changes to the database elsewhere, and to write them back into the database when the transaction is closed (or committed). Normally, the SQLite core is in autocommit mode, that is:

- it will automatically start a transaction before a command that can modify the contents of the database (e.g.

INSERT,UPDATE,DELETE). - it executes the command.

- if no exception is raised during the execution, it automatically commits the transaction.

The autocommit mode can be disabled by explicitly opening a new transaction with a BEGIN TRANSACTION command, in which case you should also issue the COMMIT or ROLLBACK commands to close the transaction. The sqlite3 module provides the Connection.commit() and Connection.rollback() as a shorthand for issuing, respectively, the COMMIT and ROLLBACK commands.

In all our examples, we will use SQLite in autocommit mode. Beware that changes to the database are not discarded (rolled back) if SQLite is in autocommit mode and an exception occurs. So every time an exception from the SQLite core is raised while executing a command that may change the content of the database, we will use the following code pattern:

try:

curs = connection.execute(stmt)

handle_results(curs)

except sqlite3.Error:

connection.rollback()

else:

connection.commit()This way, we can guarantee that all changes to the database from the start of the execution of the statement until the exception was raised will be discarded.

Reading Data from a SQLite Database

Now, we will turn to the last of our objectives: querying a database.

Fetching Results from a Cursor

After a SQL statement has been executed successfully, we are ready to retrieve data from the cursor. The sqlite3.Cursor provides three ways to do this:

- Using the

fetchone()method, which returns one row from the data set of the query, orNonewhen no more rows are available. - Using the

fetchmany(n)method, which returns up ton rows, depending on how many rows are left. Alternatively, we can setCursor.arraysizeto the number of rows we want to fetch at a time, and then omit then parameter. - Using the

fetchall()methods, which returns a list of all remaining rows in the query data set.

Let’s take a look at some examples:

import sqlite3

def test_fetchone():

""" Fetching results from a query one by one. """

with sqlite3.connect('sample.db3') as conn:

query = 'SELECT Band, Artist, Country FROM symphonic ' \

"WHERE Country='DEU';"

curs = conn.execute(query)

row = curs.fetchone()

while row is not None:

print(','.join(row))

row = curs.fetchone()

def test_fetchmany():

""" Fetching results from a query in blocks. """

with sqlite3.connect('sample.db3') as conn:

query = 'SELECT Band, Artist, Country FROM symphonic ' \

"WHERE Country='FIN';"

curs = conn.execute(query)

curs.arraysize = 3

rows = curs.fetchmany()

n = 1

while rows:

for row in rows:

print('%d: %s' % (n, ','.join(row)))

n += 1

rows = curs.fetchmany()

print "--RESULTS test_fetchone--"

test_fetchone()

print "--RESULTS test_fetchmany--"

test_fetchmany()The test_fetchone() function queries the database for all German artists, then fetches the results one by one. Notice how we used row is not None as a sentinel for the while loop, since the fetchone() method returns None when it has no more rows to fetch. The output of this function is:

Beyond The Black,Jennifer Haben,DEU Arven,Carina Hanselmann,DEU Xandria,Manuela Kraller,DEU Aeverium,Aeva Maurelle,DEU

The test_fetchmany() function queries the database for all Finnish artists. We set cursor.arraysize = 3, so that curs.fetchmany() will return at most three rows at a time. Notice how we used rows as sentinel to the while loop, since the fetchmany() method returns an empty tuple when it has no more rows to fetch. We prefixed each row with a number indicating the output block to which the row belongs. As we can see from the output below, the second block of output has only two rows:

1: Amberian Dawn,Päivi "Capri" Virkkunen,FIN 1: Dark Sarah,Heidi Parviainen,FIN 1: Katra,Katra Solopuro,FIN 2: Nightwish,Tarja Turunen,FIN 2: Ravenia,Armi Päivinen,FIN

We’ll show examples of the fetchall() method in the remaining sections.

Using sqlite3.Row to Access Fields by Name

The sqlite3.Connection class provides a row_factory attribute which allows you to customize the way rows are fetched by one of the fetching methods we just described. This attribute must be set to a callable object, which will be called as row_factory(cursor, row), where:

- cursor is a class derived from the

sqlite3.Cursorclass. - row is a tuple, holding a single row of data from a query.

A callable is every object providing a __call__() method. Let’s see an example demonstrating how to access fields by name:

def access_fields_by_name(cursor, row):

""" `row_factory` function to access fields by name. """

header = [ field[0].lower() for field in cursor.description ]

return { name : value for name, value in zip(header, row) }

def test_row_factory():

""" Fetching data with a customized `row_factory`. """

with sqlite3.connect('sample.db3') as conn:

conn.row_factory = access_fields_by_name

query = "SELECT Band, Artist, Country FROM symphonic;"

for row in conn.execute(query).fetchall():

print(", ".join((row['artist'], row['country'])))access_fields_by_name() is the row factory that maps each item in the cursor.description holds the list of column names for the result of the query. The test_row_factory() function opens a connection to the sample database, then it sets str.join() function to separate each field with a comma. This is a snippet from its output:

... Jennifer Haben, DEU Carina Hanselmann, DEU Federica Lanna, ITA Dianne van Giersbergen, NLD Clementine Delauney, FRA Floor Jansen, NLD Liv Kristine Espenæs, NOR ...

Isn’t that neat?

The sqlite3 module also provides a Row class, that can be assigned to the Connection.row_factory attribute to provide both index-based and key-based access to the fields of the records. Key matching is case insensitive. Let’s see this class in action:

def test_row_class():

""" Fetching data with the `sqlite3.Row` class. """

with sqlite3.connect('sample.db3') as conn:

conn.row_factory = sqlite3.Row

query = "SELECT * FROM symphonic;"

for row in conn.execute(query).fetchall():

print(", ".join((row['Band'], row[2], row['COUNTRY'])))The test_row_class() function sets sqlite3.Row as the row factory of the open connection, then it selects the test_row_class prints:

... Within Temptation, Sharon den Adel, NLD Dark Sarah, Heidi Parviainen, FIN Delain, Charlotte Wessels, NLD Katra, Katra Solopuro, FIN Ancient Bards, Sara Squadrani, ITA Xandria, Manuela Kraller, DEU ...

Closing Thoughts

In this tutorial we addressed the main features of the sqlite3 module from the Python standard library. First, we describe all native SQLite types, and we learned how to map them into ISO SQL types using type affinities. After that, we got in touch with the DB-API 2.0, an API for database management systems that aims to be independent from any particular implementation. This API allows us to open a new connection, to execute SQL commands, and to retrieve a query data set using cursors. We also learned about basic transactions, like Commit and Rollback. Finally, we took a look at how all these classes are implemented in the sqlite3 module with several Python examples.

Did you find this free tutorial helpful? Share this article with your friends, classmates, and coworkers on Facebook and Twitter! When you spread the word on social media, you’re helping us grow so we can continue to provide free tutorials like this one for years to come.

References

- SQLite Development Team, The Virtual Table Mechanism Of SQLite, Retrieved 2019-03-22

- SQLite Development Team, List of Virtual Tables, Retrieved 2019-23-22

- SQLite Development Team, Datatypes In SQLite Version 3, Retrieved 2019-03-22

- SQLite Development Team, Clustered Indexes and the WITHOUT ROWID Optimization, Retrieved 2019-03-22

- Marc-André Lemburg, PEP 249 – Python Database API Specification v2.0, PEP-0249