Question-answering systems are applications that can provide natural language answers to natural language questions. They are useful in all kinds of domains, including education, customer service and health care.

In this article, you will learn to develop a basic question-answering system in Python using the OpenAI API. This API provides access to ChatGPT, a large-scale pre-trained language model capable of generating coherent and engaging texts.

You will study how to develop both stateless and stateful question-answering systems. In a stateless conversation, the system retains no memory of past interactions, and each user input is treated in isolation. In contrast, in a stateful conversation, the system retains memory of past interactions and maintains context throughout the conversation.

Let’s begin with our stateless question/answering system.

Developing a Stateless Question/Answering System

To use ChatGPT for stateless question-answering, you need to perform the following steps:

- Import the OpenAI library and set the OpenAI chat model.

- Define a function that takes a question as input and returns an answer as output.

- Call the function with different questions and print the answers.

Let’s see how to implement each step in detail.

Step 1: Import the OpenAI library and Set the OpenAI Chat Model

The first step is to import the OpenAI library and configure the ChatGPT model. We will use the gpt-3.5-turbo model, which ChatGPT employed before the introduction of GPT-4. The code in this article uses the openai.ChatCompletion interface, which is available in versions of the openai package earlier than 1.0. Make sure you replace YOUR_OPEN_AI_KEY with a valid API key, which you can create in your OpenAI account settings.

#Import the OpenAI library
import openai

#Set the authentication key
openai.api_key = "YOUR_OPEN_AI_KEY"

model = "gpt-3.5-turbo" # ChatGPT used this model before GPT-4.

Step 2: Define a Question/Answering Function

The next step is to define a function that takes a question as input and returns an answer as output. We can do this using the following code:

#Define a function that takes a question as input and returns an answer as output
def ask_chat_gpt(question):
    #Generate a response from ChatGPT using the question chat message
    response = openai.ChatCompletion.create(
        model = model,
        messages = [
            {"role": "user", "content": question}
        ],
        max_tokens=100,
        temperature=0.9,
        frequency_penalty=0.5,
        presence_penalty=0.5,
    )

    #Extract the answer from the response
    answer = response.choices[0].message['content']

    #Return the answer
    return answer

The ask_chat_gpt() function in the above script uses the openai.ChatCompletion.create() method to generate a response from ChatGPT using the question as a prompt. The method takes several parameters, such as:

- model: The name of the OpenAI model to use. We used gpt-3.5-turbo, but you can use gpt-4 if you have access to the GPT-4 model in the OpenAI API.

- messages: The conversation given to ChatGPT as input, passed as a list of messages that each have a role and a content field.

- max_tokens: The maximum number of tokens that ChatGPT can generate as output. A token is a piece of text, typically a word or part of a word.

- temperature: A value between 0 and 2 that controls the randomness of the output. A higher value means more creativity and diversity, while a lower value means more consistency and coherence.

- frequency_penalty: A value between -2 and 2 that penalizes tokens according to how often they have already appeared in the text. A higher value means less repetition, while a lower value means more repetition.

- presence_penalty: A value between -2 and 2 that penalizes tokens that have already appeared in the text at all, regardless of how often. A higher value encourages the model to introduce new words and topics, while a lower value keeps it closer to what has already been said.
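The parameters above can be gathered and sanity-checked before a request is sent. The following is a minimal sketch, not part of the original script: build_chat_request() is a hypothetical helper, and the ranges it checks (0 to 2 for temperature, -2 to 2 for both penalties) are the ones given in the OpenAI API reference.

```python
def build_chat_request(question, model="gpt-3.5-turbo", max_tokens=100,
                       temperature=0.9, frequency_penalty=0.5,
                       presence_penalty=0.5):
    """Return keyword arguments for a chat completion request.

    Hypothetical helper: it only validates and packages the values,
    it does not call the API.
    """
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    for name, value in (("frequency_penalty", frequency_penalty),
                        ("presence_penalty", presence_penalty)):
        if not -2 <= value <= 2:
            raise ValueError(f"{name} must be between -2 and 2")

    return {
        "model": model,
        # A single user message, as in ask_chat_gpt()
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
    }


request = build_chat_request("What is the capital of Australia?")
print(request["messages"])
```

With the library used in this article, the resulting dictionary could be passed along as openai.ChatCompletion.create(**request).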

The openai.ChatCompletion.create() method returns a dictionary-like object that contains several fields. You can extract the output using the choices field, which consists of a list of possible outputs generated by ChatGPT. Each output contains the message field, which is also a dictionary. You can use the message dictionary’s content field to extract the response.

In our case we only have a single ChatGPT response for each input question. Therefore, the ask_chat_gpt() function extracts the answer from the first output in the list. The function then returns the answer as a string.
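To make the response structure concrete, here is a small sketch that mirrors the choices[0].message['content'] access used in ask_chat_gpt(). The sample_response dictionary is hand-written for illustration and is not real API output; an actual response carries additional fields.

```python
# Hand-written stand-in for an API response (illustrative only)
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {
                "role": "assistant",
                "content": "This is a placeholder answer.",
            },
            "finish_reason": "stop",
        }
    ]
}


def extract_answer(response):
    """Return the text of the first output in the choices list."""
    first_choice = response["choices"][0]
    return first_choice["message"]["content"]


print(extract_answer(sample_response))
```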

Step 3: Execute Conversations using the Question/Answering Function

The final step is to call the function with different questions and print the answers. The following script does that:

#Call the function with different questions and print the answers

questions = [

"Who is the president of France?",

"What is the capital of Australia?",

"How many planets are there in the solar system?",

"Who wrote Romeo and Juliet?",

"What is the square root of 81?"

]

for question in questions:

answer = ask_chat_gpt(question)

print(f"Q: {question}")

print(f"A: {answer}")

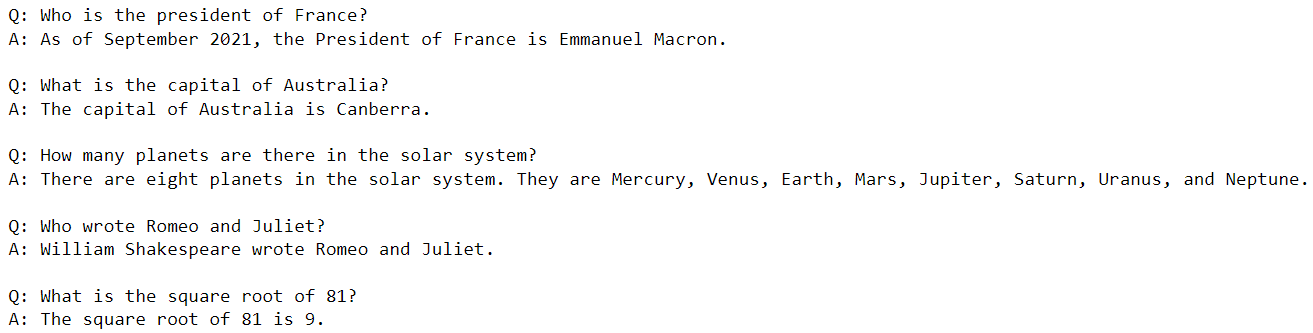

print()Output:

The above output shows the response generated by ChatGPT for every question in the questions list.

NOTE: If you get an “Incorrect API key provided” error, make sure you’ve copied a valid API key into the script in Step 1.
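One way to avoid pasting the key into the script is to read it from an environment variable. This is a sketch under the assumption that you have exported the key as OPENAI_API_KEY in your shell; load_api_key() is a hypothetical helper, not part of the OpenAI library.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Read the API key from an environment variable and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"No API key found: set the {env_var} environment variable first."
        )
    return key
```

In Step 1, the assignment would then become openai.api_key = load_api_key().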

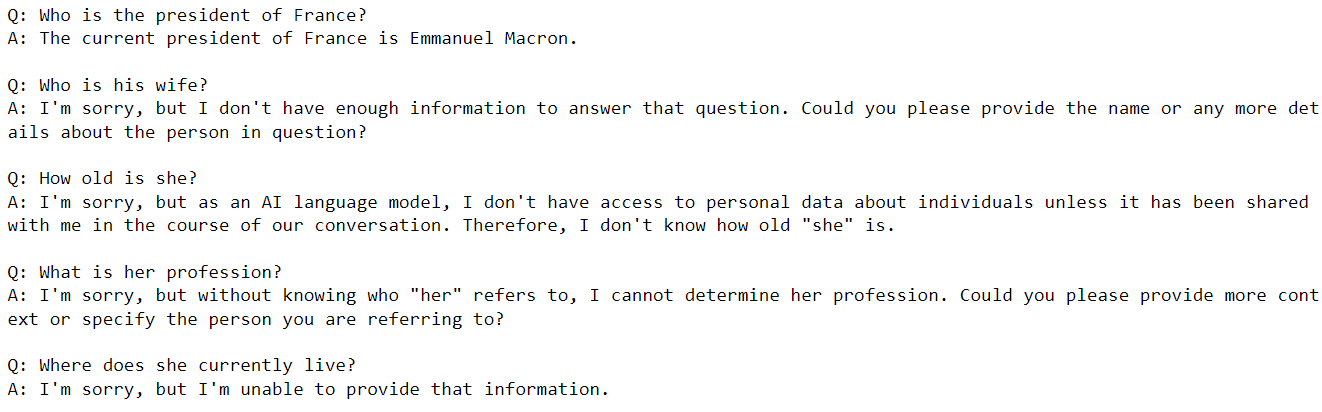

One limitation of the question-answering system we built so far is that it does not maintain any state or memory of the previous questions and answers. This means that ChatGPT cannot handle follow-up questions or refer back to previous information. For example, if we ask “Who is his wife?” after asking “Who is the president of France?”, ChatGPT will not know who “he” is and will generate a random or irrelevant answer.
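The reason becomes visible when you look at what each stateless request contains. The sketch below only builds the messages list, in the same shape that ask_chat_gpt() sends, without calling the API; stateless_messages() is a hypothetical helper introduced for illustration.

```python
def stateless_messages(question):
    """Build the messages list that a stateless call sends for one question."""
    return [{"role": "user", "content": question}]


questions = [
    "Who is the president of France?",
    "Who is his wife?",
]

for question in questions:
    # Each request carries only the current question, nothing from earlier turns
    print(stateless_messages(question))
```

The second request contains nothing about France or its president, so the model has no way to resolve “his”.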

For instance, ChatGPT will not be able to answer the follow-up questions in the following code:

#Call the function with different questions and print the answers
questions = [
    "Who is the president of France?",
    "Who is his wife?",
    "How old is she?",
    "What is her profession?",
    "Where does she currently live?"
]

for question in questions:
    answer = ask_chat_gpt(question)
    print(f"Q: {question}")
    print(f"A: {answer}")
    print()

Output:

In the next section, you will see how to overcome this limitation.

Maintaining Stateful Conversations where ChatGPT Remembers Chat History

To implement stateful conversation with ChatGPT, we need to modify our ask_chat_gpt() function. This modification involves keeping track of the previous questions and answers, and appending them to the prompt before generating a new answer. This way, ChatGPT can use the previous context to generate more relevant and coherent answers.

Here is the modified ask_chat_gpt() function for stateful conversation.

#Define a global variable to store the previous questions and answers
history = ""

#Modify the function to append the previous questions and answers to the prompt
def ask_chat_gpt(question):
    # Declare the global variable
    global history

    #Append the question to the history
    history += f"Q: {question}\n"

    #Generate a response from ChatGPT using the whole history as the prompt
    response = openai.ChatCompletion.create(
        model = model,
        messages = [
            {"role": "user", "content": history}
        ],
        max_tokens=100,
        temperature=0.9,
        frequency_penalty=0.5,
        presence_penalty=0.5,
    )

    #Extract the answer from the response
    answer = response.choices[0].message['content']

    #Append the answer to the history
    history += f"A: {answer}\n"

    # Return the answer
    return answer

In the above code, we define a global variable history that stores the previous questions and answers as a string. The function ask_chat_gpt() appends each question and answer to the history and uses it as a prompt for ChatGPT. The function also returns only the answer, not the whole history.
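As an alternative to one growing string, the history can also be kept as a list of messages, since the chat API accepts earlier turns tagged with the "user" and "assistant" roles. The following is a minimal sketch of that idea rather than the approach used in this article. The Conversation class and the send callable are hypothetical names, and fake_send() stands in for the API call so the example runs offline.

```python
class Conversation:
    """Keep chat history as a list of role-tagged messages (illustrative sketch)."""

    def __init__(self, send):
        # `send` is a hypothetical callable: it receives the messages list
        # and returns the assistant's reply as a string.
        self.send = send
        self.messages = []

    def ask(self, question):
        # Record the user's question
        self.messages.append({"role": "user", "content": question})
        # Send a copy of the full history so the callee cannot alter it
        answer = self.send(list(self.messages))
        # Record the reply so later questions can refer back to it
        self.messages.append({"role": "assistant", "content": answer})
        return answer


def fake_send(messages):
    """Offline stand-in for the API call; reports how many messages it received."""
    return f"(reply after receiving {len(messages)} message(s))"


chat = Conversation(fake_send)
print(chat.ask("Who is the president of France?"))
print(chat.ask("Who is his wife?"))
```

With the real API, send could wrap openai.ChatCompletion.create(model=model, messages=messages) and return response.choices[0].message['content'], as in ask_chat_gpt(). Keeping the history on an object instead of in a global variable also makes it possible to run several independent conversations side by side.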

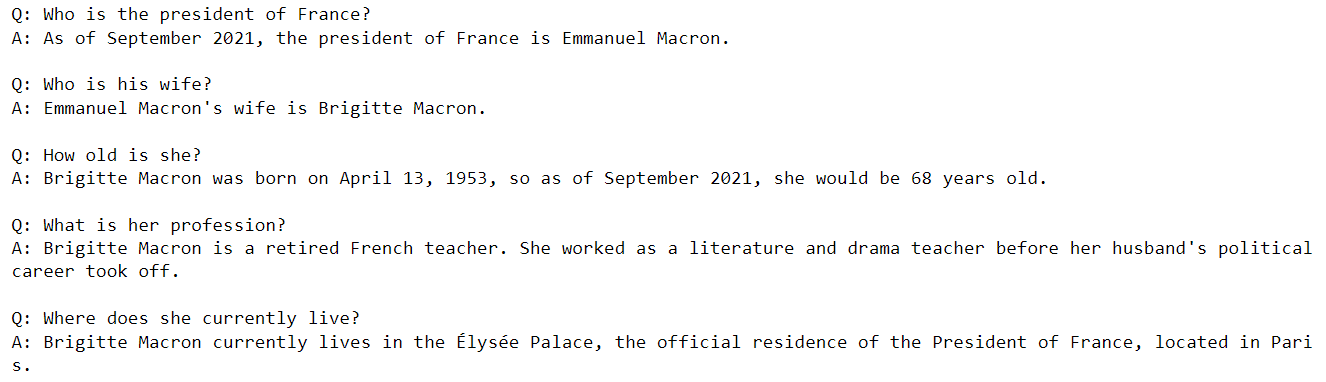

Now, we can call the function with different questions and print only the answers. We can also ask follow-up questions or refer back to previous information.

Run the following script to see the stateful conversation in action.

#Call the function with different questions and print the answers
questions = [
    "Who is the president of France?",
    "Who is his wife?",
    "How old is she?",
    "What is her profession?",
    "Where does she currently live?"
]

for question in questions:
    answer = ask_chat_gpt(question)
    print(f"Q: {question}")
    print(f"{answer}")
    print()

Output:

You can see that now ChatGPT is able to answer follow-up questions. I hope you liked the article!