Exchanging data between a CSV file and a database is a very common task. The csv module from the Python standard library proves to be a useful tool for reading and writing CSV data in one of the many flavors of the CSV format (called dialects), but this module treats all fields in a CSV record as plain text. This happens even when our databases are populated with other data types, like integers, real numbers, dates. In this tutorial we’ll describe how to detect data types from a CSV file, and how to map them to one of the native SQLite types so we can store them in a SQLite database. Once our data are in the database, we will learn how to convert the SQLite query results back into CSV records.

- Introduction

- Converting CSV to SQLite Using CSV Headers

- Converting CSV to SQLite Using Data Literals

- Converting CSV to SQLite Using SQLite Literals

- Closing Thoughts

Introduction

Remarks on CSV Files

As we previously explained in our Python CSV Dialects tutorial, the RFC dialect of CSV doesn’t impose any meaning on the contents of a field. It just prescribes that:

- fields including a

,(comma) character must be surrounded by"(double quote character); e.g. the fielda, b must be written as"a, b" in a CSV file. - double quote characters within a field must be escaped by

""(two double quote characters in a row); e.g. the fielda " b must be written asa "" b in a CSV file.

Fortunately, we don’t need to bother with these issues: the csv module will take care of them for us! We just need to pick a proper CSV dialect for the csv.reader() and csv.writer() classes.

Choosing a CSV Dialect

The RFC dialect of the CSV data format can be defined as follows:

import csv

import os

class RFCDialect(csv.Dialect):

delimiter = ','

doublequote = True

lineterminator = os.linesep

quotechar = '"'

quoting = csv.QUOTE_MINIMALwhere:

- the

delimiter attribute stands for the field delimiter. - we set

doublequote toTrue, in order to escape a"character in a field with"". - the

lineterminator attribute stands for the character which separates two consecutive lines. It is set toos.linesep, which is the default line terminator for the operating system. - the

quotechar attribute is the character enclosing a field. - by setting

quoting tocsv.QUOTE_MINIMAL, only those fields including a comma will be enclosed betweenquotechar .

This dialect can be passed as a csv.reader(), csv.DictReader, csv.writer(), and csv.DictWriter. We will use the default Excel dialect in all our examples, since it encompasses all RFC restrictions listed above. It can be defined just as RFCDialect, except for the fact that \r\n. For additional information on how to define new dialects and on all predefined dialects, please refer to our complete Python CSV Dialect tutorial.

The Data Exchange Process

Reading CSV data from a file and loading them into a SQLite database requires the following steps:

- Reading the CSV file: we need to split each line from the CSV file into its fields. The

csvmodule will take care of it for us. At this point, each field will be represented by astrobject. - Building Python objects from the fields: now that we have a tuple of strings for each row of CSV data, we wish to convert each field into a suitable Python object that can represent our data. This way, we can perform some operations on them, before loading them into the database. This step is optional.

- Adapting Data: before storing our CSV data into a SQLite database, we must convert them to one of the native SQLite types. We will use adapters to handle this step.

Writing data from a SQLite database to a CSV file requires the following steps:

- Querying the database: we use a

SELECTstatement to retrieve the data from the SQLite database. - Converting data to Python objects: we use converters to turn SQLite types into suitable Python objects. Then, we can perform some operation on those objects before writing them to a CSV file. Depending on the type of data in the database, it’s possible to skip this step.

- Mapping Python objects into strings: we can provide our Python objects with a

__str__()method to convert them intostrobjects. Thecsv.writer()andcsv.DictWriter()classes will call this method implicitly while preparing a CSV field. - Writing to the CSV file: now that all fields are ready, we can write them to a CSV file. The

csvmodule has this covered, since it can implicitly perform all necessary transformations (e.g. quoting, escaping, and encoding).

For each approach described here, we will explain how both processes (converting CSV data to a SQLite database and writing data from a SQLite database to a CSV file) are implemented.

Remarks on Adapters and Converters

Unlike other user-defined functions (e.g. scalar and aggregate functions), if an adapter raises an exception, sqlite3 will automatically catch the exception and revert to the default per-value adapter.

import sqlite3

def test_adapters_exceptions():

""" Faulty adapters. """

def faulty_adapter(data):

""" An adapter raising an exception. """

print('Faulty adapter for %r' % data)

raise ValueError('Faulty adapter')

print("I won't get here")

sqlite3.register_adapter(str, faulty_adapter)

with sqlite3.connect(':memory:') as conn:

conn.execute('CREATE TABLE test(c INTEGER);')

# Faulty adapter in action

conn.executemany('INSERT INTO test VALUES(?);',

( ('a', ), ('1.2', ), ('3', ) ))

for col, ctype in conn.execute('SELECT c, typeof(c) FROM test;'):

print('%r is of type %s' % (col, ctype))

test_adapters_exceptions()Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

In this example, we registered the str data. This adapter prints the data it’s working on before raising a ValueError exception. As we can see from the function’s output,

Faulty adapter for 'a' Faulty adapter for '1.2' Faulty adapter for '3' 'a' is of type text 1.2 is of type real 3 is of type integer

the sqlite3 module, but then they are re-raised as an exception derived from the sqlite3.Error class.

The sqlite3 module, though, doesn’t catch exceptions from converters, nor does it re-raise them as a subclass of sqlite3.Error. In other words, protecting SQLite statement’s execution against sqlite3.Error exceptions won’t suffice.

import sqlite3

def test_converters_exceptions():

""" Faulty converters. """

def faulty_converter(data):

""" A converter raising an exception. """

print('Faulty converter')

raise ValueError('Faulty Converter')

print("I won't get here")

sqlite3.register_adapter(str, None)

sqlite3.register_converter('text', faulty_converter)

with sqlite3.connect(

':memory:',

detect_types = sqlite3.PARSE_DECLTYPES) as conn:

conn.execute('CREATE TABLE test(c TEXT);')

conn.executemany('INSERT INTO test VALUES(?);',

( ('a', ), ('b', ), ('c', ) ))

# Faulty converter in action

try:

for c in conn.execute('SELECT * FROM test;'):

print(c[0])

except sqlite3.Error:

print("This error handler won't suffice.")

except Exception as e:

print('This handler will be called instead.')

else:

print("Converters' exceptions aren't " \

"automatically caught")

test_converters_exceptions()In this example, we register TEXT. The TEXT, so upon insertion of 'a' into the sqlite3 module tries to call this converter, which in turn raises a ValueError exception. The test_converters_exceptions() function will print:

Faulty converter This handler will be called instead.

The except sqlite3.Error clause can’t catch the ValueError exception, so another handler is called instead.

Remarks on the csv Module

We have already discussed using Python to both read from a CSV file and write to a CSV file using the csv module. In this section we will simply address some issues you should be aware of when using the csv module. All our examples will avoid these problems by making the following assumptions on the data:

- We will assume that all CSV sources don’t have empty strings.

- We will assume that all SQLite tables don’t hold

Nonevalues. - We will assume that all records read from the CSV file have the same length, so we will use

csv.reader()andcsv.DictReader()without performing any check on the length of the records. - We will assume that all records written to a CSV file have the same length, so we will use

csv.writer()andcsv.DictWriter()without checking the records’ length.

When you write actual code, you should address all these potential issues with care.

Beware of Empty Strings

Both the csv.reader() function and the csv.DictReader() class treat empty string as zero-length TEXT data. If you want empty strings to mean NULL values, you have to map them yourself, like this:

import csv

def test_reading_empty_strings():

""" Reading empty strings from a CSV file. """

records = ( '1,a,b,c\n', '2,,b,c\n',

'3,a,,c\n', '4,a,b,\n' )

print("`reader` doesn't take care of empty strings...")

for record in csv.reader(records):

print(record)

print('... nor does `DictReader` ...')

for record in csv.DictReader(records,

fieldnames=('N', 'A', 'B', 'C')):

print('N={N!r}, A={A!r}, B={B!r}, C={C!r}'.format(**record))

print('... so we must convert them explicitly')

for record in csv.reader(records):

print( [i for i in map(

lambda x: None if not x else x, record)] )

test_reading_empty_strings()The output of the test_reading_empty_strings() function is self-explanatory:

reader doesn't take care of empty strings... ['1', 'a', 'b', 'c'] ['2', '', 'b', 'c'] ['3', 'a', '', 'c'] ['4', 'a', 'b', ''] ... nor does DictReader... N='1', A='a', B='b', C='c' N='2', A='', B='b', C='c' N='3', A='a', B='', C='c' N='4', A='a', B='b', C='' ... so we must convert them explicitly ['1', 'a', 'b', 'c'] ['2', None, 'b', 'c'] ['3', 'a', None, 'c'] ['4', 'a', 'b', None]

Beware of Short Records

Neither the csv.reader() function nor the csv.DictReader class will raise an exception if a record in the CSV file has fewer values than the header. csv.reader() returns all records as they are, while csv.DictReader pads them with the value of its None.

import csv

def test_too_short():

""" Reading records that are too short. """

records = ( '1,a,b,c\n', '2,a,b\n', '3,a\n', '4\n' )

print('`reader` just returns all records as they are...')

for r in csv.reader(records):

print(r)

print('... while `DictReader` sets to `None` all missing values...')

for r in csv.DictReader(records,

fieldnames=('N', 'A', 'B', 'C')):

print('N={N!r}, A={A!r}, B={B!r}, C={C!r}'.format(**r))

print('... or to the value of `restval`')

for r in csv.DictReader(records,

fieldnames=('N', 'A', 'B', 'C'), restval=''):

print('N={N!r}, A={A!r}, B={B!r}, C={C!r}'.format(**r))

test_too_short()The output of the test_too_short() function speaks volumes:

reader just returns all records as they are... ['1', 'a', 'b', 'c'] ['2', 'a', 'b'] ['3', 'a'] ['4'] ... while DictReader sets to None all missing values... N='1', A='a', B='b', C='c' N='2', A='a', B='b', C=None N='3', A='a', B=None, C=None N='4', A=None, B=None, C=None ... or to the value of restval N='1', A='a', B='b', C='c' N='2', A='a', B='b', C='' N='3', A='a', B='', C='' N='4', A='', B='', C=''

The csv.writer() function will not check the record’s length. The csv.DictWriter() class will pad them with 'strict', in which case it raises an exception.

Beware of Long Records

Neither the csv.reader() function nor the csv.DictReader class will raise an exception if a record in the CSV file has more values than the header. csv.reader() just returns the records as they are, while csv.DictReader puts all the trailing fields into a list under the

import csv

def test_too_long():

""" Reading a record that is too long. """

records = ( '1,a,b,c,d\n', '2,a,b,c,d,e\n', '3,a,b,c,d,e,f\n' )

print('`reader` just returns all records as they are...')

for r in csv.reader(records):

print(r)

print('... while `DictReader` ignores the trailing fields...')

for r in csv.DictReader(records,

fieldnames=('N', 'A', 'B', 'C')):

print('N={N!r}, A={A!r}, ' \

'B={B!r}, C={C!r}'.format(**r))

print('... which are really stored under the `restkey` key')

for r in csv.DictReader(records,

fieldnames=('N', 'A', 'B', 'C'),

restkey='R'):

print('N={N!r}, A={A!r}, ' \

'B={B!r}, C={C!r} R={R!r}'.format(**r))

test_too_long()The output of the test_too_long() function is straightforward:

reader just returns all records as they are... ['1', 'a', 'b', 'c', 'd'] ['2', 'a', 'b', 'c', 'd', 'e'] ['3', 'a', 'b', 'c', 'd', 'e', 'f'] ... while DictReader ignores the trailing fields... N='1', A='a', B='b', C='c' N='2', A='a', B='b', C='c' N='3', A='a', B='b', C='c' ... which are really stored under the restkey key N='1', A='a', B='b', C='c' R=['d'] N='2', A='a', B='b', C='c' R=['d', 'e'] N='3', A='a', B='b', C='c' R=['d', 'e', 'f']

The csv.writer() function won’t check the length of the records, while the csv.DictWriter() class will ignore the all extra fields, unless its 'strict', in which case it will raise an exception.

Trying Different Approaches

In the remaining sections we will demonstrate how to exchange data between a CSV file and a SQLite database using a couple different approaches:

- When trying to adapt data from a CSV source:

- we will guess the data type from the CSV header.

- we will guess the data type from the data itself, either by devising custom adapters, or by using SQLite literals.

- When trying to convert data from a SQLite database:

- we will use column names to pick a converter.

- we will use the quote() function to convert data on a per-value basis.

All sample data and the Python source code for this tutorial can be downloaded here. Without further ado, let’s get to it.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

Converting CSV to SQLite Using CSV Headers

In this section we will try to detect data types using the header of a CSV file. The header is the first row of a CSV file, and is used to hold the column names. The csv.DictReader() tries to auto-detect the header unless a

Defining our Sample

The sample data for both the employees.csv and the employees-header.csv files follow this schema:

| Field Name | Python Class | SQLite Type | Description |

|---|---|---|---|

| name | Name |

TEXT NOT NULL | The employee's name. |

| contract | Contract |

TEXT NOT NULL | The employee's hiring date, in YYYY-MM-DD format. |

| wage | Wage |

INTEGER NOT NULL | The monthly wage, in dollars. |

EMail |

TEXT NOT NULL | The employee's e-mail address. | |

| signature | Signature |

BLOB NOT NULL | The employee's digital signature. |

The Python Class column refers to the name of the class representing the data type in our example. The SQLite Type is the column declaration for the employees.db3 and employees-header.db3 SQLite databases. For convenience, here are the contents of the employees-header.csv file:

| Name | Contract | Wage | Signature | |

|---|---|---|---|---|

| Jim Ure | 2017-02-17 | $2900 | jim.ure@zahoo.ccc | x'dd349bddfe' |

| Brian White | 2007-06-03 | $2300 | brian.white@slowmail.zzz | x'c87f6f52f1' |

| Noah Cave | 2010-01-09 | $2800 | noah.cave@saturn.sat | x'520a8d4344' |

| Mark Bellamy | 2002-04-08 | $3000 | mark.bellamy@jupiter.yyy | x'76077d03a3' |

| Jodie Grant | 2004-06-26 | $4800 | jodie.grant@zahoo.ccc | x'15fa119ec9' |

| Frank Collins | 2008-10-11 | $2700 | frank.collins@zahoo.ccc | x'c2c0596f05' |

| George Sayer | 2001-12-25 | $4700 | george.sayer@jupiter.yyy | x'95635eeac0' |

| Bob Ferry | 2013-04-20 | $4400 | bob.ferry@saturn.sat | x'47e3422439' |

| Paul Cetera | 2001-07-08 | $4900 | paul.cetera@mercury.ccc | x'1655612512' |

| Aaron Hadley | 2005-11-02 | $3800 | aaron.hadley@saturn.sat | x'c5de069978' |

Reading Data from the CSV File

Though the RFC dialect of CSV doesn’t give any meaning to headers, in the next example we will match headers to the Python objects that represents them. After this, these objects will be adapted to SQLite types automatically by their respective __conform__() methods, as we have already seen in our tutorial on converting SQLite data types for Python.

In the next example, first we will define all classes needed to represent our data types, then we will call the test_adapting_by_header() function to read the CSV file, and load its data to the

import sqlite3

import csv

import datetime

class Name:

""" An employee's name. """

def __init__(self, name):

self.name = name

def __str__(self):

return self.name

@staticmethod

def from_literal(literal):

return Name(literal)

@staticmethod

def convert(data):

return Name(str(data, encoding='utf-8'))

def __conform__(self, protocol):

""" A name will be of SQLite TEXT type. """

if protocol == sqlite3.PrepareProtocol:

return self.name

class Contract:

""" An employee's contract. """

def __init__(self, date):

self.date = date

def __str__(self):

return self.date.isoformat()

@staticmethod

def from_literal(literal):

return Contract(datetime.date(

*map(int, literal.split('-'))))

@staticmethod

def convert(data):

return Contract(datetime.date(

*map(int, data.split(b'-'))))

def __conform__(self, protocol):

""" A date will be of SQLite TEXT type. """

if protocol == sqlite3.PrepareProtocol:

return str(self.date)

class Wage:

""" An employee's monthly wage. """

def __init__(self, wage):

self.wage = wage

def __str__(self):

return "$%d" % self.wage

@staticmethod

def from_literal(literal):

""" Strips the leading `$` symbol and convert to `int`. """

return Wage(int(literal[1 : ]))

@staticmethod

def convert(data):

return Wage(int(data))

def __conform__(self, protocol):

""" A wage will be of SQLite INTEGER type. """

if protocol == sqlite3.PrepareProtocol:

return self.wage

class EMail:

""" An e-mail address. """

def __init__(self, email):

self.email = email

def __str__(self):

return self.email

@staticmethod

def from_literal(literal):

return EMail(literal)

@staticmethod

def convert(data):

return EMail(str(data, encoding='utf-8'))

def __conform__(self, protocol):

""" An e-mail address will be of SQLite TEXT type. """

if protocol == sqlite3.PrepareProtocol:

return self.email

class Signature:

""" An employee's digital signature. """

def __init__(self, signature):

self.signature = signature

def __str__(self):

return "x'%s'" % (''.join(

map(lambda x: "%02x" % x, self.signature)))

@staticmethod

def from_literal(literal):

""" Convert to `bytes` object. """

return Signature(bytes.fromhex(literal[2 : -1]))

@staticmethod

def convert(data):

return Signature(data)

def __conform__(self, protocol):

""" A signature will be of SQLite BLOB type. """

if protocol == sqlite3.PrepareProtocol:

return self.signature

def test_adapting_by_header():

""" Adapting CSV data by header. """

fieldnames_map = {

'name': Name, 'contract' : Contract,

'wage': Wage, 'email' : EMail,

'signature': Signature }

def match_header_to_class(field_name, data):

""" Builds a class from the field name. """

try:

return fieldnames_map[field_name.lower()].from_literal(data)

except KeyError:

raise ValueError('Invalid field name %s' % field_name)

with open('employees-header.csv', 'rt',

newline='', encoding='utf-8') as src, \

sqlite3.connect('employees-header.db3') as conn:

conn.execute(

'CREATE TABLE IF NOT EXISTS employees ('\

'name TEXT NOT NULL,' \

'contract DATE NOT NULL,' \

'wage INTEGER NOT NULL, ' \

'email TEXT NOT NULL, '\

'signature BLOB);')

conn.execute('DELETE FROM employees;')

conn.executemany('INSERT INTO employees VALUES' \

'(:name, :contract, :wage, :email, :signature);',

( { name.lower() : match_header_to_class(name, record[name]) \

for name in record } \

for record in csv.DictReader(src) ))

for record in conn.execute(

'SELECT name, typeof(name), ' \

'contract, typeof(contract), ' \

'wage, typeof(wage), ' \

'email, typeof(email), ' \

'quote(signature), typeof(signature) ' \

'FROM employees;'):

print(','.join(map(str, record)))

for name, contract, wage, email, signature in conn.execute(

'SELECT * FROM employees;'):

print('%s has been an employee since %s and earns $%d per month.' \

% (name, contract, wage))

test_adapting_by_header()Let’s take a look at the classes first. We provide a Python class for each column of the SQLite table. Our classes have five methods. The only methods we should care about right now are:

- the

__conform__(), which adapts each object into one of the SQLite native types, according to the SQLite Type column of the table above. - the

from_literal()method, which accepts a CSV field and returns an instance of the class.

If you want, each class can be enhanced with additional methods. For example, you can provide a validate() method to check if the contents of the CSV field is valid, or you can do additional string formatting. We’re not going to get into that right now, though. Instead, let’s take a look at the test_adapting_by_header() function:

- The

filednames_map is a dictionary mapping column names to their respective Python classes, according to the table above. - The

match_header_to_class(field_name, data)function usesfilednames_map to get the Python class from the column name. It creates an instance of the class usingdata . - After opening both the source CSV file and an in-memory database, we create a new

employees table using the schema described above. - Then, we read data from the source file using the

csv.DictReader()class. We’ve described this class in great detail in our reading CSV files tutorial. Basically, this class reads each record from the file as a Pythondictobject, whose keys are the headers of the CSV file, and the values are the contents of each field in the record. Since we want to map the column names to the Python objects that represents the contents of the field, we use thematch_header_to_class()function to build the respective Python object. Notice all names are mapped in a case-insensitive way, so if you use Name in the header of the CSV file, it will be converted to lowercase before being inserted into the row’s dictionary. - At this point, we could perform some operations on the data (e.g. validation, formatting). Here, instead, we load the Python object directly in the SQLite database using an

INSERTstatement. That’s where the magic happens. Before calling theexecutemany()function, thesqlite3module implicitly adapts our objects into SQLite types by calling their__conform__()methods. Then, theexecutemany()function will match each column name to a field in the record from theDictReaderclass by using named placeholders. - Now that our data have been loaded into the

employees table in our database, we can query it using theSELECTstatement.

The output of the first SELECT statement will be (formatted as a table):

| name | typeof (name) | contract | typeof (contract) | wage | typeof (wage) | typeof (email) | signature | typeof (signature) | |

|---|---|---|---|---|---|---|---|---|---|

| Jim Ure | text | 2017-02-17 | text | 2900 | integer | jim.ure@zahoo.ccc | text | X'DD349BDDFE' | blob |

| Brian White | text | 2007-06-03 | text | 2300 | integer | brian.white@slowmail.zzz | text | X'C87F6F52F1' | blob |

| Noah Cave | text | 2010-01-09 | text | 2800 | integer | noah.cave@saturn.sat | text | X'520A8D4344' | blob |

| Mark Bellamy | text | 2002-04-08 | text | 3000 | integer | mark.bellamy@jupiter.yyy | text | X'76077D03A3' | blob |

| Jodie Grant | text | 2004-06-26 | text | 4800 | integer | jodie.grant@zahoo.ccc | text | X'15FA119EC9' | blob |

| Frank Collins | text | 2008-10-11 | text | 2700 | integer | frank.collins@zahoo.ccc | text | X'C2C0596F05' | blob |

| George Sayer | text | 2001-12-25 | text | 4700 | integer | george.sayer@jupiter.yyy | text | X'95635EEAC0' | blob |

| Bob Ferry | text | 2013-04-20 | text | 4400 | integer | bob.ferry@saturn.sat | text | X'47E3422439' | blob |

| Paul Cetera | text | 2001-07-08 | text | 4900 | integer | paul.cetera@mercury.ccc | text | X'1655612512' | blob |

| Aaron Hadley | text | 2005-11-02 | text | 3800 | integer | aaron.hadley@saturn.sat | text | X'C5DE069978' | blob |

As we can see from the SELECT statement is presented below. Notice the values of

Jim Ure has been an employee since 2017-02-17 and earns $2900 per month. Brian White has been an employee since 2007-06-03 and earns $2300 per month. Noah Cave has been an employee since 2010-01-09 and earns $2800 per month. Mark Bellamy has been an employee since 2002-04-08 and earns $3000 per month. Jodie Grant has been an employee since 2004-06-26 and earns $4800 per month. Frank Collins has been an employee since 2008-10-11 and earns $2700 per month. George Sayer has been an employee since 2001-12-25 and earns $4700 per month. Bob Ferry has been an employee since 2013-04-20 and earns $4400 per month. Paul Cetera has been an employee since 2001-07-08 and earns $4900 per month. Aaron Hadley has been an employee since 2005-11-02 and earns $3800 per month.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

Writing Data to the CSV File

Now that our employees-header.csv file, we want to write all data from this table in our database to a new employees-header-out.csv file. That’s what converters are for. The following example requires all classes defined in the previous section.

import sqlite3

import csv

import datetime

def test_converting_by_header():

""" Convert data from the `employees` table. """

# Register converters

sqlite3.register_converter('Name', Name.convert)

sqlite3.register_converter('Contract', Contract.convert)

sqlite3.register_converter('Wage', Wage.convert)

sqlite3.register_converter('EMail', EMail.convert)

sqlite3.register_converter('Signature', Signature.convert)

with open('employees-header.csv', 'rt',

newline='', encoding='utf-8') as src, \

open('employees-header-out.csv', 'wt+',

newline='', encoding='utf-8') as dst, \

sqlite3.connect(

'employees-header.db3',

detect_types=sqlite3.PARSE_COLNAMES) as conn:

# Write all data to `employees-header-out.csv'

writer = csv.writer(dst)

writer.writerow(

('Name', 'Contract', 'Wage', 'EMail', 'Signature'))

writer.writerows(

conn.execute(

'SELECT name AS "name [Name]", ' \

'contract AS "contract [Contract]", ' \

'wage AS "wage [Wage]", ' \

'email AS "email [EMail]",' \

'signature AS "signature [Signature]" ' \

'FROM employees;'))

# Compare `employees-header.csv` to `employees-header-out.csv`:

dst.seek(0)

for line1, line2 in zip(src, dst):

assert line1.rstrip() == line2.rstrip()

test_converting_by_header()Let’s see what happens inside the test_converting_by_header() function:

- First, we register a converter function for each data type. The converter are implemented as the

convert method of the corresponding Python class. Notice that these method are decorated with the@staticmethodfunction, which prevents the instance of the object from being passed as the first argument when calling the method. That’s the reason why none of the converters haveself as the first parameter. Each converter accepts abyteobject, and returns their respective Python class. - Then, we create the database connection setting the

detect_types parameter tosqlite3.PARSE_COLNAMESparameter. This allows the converter to be mapped to table columns by name. - We write the CSV header using the

writer.writerow()method. - Then, we read all data from the

employees table using aSELECTstatement. For each column, the corresponding converter is called, e.g.name AS "name [Name]"calls the converter registered asName, that is,Name.convert. This step converts SQLite types into Python objects. - The cursor returned by

conn.execute()is fed towriter.writerows(), which implicitly calls the__str__()method of each object created in the previous step, e.g. it callsName.__str__(). After this step, all Python objects are converted into strings, which can be written to theemployees-header-out.csvfile. - Finally, we rewind the

dst file, and we compare it line by line to thesrc file. AnAssertionErrorexception will be raised if these two files are not equal.

The approach we just described proves more useful when we want to perform some operation on the Python objects before writing them to the CSV file. If we don’t need the intermediate Python objects, we can convert directly to the literal representation of the data, as we can see from the following example:

import sqlite3

import csv

def test_direct_conversion_by_header():

""" Converting directly from SQLite data to CSV data. """

# Register converters

sqlite3.register_converter('Name',

lambda name : str(name, encoding='utf-8'))

sqlite3.register_converter('Contract',

lambda date : str(date, encoding='utf-8'))

sqlite3.register_converter('Wage',

lambda wage : "$%d" % int(wage))

sqlite3.register_converter('EMail',

lambda email : str(email, encoding='utf-8'))

sqlite3.register_converter('Signature',

lambda signature : "x'%s'" % (''.join(

map(lambda x: "%02x" % x, signature))))

with open('employees-header.csv', 'rt',

newline='', encoding='utf-8') as src, \

open('employees-header-direct.csv', 'wt+',

newline='', encoding='utf-8') as dst, \

sqlite3.connect(

'employees-header.db3',

detect_types=sqlite3.PARSE_COLNAMES) as conn:

# Write all data to `employees-header-out.csv`

writer = csv.writer(dst)

writer.writerow(

('Name', 'Contract', 'Wage', 'EMail', 'Signature'))

writer.writerows(

conn.execute(

'SELECT name AS "name [Name]", ' \

'contract AS "contract [Contract]", ' \

'wage AS "wage [Wage]", ' \

'email AS "email [EMail]",' \

'signature AS "signature [Signature]" ' \

'FROM employees;'))

# Compare `employees-header.csv` to `employees-header-direct.csv`:

dst.seek(0)

for line1, line2 in zip(src, dst):

assert line1.rstrip() == line2.rstrip()

test_direct_conversion_by_header()The code in this example closely mirrors the first example; the only difference being on the converters. In this example, we use a set of lambda functions, which works just like the __str__ methods of the corresponding Python classes.

Let’s recap what we have achieved in this section:

- Reading from a CSV file:

- We assigned an appropriate Python class to each column of data from the CSV

employees-header.csvfile using the file’s header. This is the part often overlooked in tutorials where simple “CSV to SQLite Python” solutions are provided. - The class converts each CSV field to an instance of the class using the

from_literal()method. - The instance of the class is converted to a SQLite type before being inserted into the database using the

__conform__()method, which is called implicitly by thesqlite3module. - Now, all data resides in the

employees table of theemployees-header.db3database.

- We assigned an appropriate Python class to each column of data from the CSV

- Writing to a CSV file:

- We match all column aliases from the

SELECTstatement to a converter. - The

sqlite3module implicitly converts all SQLite types intobytesobjects. - Then, it calls the appropriate

convert()method, which creates an instance of the class. - Before writing a record to a CSV file, the

writerows()method implicitly calls the__str__()method of the object. - If we are not interested in the intermediate Python objects, we can register a set of converters which maps SQLite types directly into CSV fields.

- We match all column aliases from the

Converting CSV to SQLite Using Data Literals

In this example, we will try to detect the CSV data type from data literals. This is a fairly common task. As a matter of fact, Microsoft Excel and LibreOffice Calc try to do just that when reading data from a CSV file. Whereas in the “using headers” example we read the first row from the CSV to detect the header, here we will use pattern matching, but only in the first row. We will assume that all values from the same column are of the same type. The employees.csv file will be the CSV source for our example. It is the same as employees-header.csv, except for the fact that it lacks the header row.

Data Literals

In this section we will define the syntax for our data literals. Then, in the next section we will use

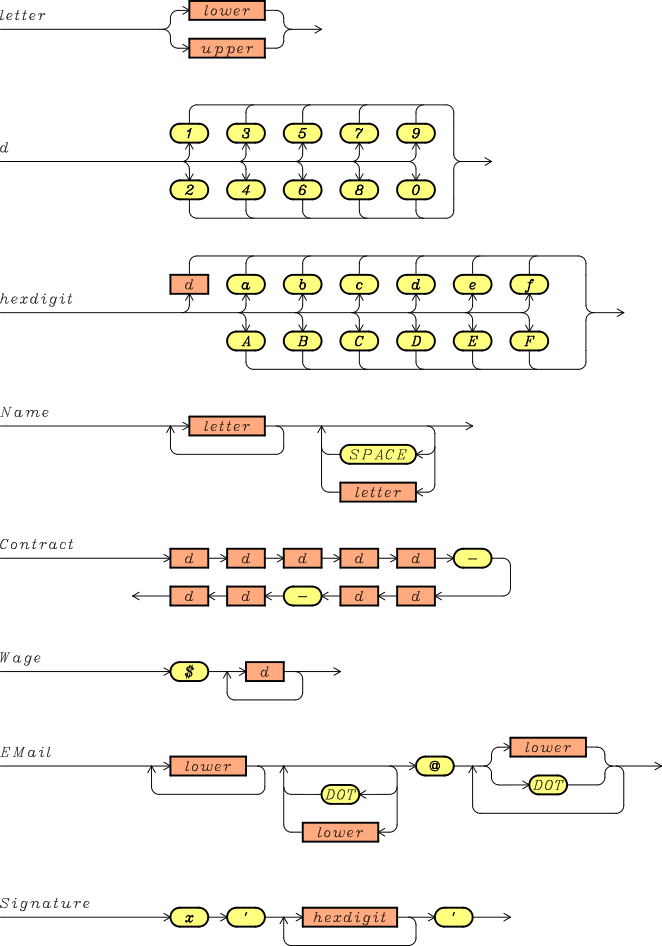

Let’s have a look at these rules:

- a

letter is an uppercase or lowercase alphabetic character from the ASCII character set. - the

d rule represents all decimal digits. - the

hexdigits rule stands for all hexadecimal digits. - all rules for data literals have the same name as the classes to which they belong, e.g. the Name rule defines the literal for the

Name class. - a

Name can contain both letters and spaces, but it starts with a letter. - a

Contract is an ISO date, using the YYYY-MM-DD format. - a

Wage is a currency, with the$character followed by at least one digit (and hopefully more). - the

EMail rule stands for an e-mail address, with the username and the domain separated by an@character. - the

Signature rule represents a sequence of hexadecimal digits, enclosed between'(single quotation character) and prefixed byx.

To keep things simple, there are a few reasonable restrictions that are not enforced by these syntax diagrams, nor by the regular expressions that matches them:

- a

Name value shouldn’t have trailing spaces. - we should check that year, month, and day of

Contract value make sense, e.g.0000-99-34is a syntactically valid date, but it doesn’t exist. - usually currencies have a

,separator every three digits from the right, e.g.$4,000. We won’t use any separator. - the

EMail grammar rule allows for invalid e-mail addresses, e.g.a.@domain.. A real application should use a more precise grammar. - a

Signature syntax should check that the number of hexadecimal digits is even.

Reading Data from the CSV File

Before showing our algorithm, we will describe the type detection function, and we will run it on all values from the employees.csv file, just to make sure we got it right.

import sqlite3

import csv

import re

REGEX_NAME = re.compile(r'^[a-zA-Z]+[a-zA-Z ]*$')

REGEX_CONTRACT = re.compile(r'^\d\d\d\d-\d\d-\d\d$')

REGEX_WAGE = re.compile(r'^\$\d+$')

REGEX_EMAIL = re.compile(r'^[a-z]+[.a-z]*@[a-z.]+$')

REGEX_SIGNATURE = re.compile(r"^x'[\da-zA-Z]+'$")

REGEX_MAP = {

'name': REGEX_NAME,

'contract' : REGEX_CONTRACT,

'wage' : REGEX_WAGE,

'email' : REGEX_EMAIL,

'signature' : REGEX_SIGNATURE }

def detect_type_from_literal(literal):

""" Detect type from literals. """

for ctype in REGEX_MAP:

match = REGEX_MAP[ctype].match(literal)

if match:

return ctype

raise ValueError(

"Couldn't detect a data type for %s" % literal)

def test_detect_type_from_literals():

""" Prints the type of each value from `employee.csv`. """

with open('employees.csv',

newline='', encoding='utf-8') as src:

for record in csv.reader(src):

print(','.join([ ','.join((value, ctype)) \

for value, ctype \

in zip(record, map(detect_type_from_literal, record)) ]))

test_detect_type_from_literals()Let’s see what’s going on in this Python code snippet:

- First, we define a regular expression (regex) for each data literal. The Python standard library provides support for regex via

remodule. We won’t explain what each regex means. It suffices to say that:- Each regex closely mirrors the syntax rules that we have seen above.

- We build a regex object with the

re.compile(regex)function. - A string can be matched against a regex with the

r.match(sample)method, wherer is a compiled regex object andsample is the text to search. It returnsNoneif there is no match, otherwise it returns an object whosestring attribute represents the substring matched.

- the

detect_type_from_literal()tries to match itsliteral parameter to one of the regex. If successful, it returns a string representing the data type, otherwise it raises aValueErrorexception. - the

test_detect_type_from_literals()function reads all records from theemployees.csvfile, and detects the type of each value. Themap()function applies the detection algorithm to each value of a CSV record. Thezip()function creates an iterator of (value, type) tuples. The innerjoin()method turns each tuple in “value,type” strings. The outerjoin()concatenates all strings in the record.

The output of the test_detect_type_from_literals() function is presented below, formatted as a table:

| Name | Type | Contract | Type | Wage | Type | Type | Signature | Type | |

|---|---|---|---|---|---|---|---|---|---|

| Jim Ure | name | 2017-02-17 | contract | $2900 | wage | jim.ure@zahoo.ccc | x'dd349bddfe' | signature | |

| Brian White | name | 2007-06-03 | contract | $2300 | wage | brian.white@slowmail.zzz | x'c87f6f52f1' | signature | |

| Noah Cave | name | 2010-01-09 | contract | $2800 | wage | noah.cave@saturn.sat | x'520a8d4344' | signature | |

| Mark Bellamy | name | 2002-04-08 | contract | $3000 | wage | mark.bellamy@jupiter.yyy | x'76077d03a3' | signature | |

| Jodie Grant | name | 2004-06-26 | contract | $4800 | wage | jodie.grant@zahoo.ccc | x'15fa119ec9' | signature | |

| Frank Collins | name | 2008-10-11 | contract | $2700 | wage | frank.collins@zahoo.ccc | x'c2c0596f05' | signature | |

| George Sayer | name | 2001-12-25 | contract | $4700 | wage | george.sayer@jupiter.yyy | x'95635eeac0' | signature | |

| Bob Ferry | name | 2013-04-20 | contract | $4400 | wage | bob.ferry@saturn.sat | x'47e3422439' | signature | |

| Paul Cetera | name | 2001-07-08 | contract | $4900 | wage | paul.cetera@mercury.ccc | x'1655612512' | signature | |

| Aaron Hadley | name | 2005-11-02 | contract | $3800 | wage | aaron.hadley@saturn.sat | x'c5de069978' | signature |

We can see from this table that all values from the CSV file are matched correctly to their data type. Now, it’s time to take advantage of this detection algorithm to convert our CSV file to a SQLite database table while retaining the appropriate data types for each CSV column.

In the following example we will use all regexes, the detect_type_from_literal() defined above, as well as all classes from our previous example.

import sqlite3

import csv

import re

import datetime

def test_adapting_by_data_literal():

""" Adapting using data literals. """

fieldnames_map = {

'name': Name, 'contract' : Contract,

'wage': Wage, 'email' : EMail,

'signature': Signature }

def match_header_to_class(field_name, data):

""" Builds a class from the field name. """

try:

return fieldnames_map[field_name.lower()].from_literal(data)

except KeyError:

raise ValueError('Invalid field name %s' % field_name)

with sqlite3.connect('employees.db3') as conn, \

open('employees.csv', 'rt',

encoding='utf-8', newline='') as src:

# Read just the first row

reader = iter(csv.reader(src))

record = next(reader)

header = [ c for c in map(detect_type_from_literal, record) ]

# Create table

conn.execute(

'CREATE TABLE IF NOT EXISTS employees ('\

'name TEXT NOT NULL,' \

'contract DATE NOT NULL,' \

'wage INTEGER NOT NULL, ' \

'email TEXT NOT NULL, '\

'signature BLOB);')

# Delete previous data, if any

conn.execute('DELETE FROM employees;')

# Insert data from the `employees.csv` file

conn.executemany('INSERT INTO employees VALUES' \

'(:name, :contract, :wage, :email, :signature);',

( { name.lower() : match_header_to_class(name, record[name]) \

for name in record } \

for record in csv.DictReader(src, fieldnames=header) ))

for record in conn.execute(

'SELECT name, typeof(name), ' \

'contract, typeof(contract), ' \

'wage, typeof(wage), ' \

'email, typeof(email), ' \

'quote(signature), typeof(signature) ' \

'FROM employees;'):

print(','.join(map(str, record)))

for name, contract, wage, email, signature in conn.execute(

'SELECT * FROM employees;'):

print('%s has been an employee since %s and earns $%d per month.' \

% (name, contract, wage))

test_adapting_by_data_literal()You’ll notice the test_adapting_by_data_literal() function looks very similar to the test_adapting_by_header() function we described above), with the following differences:

- After creating the database connection and opening the CSV source file, we read the first row from the file with

next(reader)command. - Then, we create the header of the file, mapping each field of the first row to its type with the

detect_type_from_literal()function. Theheader will be set to: name, contract, wage, email, and signature. - We then immediately rewind the

src , otherwise we would skip the first row. - When loading data into the

employees table with theINSERTstatement, we call thecsv.DictReader()class with an additional parameter,fieldnames . This way the reader will use theheader list as header for the CSV file, instead of trying to detect the header from the first row of the input.

The remaining code is copied verbatim from the test_adapting_by_header() function above. This is acceptable since this approach only affects the way we map columns into their adapters, not the definition of the adapters. Once all data have been loaded into the database, we will use the same approach that we have seen previously to map the converters to the column names.

Let’s recap the process of reading a CSV file using this approach:

- We assigned an appropriate Python class to each column of data from the CSV

employees.csvfile using a type detection algorithm on the first row of data. - The class converts each CSV field to an instance of the class using the

from_literal()method. - The instance of the class is converted to a SQLite type before being inserted into the database using the

__conform__()method, which is called implicitly by thesqlite3module.

All in all, this approach makes it easy to add support for new types, since you only need to:

- define a class representing the data type, providing all methods described above.

- compile a regular expression to match the literal representation of the data, and add it to the

REGEX_MAP, so that thedetect_type_from_literal()function will try that, too. - register a converter for the new data type.

Converting CSV to SQLite Using SQLite Literals

Our last approach uses SQLite literals as a means of exchanging data between a CSV file and a SQLite database. Take a look at this tutorial if you need a refresher about SQLite literals. As we will see, this is by far the easiest approach to implement. On the flip side, it is not really flexible, since we have only five distinct literals, and we can’t customize them as we see fit. The key points of this approach are:

- It resembles our “using literals” example because it infers a value’s type from its literal, but it does that on a per-value basis rather than on a per-column basis.

- It doesn’t require adapters, since data are replaced directly in the

INSERTstatement, rather than using?placeholders. - It doesn’t require converters, since the built-in

quote()scalar function takes care of the conversion for us. The conversion into SQLite literals is performed on a per-value basis, rather than on a per-column basis.

Reading Data from the CSV File

In this example, we will use the csv.reader() function to get all records from the CSV file, and then we will replace each field of the record directly into the SELECT statement by using %s placeholders. Beware that this approach assumes all fields hold valid SQLite literals. If that’s not true, you can run into serious trouble while trying to load your data into the database. A more robust approach should check all literals (e.g. by using regular expressions) before replacing them into an INSERT statement.

Our sample data is located in the sqlite-literals.csv file, containing the following records:

| i | r | t | b |

|---|---|---|---|

| -55 | 8.095 | 'zicfa' | X'CE5FC7FD792D60' |

| -11 | 4.915 | 'kepxo' | X'242B4AE0EF5327DB42' |

| 41 | 67.068 | 'etbpu' | X'B7FEFC62C9E749B0' |

| -79 | 8.143 | 'kzkt"' | X'33D4FDEAAA5BFB' |

| 91 | 6.456 | 'cwfqu' | X'653C75E1B9AFE69F' |

| -33 | -74.482 | 'ofvuo' | X'82BA8C36E4' |

| -11 | 27.716 | 'zoki,' | X'1076A53C3CD8BA41' |

| 48 | -31.024 | 'm"np"' | X'1FE3B587BF742A7E' |

| -51 | 93.216 | ' ''ehy' | X'7AB0232FF929E8C04300' |

| -36 | 46.934 | 'es''fg' | X'AD6071FCF6' |

In the following example we will create the sqlite-literals.db3 database to store all data from the CSV source file.

import sqlite3

import csv

def test_read_sqlite_literals():

""" Read SQLite literals from a CSV file. """

sqlite3.register_adapter(str, None)

with sqlite3.connect('sqlite-literals.db3') as conn, \

open('sqlite-literals.csv', 'rt',

encoding='utf-8', newline='') as src:

conn.execute('CREATE TABLE IF NOT EXISTS test(' \

'i INTEGER, r REAL, t TEXT, b BLOB);')

conn.execute('DELETE FROM test;');

for i, r, t, b in csv.reader(src):

conn.execute(

'INSERT INTO test VALUES (%s, %s, %s, %s);' \

% (i, r, t, b))

for record in conn.execute('SELECT i, typeof(i), ' \

'r, typeof(r), t, typeof(t), ' \

'b, typeof(b) FROM test;'):

print(';'.join(map(str, record)))

test_read_sqlite_literals()The test_read_sqlite_literals() example is really simple:

- We delete any previously registered adapter for the

strobject, by setting it toNone. We do this because we want to make sure the CSV fields aren’t altered in any way. - We create the

test table, which will hold all data from the CSV file. - We delete all data from the table just in case we have already executed this Python script before.

- For each record in the CSV file, we replace its fields directly in the

INSERTstatement. We can do that because we assumed all fields are valid SQLite literals. - Finally, we print each column from the

test table, and their respective data type.

The output of the test_read_sqlite_literals() is (formatted as a table):

| i | type | r | type | t | type | b | type |

|---|---|---|---|---|---|---|---|

| -55 | integer | 8.095 | real | zicfa | text | b'\xce_\xc7\xfdy-`' | blob |

| -11 | integer | 4.915 | real | kepxo | text | b"$+J\xe0\xefS'\xdbB" | blob |

| 41 | integer | 67.068 | real | etbpu | text | b'\xb7\xfe\xfcb\xc9\xe7I\xb0' | blob |

| -79 | integer | 8.143 | real | kzkt"" | text | b'3\xd4\xfd\xea\xaa[\xfb' | blob |

| 91 | integer | 6.456 | real | cwfqu | text | b'e<u\xe1\xb9\xaf\xe6\x9f' | blob |

| -33 | integer | -74.482 | real | ofvuo | text | b'\x82\xba\x8c6\xe4' | blob |

| -11 | integer | 27.716 | real | zoki, | text | b'\x10v\xa5<<\xd8\xbaA' | blob |

| 48 | integer | -31.024 | real | m""np"" | text | b'\x1f\xe3\xb5\x87\xbft*~' | blob |

| -51 | integer | 93.216 | real | "ehy | text | b'z\xb0#/\xf9)\xe8\xc0C\x00' | blob |

| -36 | integer | 46.934 | real | es'fg | text | b'\xad`q\xfc\xf6' | blob |

As you can see from the even columns of the output (the columns labeled “type”), all SQLite literals have been adapted to their respective SQLite native type. The same would be true even if we inserted values of different types under the same column, since both adaptions and conversions are applied in a per-value basis.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.

Writing Data to the CSV File

Writing data to a CSV file as a SQLite literal is really straightforward thanks to the predefined scalar function quote(column), which returns the literal representation of the values in quote() function works on a small sample:

import sqlite3

def test_quote():

""" Test on the `quote()` scalar function. """

with sqlite3.connect(':memory:') as conn:

conn.execute('CREATE TABLE test(c);')

conn.executemany('INSERT INTO test VALUES(?);',

( (None, ), (12, ), (3.4, ),

("text'data", ), (b'blob-data', ) ))

for record in conn.execute(

'SELECT c, typeof(c), quote(c) FROM test;'):

print(','.join(map(str, record)))

test_quote()The test_quote() function creates an in-memory database holding the typeof()), and the SQLite literal (provided by quote()). Its output is presented below, formatted as table:

| Python Value | SQLite Type | SQLite Literal |

|---|---|---|

| None | null | NULL |

| 12 | integer | 12 |

| 3.4 | real | 3.4 |

| text'data | text | 'text''data' |

| b'blob-data' | blob | X'626C6F622D64617461' |

Now that we know how the quote() function works, let’s use it to write the contents of the sqlite-literals.db3 database to a CSV file:

import sqlite3

import csv

def test_write_sqlite_literals():

""" Write SQLite literals to a CSV file. """

with sqlite3.connect('sqlite-literals.db3') as conn, \

open('sqlite-literals.csv', 'rt',

encoding='utf-8', newline='') as src, \

open('sqlite-literals-out.csv', 'wt+',

encoding='utf-8', newline='') as dst:

csv.writer(dst).writerows(

conn.execute('SELECT quote(i), quote(r), ' \

'quote(t), quote(b) FROM test;'))

# Compare `sqlite-literals.csv` to `sqlite-literals-out.csv`:

dst.seek(0)

for line1, line2 in zip(src, dst):

assert line1.rstrip() == line2.rstrip()

test_write_sqlite_literals()The test_write_sqlite_literals() function uses the SELECT statement to retrieve all data from the quote() function to convert them to SQLite literals. Then, the cursor returned by the conn.execute() method is fed to the writerows() method, which writes all literals to the sqlite-literals-out.csv file. Finally, we rewind the sqlite-literals.csv file, which we used in the previous section to create the AssertionError exception will be raised if at least one line from

Closing Thoughts

This tutorial was about exchanging data between a CSV file and a SQLite database. CSV files treat all values like plain text, while a SQLite database can store five different data types. The sqlite3 module provides adapters and converters to map arbitrary Python classes to SQLite types, but we must find a way to choose the proper adapter for the data in the CSV file.

We described two approaches to solve this problem: (1) by using the file header, and (2) by detecting the data type from the data literals. Once the data has been loaded into the SQLite table, we use converters to map them back into CSV fields.

Finally, we described how to read and store data using SQLite literals. This approach is really straightforward, but can also be error-prone if we don’t properly check the data before inserting it into the database. This is the most common way to convert CSV data to a SQLite database table, but the issue with this approach is that we can’t extend it to new data types.

Did you find this free tutorial helpful? Share this article with your friends, classmates, and coworkers on Facebook and Twitter! When you spread the word on social media, you’re helping us grow so we can continue to provide free tutorials like this one for years to come.

Code More, Distract Less: Support Our Ad-Free Site

You might have noticed we removed ads from our site - we hope this enhances your learning experience. To help sustain this, please take a look at our Python Developer Kit and our comprehensive cheat sheets. Each purchase directly supports this site, ensuring we can continue to offer you quality, distraction-free tutorials.