Face recognition is the process of identifying or verifying a person’s identity based on their facial features. It is a useful and popular task in computer vision, with many applications in security, biometrics, entertainment, social media, and more.

The Python Deepface library implements many state-of-the-art facial recognition algorithms that you can use out of the box, such as VGG-Face, Facenet, OpenFace, and ArcFace.

In this tutorial, you will learn how to use the Deepface library to perform face recognition with Python. We’re going to show you how to:

- Recognize faces by comparing two images.

- Recognize multiple faces from an image.

- Find matching faces from all the images in a directory.

- Extract facial embeddings for different deep-learning algorithms.

Installing and Importing Required Libraries

You can install the deepface library with the following pip command. The leading ! runs it from a notebook cell, such as in Google Colab; omit it in a terminal.

```
!pip install deepface
```

Next, we will import the libraries we’ll use in this tutorial. If you use the Google Colab environment, you won’t have to install any library except for deepface.

```python
from deepface import DeepFace
import matplotlib.pyplot as plt
import matplotlib.patches as patches
import matplotlib.image as mpimg
import os
```

Matching Faces

Let’s first see how to match faces and check whether they belong to the same person. We’ll start by matching two images that each contain a single face, and then we’ll learn how to recognize faces in images that contain multiple faces.

Matching a Single Face

The DeepFace.verify() method allows you to match faces in two images. You must pass the first and second image paths to the img1_path and img2_path parameters, respectively.

By default, the DeepFace.verify() method uses the VGG-Face model with the cosine distance metric (1 minus the cosine similarity) for face recognition.

The DeepFace.verify() method returns a dictionary with several key-value pairs. Its verified key holds a Boolean value indicating whether the faces in the two images belong to the same person.

The output also contains the bounding box coordinates of the matched faces, the distance between them, the model, the detector backend, the distance metric, and the recognition threshold. If the distance is less than the threshold, verified is True; otherwise, it is False. Note that the threshold depends on the model and the distance metric.
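To make the decision rule concrete, here is a minimal plain-Python sketch of comparing a cosine distance against a threshold. It is an illustration, not deepface’s own code: the helper names cosine_distance and is_match are hypothetical, the toy 3-dimensional vectors stand in for real 4096-dimensional VGG-Face embeddings, and 0.68 is simply the VGG-Face/cosine threshold that appears in the outputs of this tutorial.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def is_match(emb1, emb2, threshold=0.68):
    """Return (verified, distance); a match when the distance is below the threshold."""
    distance = cosine_distance(emb1, emb2)
    return distance < threshold, distance

# Hypothetical toy vectors standing in for real face embeddings
close_verified, close_dist = is_match([1.0, 0.0, 0.0], [1.0, 1.0, 0.0])
far_verified, far_dist = is_match([1.0, 0.0, 0.0], [0.0, 0.0, 1.0])
print(close_verified, round(close_dist, 4))  # True 0.2929
print(far_verified, round(far_dist, 4))      # False 1.0
```

Because only the distance is compared, the same rule works with any model; what changes from model to model is the threshold value.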

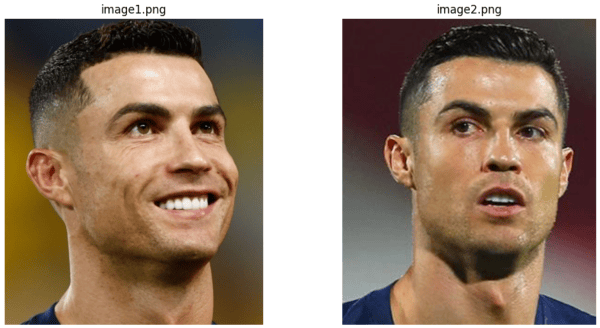

Okay, in this section, we’ll match faces in the following two images.

```python
image1 = r"/content/Facial Images/image1.png"
image2 = r"/content/Facial Images/image2.png"

result = DeepFace.verify(img1_path = image1,
                         img2_path = image2)
result
```

Output:

```
{'verified': True,
 'distance': 0.4540370718512061,
 'threshold': 0.68,
 'model': 'VGG-Face',
 'detector_backend': 'opencv',
 'similarity_metric': 'cosine',
 'facial_areas': {'img1': {'x': 231, 'y': 111, 'w': 482, 'h': 482},
  'img2': {'x': 126, 'y': 72, 'w': 328, 'h': 328}},
 'time': 0.27}
```

From the above output, you can see that the verified key is True: the distance (0.454) is below the threshold (0.68), so the faces in the two images are a match.

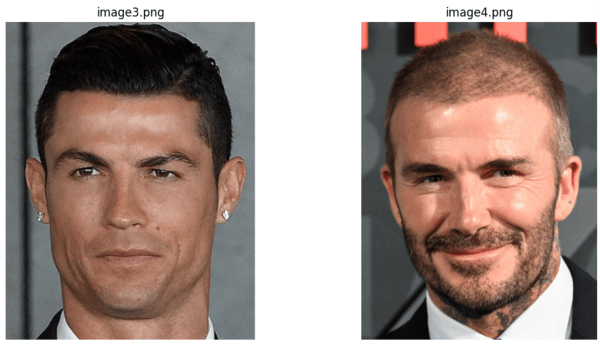

Let’s now look at another example. Here, image3.png and image4.png contain faces of different people.

```python
image3 = r"/content/Facial Images/image3.png"
image4 = r"/content/Facial Images/image4.png"

result = DeepFace.verify(img1_path = image3,
                         img2_path = image4)
result
```

Output:

```
{'verified': False,
 'distance': 0.8431300460040496,
 'threshold': 0.68,
 'model': 'VGG-Face',
 'detector_backend': 'opencv',
 'similarity_metric': 'cosine',
 'facial_areas': {'img1': {'x': 68, 'y': 189, 'w': 454, 'h': 454},
  'img2': {'x': 38, 'y': 206, 'w': 492, 'h': 492}},
 'time': 0.3}
```

In the output, the returned distance (0.8431) is greater than the threshold (0.68), so verified is False and the faces are not a match.

Matching Multiple Faces

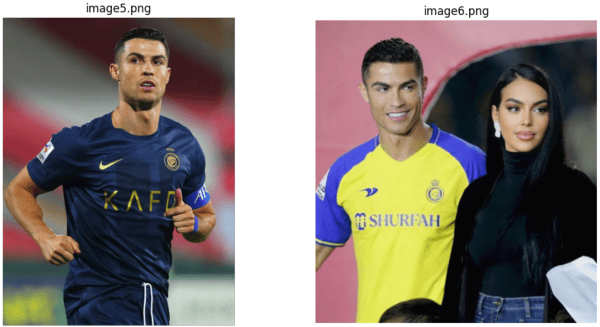

The DeepFace.verify() method can also match faces in images that contain more than one face. We will try to recognize faces in the following two images.

image6.png contains two faces. DeepFace.verify() compares the face in image5.png with both faces in image6.png and returns the best-matching face from the latter. Let’s see an example.

image5 = r"/content/Facial Images/image5.png"

image6 = r"/content/Facial Images/image6.png"

result = DeepFace.verify(img1_path = image5,

img2_path = image6)

resultOutput:

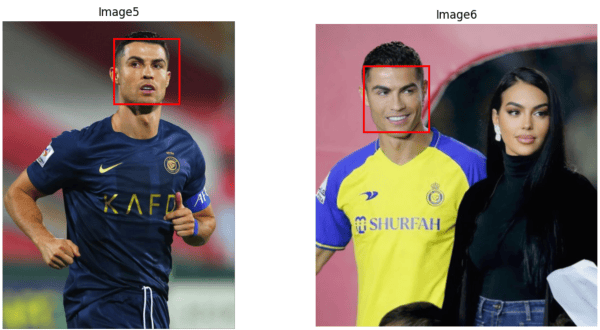

{'verified': True,

'distance': 0.6174480223008945,

'threshold': 0.68,

'model': 'VGG-Face',

'detector_backend': 'opencv',

'similarity_metric': 'cosine',

'facial_areas': {'img1': {'x': 647, 'y': 101, 'w': 379, 'h': 379},

'img2': {'x': 198, 'y': 173, 'w': 271, 'h': 271}},

'time': 0.62}In the output, you can see the coordinates of the source face from the image5.png and one recognized face from the Image6.png.

For clarity, you can plot a bounding box around the matched faces.

image5 = mpimg.imread(image5)

image6 = mpimg.imread(image6)

facial_areas = result["facial_areas"]

#Create subplots to display the images side by side

fig, axs = plt.subplots(1, 2, figsize=(12, 6))

#Plot the first image with bounding box

axs[0].imshow(image5)

rect1 = patches.Rectangle(

(facial_areas['img1']['x'], facial_areas['img1']['y']),

facial_areas['img1']['w'],

facial_areas['img1']['h'],

linewidth=2,

edgecolor='r',

facecolor='none'

)

axs[0].add_patch(rect1)

axs[0].set_title('Image5')

axs[0].axis('off')

#Plot the second image with bounding box

axs[1].imshow(image6)

rect2 = patches.Rectangle(

(facial_areas['img2']['x'],

facial_areas['img2']['y']),

facial_areas['img2']['w'],

facial_areas['img2']['h'],

linewidth=2,

edgecolor='r',

facecolor='none'

)

axs[1].add_patch(rect2)

axs[1].set_title('Image6')

axs[1].axis('off')

plt.show()Output:

The above output shows that the DeepFace.verified() method recognized the correct faces.
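When an image contains several faces, as image6.png does, the matching step described above amounts to comparing every detected face pair and keeping the closest one. The plain-Python sketch below models that idea under this assumption; it is not deepface’s implementation, and the function closest_pair and the toy embeddings are hypothetical.

```python
import math
from itertools import product

def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms

def closest_pair(faces1, faces2, threshold=0.68):
    """Compare every face in image 1 with every face in image 2.

    Returns (verified, distance, i, j) for the pair with the smallest distance,
    where i and j index into faces1 and faces2.
    """
    distance, i, j = min(
        ((cosine_distance(f1, f2), i, j)
         for (i, f1), (j, f2) in product(enumerate(faces1), enumerate(faces2))),
        key=lambda t: t[0],
    )
    return distance < threshold, distance, i, j

# Hypothetical embeddings: one face in the first image, two in the second
faces_img5 = [[1.0, 0.2, 0.0]]
faces_img6 = [[0.0, 1.0, 1.0], [0.9, 0.3, 0.1]]
verified, distance, i, j = closest_pair(faces_img5, faces_img6)
print(verified, i, j)  # True 0 1
```

Only the closest pair is reported, which is why the output above contains a single bounding box for image6.png even though it has two faces.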

Finding Matched Faces from a Directory

You can also find the recognized faces from images in a directory. You can use the DeepFace.find() method to do so. You must pass the path to the source image to the img_path parameter and the directory containing target images to the db_path parameter.

Here is an example.

results = DeepFace.find(img_path = "/content/Facial Images/image1.png",

db_path = "/content/Facial Images/")

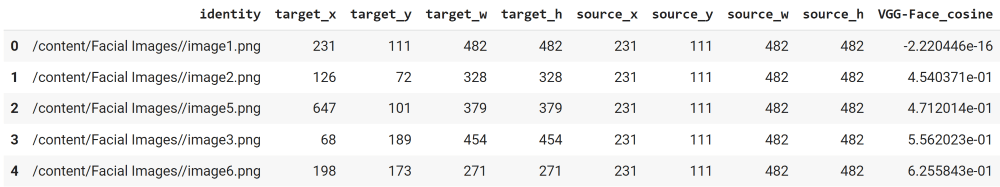

results[0].head()Output:

In the output, you can see a Pandas Dataframe containing all matched images and bounding box coordinates for the facial regions in the target and source images. You can also see the cosine similarity for all the recognized images.
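Conceptually, a directory search like this measures the distance from the source face to the embedding of every image in the database, keeps the entries under the threshold, and sorts them from closest to farthest. The plain-Python sketch below shows that idea on a toy in-memory database; the function search, the file names, and the embeddings are hypothetical, and deepface’s real implementation (which returns pandas DataFrames) differs in the details.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms

def search(source, database, threshold=0.68):
    """Return (identity, distance) pairs under the threshold, closest first."""
    matches = []
    for identity, embedding in database.items():
        distance = cosine_distance(source, embedding)
        if distance < threshold:
            matches.append((identity, distance))
    return sorted(matches, key=lambda m: m[1])

# Hypothetical database mapping file names to toy embeddings
database = {
    "person_a.png": [1.0, 0.1, 0.0],
    "person_b.png": [0.0, 1.0, 0.0],
    "person_c.png": [0.8, 0.3, 0.1],
}
for identity, distance in search([1.0, 0.0, 0.0], database):
    print(identity, round(distance, 3))
```

Sorting by distance mirrors how the closest matches are the ones you would inspect first in the DataFrame returned by DeepFace.find().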

Getting Image Embeddings

Let’s go a step further. With deepface, you can also retrieve face embeddings from any of the library’s models. You can use these embeddings as input features for your own deep-learning models in downstream tasks.

For example, you can use the DeepFace.represent() method to retrieve face embeddings for the VGG-Face model (the default model).

DeepFace.represent() returns a list of dictionaries, one for each detected face. The following script prints the keys of the first dictionary.

```python
embedding_objs = DeepFace.represent(img_path = "/content/Facial Images/image6.png")
embedding_objs[0].keys()
```

Output:

```
dict_keys(['embedding', 'facial_area', 'face_confidence'])
```

The embedding key holds the embedding vector itself, as the following script shows.

```python
print(len(embedding_objs[0]["embedding"]))
```

Output:

```
4096
```

The above output shows that the embedding size for the default VGG-Face model is 4096.

You can even change the model for facial recognition tasks, which we’ll do in the next section.

Changing Model and Distance Function

The available models and distance metrics are listed below.

```python
models = [
    "VGG-Face",
    "Facenet",
    "Facenet512",
    "OpenFace",
    "DeepFace",
    "DeepID",
    "ArcFace",
    "Dlib",
    "SFace",
]

metrics = ["cosine", "euclidean", "euclidean_l2"]
```

To use a different model and distance metric, specify the model_name and distance_metric parameters like this:

```python
result = DeepFace.verify(img1_path = image1,
                         img2_path = image3,
                         model_name = "Facenet512",
                         distance_metric = "euclidean")
result
```

Output:

```
{'verified': True,
 'distance': 18.858074251363377,
 'threshold': 23.56,
 'model': 'Facenet512',
 'detector_backend': 'opencv',
 'similarity_metric': 'euclidean',
 'facial_areas': {'img1': {'x': 231, 'y': 111, 'w': 482, 'h': 482},
  'img2': {'x': 68, 'y': 189, 'w': 454, 'h': 454}},
 'time': 0.69}
```

The threshold depends on both the model and the distance metric: Facenet512 with Euclidean distance uses a threshold of 23.56, whereas the default VGG-Face model with cosine distance uses 0.68.
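The three distance metric names listed above measure different things, which is why each model and metric combination needs its own threshold. The plain-Python sketch below illustrates what each metric computes: cosine is 1 minus the cosine similarity, euclidean is the straight-line distance between the raw embeddings, and euclidean_l2 is the Euclidean distance after scaling both embeddings to unit length. It is an illustration rather than deepface’s source, and the vectors are toy values.

```python
import math

def cosine(a, b):
    """Cosine distance: 1 - cosine similarity."""
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def euclidean(a, b):
    """Straight-line distance between the raw vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def euclidean_l2(a, b):
    """Euclidean distance between L2-normalized vectors."""
    return euclidean(l2_normalize(a), l2_normalize(b))

a, b = [3.0, 4.0], [6.0, 8.0]  # same direction, different magnitude
print(cosine(a, b), euclidean(a, b), euclidean_l2(a, b))  # 0.0 5.0 0.0
```

Only the raw euclidean metric is sensitive to the magnitude of the embeddings, so its distances (and thresholds) live on a much larger scale. For unit-length vectors, the squared euclidean_l2 distance equals twice the cosine distance, so those two metrics rank face pairs in the same order.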

Conclusion

The Python deepface library is an excellent choice for face recognition, with applications in security, biometrics, entertainment, and social media. In this tutorial, we’ve shown you how to use deepface to compare faces in images, recognize faces in images that contain multiple faces, find matching faces in a directory, and extract facial embeddings. Deepface’s implementation of state-of-the-art models like VGG-Face, Facenet, OpenFace, and ArcFace makes it a powerful asset in your deep learning arsenal. Give it a try!