Let’s look into how to fine-tune Llama-2 for question-answering tasks in Python.

Llama-2 is a state-of-the-art language model developed by Meta, designed to understand and generate human-like text. It belongs to the family of transformer-based autoregressive (causal) language models, which take a sequence of tokens as input and predict the next token in the sequence.

Released by Meta as an openly available model, Llama-2 can be used for both research and commercial purposes (subject to Meta’s license terms), empowering individuals, creators, researchers, and businesses to experiment, innovate, and scale their ideas responsibly.

With Llama-2, you can create applications ranging from simple chatbots to complex systems capable of understanding context, answering questions, and even generating content. In this tutorial, we will focus on fine-tuning Llama-2 to enhance its question-answering capabilities.

Let’s begin!

Importing and Installing Required Libraries

Before diving into the code, let’s make sure all the necessary libraries are installed. Run the following commands in a notebook environment such as Google Colab (the leading ! runs each line as a shell command) to install the required packages:

!pip install -q accelerate==0.21.0
!pip install peft==0.4.0
!pip install bitsandbytes==0.40.2
!pip install transformers==4.31.0
!pip install trl==0.4.7
!pip install datasets

Next, import the installed libraries as follows:

import os
import torch
from datasets import Dataset, load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    HfArgumentParser,
    TrainingArguments,
    pipeline,
    logging,
)
from peft import LoraConfig, PeftModel
from trl import SFTTrainer
from google.colab import files
import pandas as pd

Loading and Preprocessing the Dataset

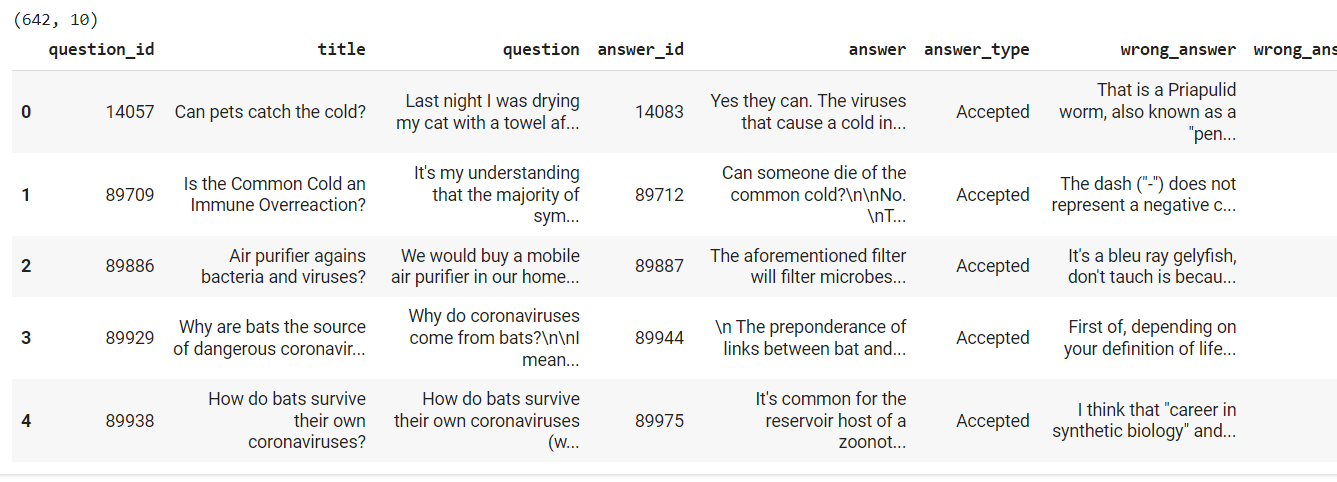

We’re going to fine-tune the Llama-2 model on a Covid-QA dataset containing question-answer pairs related to the Covid-19 pandemic. Remember, you can import your Kaggle dataset directly into Google Colab, but this is a large dataset so you can also download the zip file and extract it on your local machine. Either way, use the following script to import the community.csv file into your Python application, based on where you saved it.

dataset = pd.read_csv(r"/content/community.csv")

print(dataset.shape)

dataset.head()Output:

From the above output, you can see that the dataset contains 642 rows and ten columns. The question and answer columns contain questions and answers, respectively.

We will fine-tune the Llama-2-7b-chat-hf model, optimized for handling dialogue use cases. This model requires each training example to be in the following format:

<s>[INST] <<SYS>>
system prompt
<</SYS>>

user prompt [/INST] model answer </s>

In our case, we can drop the system prompt and fill the user prompt and model answer slots with the values from the question and answer columns. The following script creates a text column that contains the formatted input for model training.

The script also converts the dataframe into a HuggingFace dataset since we will fine-tune the Llama-2 model from the HuggingFace library.

dataset['text'] = '<s>[INST] ' + dataset['question'] + ' [/INST] ' + dataset['answer'] + ' </s>'
dataset = Dataset.from_pandas(dataset[['text']])
dataset

Output:

Dataset({
    features: ['text'],
    num_rows: 642
})

We’re almost ready to fine-tune our Llama-2 model, but first let’s set our fine-tuning configuration.
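As a side note on the prompt template used above, the same formatting can be wrapped in a small helper, which makes it easier to add a system prompt later. This is only a sketch: the function name format_llama2_example and the two sample rows are hypothetical and are not part of the dataset or of any library.

```python
from typing import Optional


def format_llama2_example(question: str, answer: str,
                          system_prompt: Optional[str] = None) -> str:
    """Build one training string in the Llama-2 chat layout."""
    if system_prompt:
        # Optional system block, wrapped in <<SYS>> ... <</SYS>>
        user_part = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{question}"
    else:
        user_part = question
    return f"<s>[INST] {user_part} [/INST] {answer} </s>"


# Hypothetical rows standing in for the question and answer columns
rows = [
    {"question": "What is Covid-19?", "answer": "A disease caused by SARS-CoV-2."},
    {"question": "How does it spread?", "answer": "Mainly through respiratory droplets."},
]
texts = [format_llama2_example(r["question"], r["answer"]) for r in rows]
print(texts[0])
```

Without a system prompt, the helper produces the same string layout as the text column built in the script above.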

Setting Model Configurations

The Llama-2 model that we fine-tune in this tutorial has 7 billion parameters. Fine-tuning such a large model requires massive memory and processing power.

Luckily, researchers have developed PEFT (parameter-efficient fine-tuning) techniques that allow you to efficiently fine-tune large language models, like Llama-2, by training only a small number of parameters while the original weights stay frozen. LoRA (Low-Rank Adaptation) is the PEFT technique we will use for fine-tuning our Llama-2 model.
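To get a feel for how small the trainable part is, here is a rough back-of-the-envelope sketch. LoRA replaces the update to a weight matrix with the product of two thin matrices of rank r, so only those two matrices are trained. The hidden size (4096), the layer count (32), and the choice of two adapted attention projections per layer are assumptions about Llama-2-7B and about the adapter setup, not values taken from this tutorial’s output.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank matrices A (rank x d_in) and B (d_out x rank)."""
    return rank * d_in + d_out * rank


hidden_size = 4096       # assumed hidden size of Llama-2-7B
num_layers = 32          # assumed number of transformer layers
adapted_per_layer = 2    # assumption: LoRA on two attention projections per layer
rank = 64                # matches lora_r used later in this tutorial

full = hidden_size * hidden_size
per_matrix = lora_param_count(hidden_size, hidden_size, rank)
total_lora = per_matrix * adapted_per_layer * num_layers

print(f"Full matrix:  {full:,} weights")
print(f"LoRA pair:    {per_matrix:,} weights")
print(f"All adapters: {total_lora:,} trainable weights")
```

Under these assumptions, the adapters add up to roughly 33.5 million trainable weights, a small fraction of the model’s 7 billion parameters.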

In addition, fine-tuning large language models often involves quantization, which refers to the process of reducing the precision of the model’s weights and activations. This can significantly reduce the model’s memory footprint and speed up inference, which makes it more practical to deploy models on devices with limited resources or in applications that require real-time performance.
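The idea can be illustrated with a toy example. The sketch below uses simple absmax rounding to signed 4-bit integers, which is an assumption made for illustration only: the NF4 format used later in this tutorial relies on a different, non-uniform set of levels and works block by block. The helper names and the sample weights are hypothetical.

```python
def quantize_absmax(weights, bits=4):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4 bits
    scale = max(abs(w) for w in weights) / qmax or 1.0  # avoid a zero scale
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


def model_memory_gb(n_params, bits):
    """Memory needed to store the weights alone, in gigabytes."""
    return n_params * bits / 8 / 1e9


weights = [0.12, -0.5, 0.33, 0.07, -0.91]           # hypothetical weights
codes, scale = quantize_absmax(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max round-trip error:", round(max_error, 4))
for bits in (32, 16, 4):
    print(f"{bits}-bit weights for 7B parameters: {model_memory_gb(7e9, bits):.1f} GB")
```

The round-trip error shows the precision that is given up, and the memory estimate shows what is gained in return: storing 7 billion weights in 4 bits takes about an eighth of the space needed for 32-bit floats.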

The following script sets various configurations for model training, including LoRA and quantization settings.

#Base model identifier from Hugging Face
model_name = "NousResearch/Llama-2-7b-chat-hf"

#LoRA settings for modifying attention mechanisms
lora_r = 64 # Rank of the LoRA update matrices
lora_alpha = 16 # Scaling factor for LoRA
lora_dropout = 0.1 # Dropout rate in LoRA layers

#4-bit precision settings for model efficiency
use_4bit = True # Enable 4-bit precision
bnb_4bit_compute_dtype = "float16" # Data type for computations
bnb_4bit_quant_type = "nf4" # Quantization method
use_nested_quant = False # Whether to enable double (nested) quantization for more compression

#Training settings
output_dir = "./results" # Where to save model and results
num_train_epochs = 1 # Total number of training epochs
fp16 = False # Whether to use fp16 mixed precision training
bf16 = False # Whether to use bfloat16 precision (set to True on GPUs that support it, such as the A100)
per_device_train_batch_size = 4 # Training batch size per GPU
per_device_eval_batch_size = 4 # Evaluation batch size per GPU
gradient_accumulation_steps = 1 # Number of steps for gradient accumulation
gradient_checkpointing = True # Save memory during training
max_grad_norm = 0.3 # Max norm for gradient clipping
learning_rate = 2e-4 # Initial learning rate
weight_decay = 0.001 # Weight decay for regularization
optim = "paged_adamw_32bit" # Optimizer choice
lr_scheduler_type = "cosine" # Learning rate scheduler
max_steps = -1 # Total number of training steps (-1 means it is derived from num_train_epochs)
warmup_ratio = 0.03 # Fraction of training steps used for learning rate warmup
group_by_length = True # Group sequences by length for efficiency
save_steps = 0 # Checkpoint save frequency (in steps)
logging_steps = 25 # Logging frequency (in steps)

#Supervised fine-tuning (SFT) settings
max_seq_length = None # Max sequence length (None uses the trainer's default)
packing = False # Whether to pack short sequences together

device_map = {"": 0} # Load model on specific GPU
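To see what these training settings imply for a dataset of 642 examples, the following sketch estimates the number of optimizer steps and traces a cosine learning rate schedule with warmup. It assumes a single GPU and that the last partial batch is kept. The two helper functions are written here for illustration only and are not the trainer’s own code, so treat the numbers as an approximation.

```python
import math


def training_steps(num_examples, batch_size, grad_accum, epochs):
    """Optimizer steps for a run, assuming the last partial batch is kept."""
    batches_per_epoch = math.ceil(num_examples / batch_size)
    steps_per_epoch = max(batches_per_epoch // grad_accum, 1)
    return math.ceil(steps_per_epoch * epochs)


def cosine_lr(step, total_steps, warmup_steps, base_lr):
    """Linear warmup followed by cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


# Values taken from the configuration above
total = training_steps(num_examples=642, batch_size=4, grad_accum=1, epochs=1)
warmup = math.ceil(total * 0.03)

print("total steps:", total)
print("warmup steps:", warmup)
for step in (0, warmup, total // 2, total):
    print(step, f"{cosine_lr(step, total, warmup, 2e-4):.2e}")
```

With logging_steps set to 25, a run of this length would report the training loss only a handful of times, which is worth keeping in mind when reading the training output.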

Fine-tuning Llama-2 Model on Custom Dataset

Finally, we are ready to fine-tune our Llama-2 model for question-answering tasks.

The following script applies the LoRA and quantization settings (defined in the previous script) to the Llama-2-7b-chat-hf model we load from HuggingFace.

We set the training arguments and finally use the SFTTrainer class to fine-tune the Llama-2 model on our custom question-answering dataset. We will train the model for a single epoch. You can try more epochs to see if you get better results.

#Setting up the data type for computation based on the precision setting
compute_dtype = getattr(torch, bnb_4bit_compute_dtype)

#Configuring the 4-bit quantization and precision for the model
bnb_config = BitsAndBytesConfig(
    load_in_4bit=use_4bit,
    bnb_4bit_quant_type=bnb_4bit_quant_type,
    bnb_4bit_compute_dtype=compute_dtype,
    bnb_4bit_use_double_quant=use_nested_quant,
)

#Verifying if the current GPU supports bfloat16 to suggest using it for better performance
if compute_dtype == torch.float16 and use_4bit:
    major, _ = torch.cuda.get_device_capability()
    if major >= 8:
        print("=" * 80)
        print("Accelerate training with bf16=True")
        print("=" * 80)

#Loading the specified model with the above quantization configuration
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=bnb_config,
    device_map=device_map
)
model.config.use_cache = False # Disable caching to save memory
model.config.pretraining_tp = 1 # Tensor parallelism degree from pretraining; 1 uses the standard linear layers

#Initializing the tokenizer for the model and setting padding configurations
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
tokenizer.pad_token = tokenizer.eos_token # Setting the pad token
tokenizer.padding_side = "right" # Adjusting padding to the right to avoid issues during training

#Configuring LoRA parameters for the model to fine-tune its attention mechanisms
peft_config = LoraConfig(
    lora_alpha=lora_alpha,
    lora_dropout=lora_dropout,
    r=lora_r,
    bias="none", # Setting the bias option for LoRA
    task_type="CAUSAL_LM", # Defining the task type as causal language modeling
)

#Defining various training parameters such as directory, epochs, batch sizes, optimization settings, etc.
training_arguments = TrainingArguments(
    output_dir=output_dir,
    num_train_epochs=num_train_epochs,
    per_device_train_batch_size=per_device_train_batch_size,
    gradient_accumulation_steps=gradient_accumulation_steps,
    optim=optim,
    save_steps=save_steps,
    logging_steps=logging_steps,
    learning_rate=learning_rate,
    weight_decay=weight_decay,
    fp16=fp16,
    bf16=bf16,
    max_grad_norm=max_grad_norm,
    max_steps=max_steps,
    warmup_ratio=warmup_ratio,
    group_by_length=group_by_length, # Grouping by length for efficient batching
    lr_scheduler_type=lr_scheduler_type,
    report_to="tensorboard" # Reporting to TensorBoard for monitoring
)

#Setting up the fine-tuning trainer with the specified model, dataset, tokenizer, and training arguments

trainer = SFTTrainer(

model=model,

train_dataset=dataset,

peft_config=peft_config,

dataset_text_field="text", # Specifying which dataset field to use for text

max_seq_length=max_seq_length, # Setting the maximum sequence length

tokenizer=tokenizer,

args=training_arguments,

packing=packing, # Enabling packing for efficiency

)

#Starting the training process

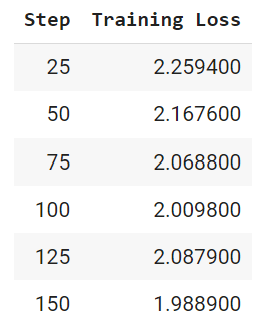

trainer.train()Output:

From the above output, you can see that the loss continues to decrease. Training for more epochs would likely reduce the loss further, but watch out for overfitting. We introduced the concept of overfitting when using callback functions in TensorFlow Keras to stop training early.

Generating Text from Fine-tuned Model

Okay, we’re now ready to make inferences from our fine-tuned model. We’ll first convert our input text to the same format we used for training.

Next, we pass the fine-tuned model, the tokenizer, and a maximum length to the HuggingFace pipeline() function, then call the resulting pipeline on the formatted input text. Note that max_length caps the total number of tokens, prompt included, not just the generated output.

The pipeline returns a list of results, and we can read the model response from result[0]['generated_text']. The response includes the input text as well, so to extract only the output text, we’ll split the response on the [/INST] string and keep only the part that follows it.

#Ignore warnings

logging.set_verbosity(logging.CRITICAL)

#Use fine-tuned Llama model for running text generation pipelines

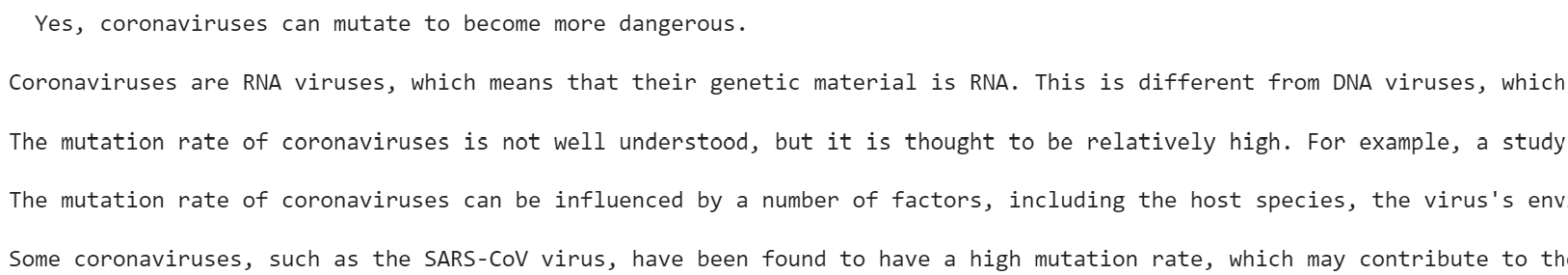

prompt = "Can coronaviruses mutate to an extent to get as dangerous as fliovirusues such as Marburg viruses, Ravn or Ebola Zaire?"

pipe = pipeline(task="text-generation",

model=model,

tokenizer=tokenizer, max_length=300)

result = pipe(f"<s>[INST] {prompt} [/INST]")

print(result[0]['generated_text'].split("[/INST]")[1])Output:

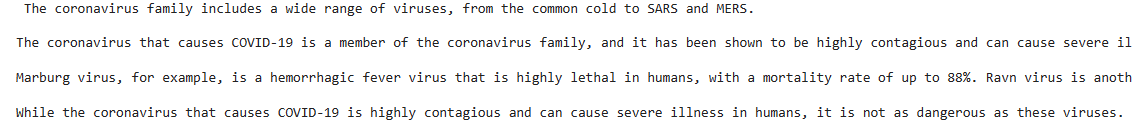

From the above output, you can see that the model has generated an appropriate, albeit theoretical, response. Asking the same question a second time yields a different response:
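The extraction step used in the script above can also be written as a small reusable helper. This is a sketch only: extract_answer is a hypothetical name, and the sample string merely imitates the shape of result[0]['generated_text']. Unlike indexing the result of split() directly, this version falls back to the full text when the [/INST] marker is missing instead of raising an error.

```python
def extract_answer(generated_text: str, marker: str = "[/INST]") -> str:
    """Return the text after the first marker, or the whole text if it is absent."""
    _, found, tail = generated_text.partition(marker)
    return tail.strip() if found else generated_text.strip()


# Hypothetical pipeline output, shaped like result[0]['generated_text']
sample = "<s>[INST] Is Covid-19 airborne? [/INST] It can spread through the air. "
print(extract_answer(sample))
```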

Finally, you can save the fine-tuned model using the following script so you don’t have to retrain it each time:

#Fine-tuned model name
fine_tuned_model = "Llama-2-7b-covid-chat-finetune"

#Save trained model
trainer.model.save_pretrained(fine_tuned_model)

Conclusion

Fine-tuning a large language model such as Llama-2 can be handy for developing customized question-answering systems. However, fine-tuning LLMs can be complicated since they require huge memory and processing power.

Thanks to PEFT techniques such as LoRA, combined with quantization, you can now fine-tune LLMs on modest hardware, even on your local machine, as you have seen in this article, where you fine-tuned Llama-2 on a custom COVID question-answering dataset.

I recommend you fine-tune LLMs such as Llama-2 for other NLP tasks, such as text classification and summarization, and see what results you get.
