In this tutorial, we’re going to show you how to classify images of handwritten numbers, or digits, using the Python Scikit-learn library for machine learning. This is the first step for building a full handwriting detection program. The Scikit-learn library is commonly called “sklearn”, so that’s how we’ll refer to it throughout this tutorial.

The sklearn library expects data in tabular format, where each record is represented as a row vector. Images, on the other hand, are normally represented as 2D matrices, often with several color channels.

Nevertheless, if you convert your 2D images to row vectors, you can train machine learning algorithms using the sklearn library for image classification tasks. This is exactly what we’ll teach you in this tutorial.
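To make the idea concrete before we touch the real dataset, here’s a minimal sketch of turning a small batch of color images into row vectors. The batch itself is made up for illustration (4 random images of 32 x 32 pixels with 3 color channels); it isn’t part of the digits dataset used below.

```python
import numpy as np

# Hypothetical batch: 4 color images, 32 x 32 pixels, 3 channels (RGB).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 32, 32, 3))

# Flatten each image into one row: 32 * 32 * 3 = 3072 values per image.
rows = images.reshape(len(images), -1)

print(images.shape)  # (4, 32, 32, 3)
print(rows.shape)    # (4, 3072)
```

Each row of the result is one record in the tabular layout sklearn works with.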

Installing the sklearn library and Importing the Dataset

You can install the sklearn library with the following pip command on your command terminal.

pip install scikit-learn

If you’re using the Anaconda distribution of Python, the sklearn library comes pre-installed.

The handwritten digits dataset we’ll use in this tutorial ships with the sklearn library and can be loaded using the load_digits() function from the datasets submodule, as shown in the script below. This dataset contains 1,797 images of handwritten digits between 0 and 9. The script below also prints the attributes of the dataset using Python’s built-in dir() function.

from sklearn import datasets

import numpy as np

digits_ds = datasets.load_digits()

print(dir(digits_ds))

Output:

['DESCR', 'data', 'feature_names', 'frame', 'images', 'target', 'target_names']

We’ll be using the images and target attributes from the list of attributes printed above. The images attribute is a NumPy array that stores your actual images, while the target attribute is another NumPy array that stores the corresponding labels for your images.

Dataset Analysis

Before training a machine learning algorithm, it’s a good practice to analyze your dataset - or at least familiarize yourself with it.
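One quick check that’s worth adding here is how many images the dataset holds for each digit, since a heavily lopsided dataset can skew a classifier. The sketch below is our own addition and uses a separate variable name (digits) so it runs on its own.

```python
import numpy as np
from sklearn.datasets import load_digits

digits = load_digits()

# Count how many images carry each label (0 through 9).
counts = np.bincount(digits.target)

for digit, count in enumerate(counts):
    print(f"digit {digit}: {count} images")
```

If the counts are roughly equal, plain accuracy is a reasonable metric for this problem.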

Let’s see the type and shape of our image array.

print(type(digits_ds.images))

print(digits_ds.images.shape)

Output:

<class 'numpy.ndarray'>

(1797, 8, 8)

The output shows that the image array is a NumPy array with 1797 image samples. Each image sample is 8 pixels wide and 8 pixels high.

Let’s print our pixel values for the image at index 7.

print(digits_ds.images[7])

From the output below, you can see that the image at index 7 is indeed represented as a 2D array of shape 8 x 8.

Output:

[[ 0.  0.  7.  8. 13. 16. 15.  1.]
 [ 0.  0.  7.  7.  4. 11. 12.  0.]
 [ 0.  0.  0.  0.  8. 13.  1.  0.]
 [ 0.  4.  8.  8. 15. 15.  6.  0.]
 [ 0.  2. 11. 15. 15.  4.  0.  0.]
 [ 0.  0.  0. 16.  5.  0.  0.  0.]
 [ 0.  0.  9. 15.  1.  0.  0.  0.]
 [ 0.  0. 13.  5.  0.  0.  0.  0.]]

Let’s print the minimum and maximum values for the pixels in our image.

print(np.min(digits_ds.images[7]))

print(np.max(digits_ds.images[7]))

Output:

0.0

16.0

The output shows that an individual pixel value can range from 0 to 16, so the entire image is represented by an 8 x 8 array of values in the range 0 to 16.

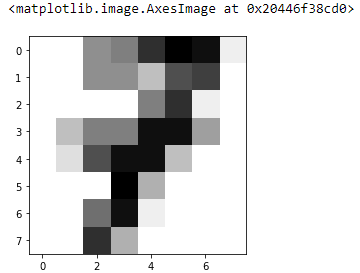

Finally, you can plot the 2D NumPy array containing our image using the imshow() method from the pyplot module of the matplotlib library.

import matplotlib.pyplot as plt

plt.imshow(digits_ds.images[7], cmap='binary')

Output:

From the above output, you can see that the image at index 7 contains the digit 7, though the image is quite blurry since it’s only 8 x 8 pixels, each taking one of 17 intensity values (0 to 16). Despite the lack of clarity, you’ll see that this information will be enough for our machine learning algorithm to learn what’s in the image.

Let’s now see the type and shape of our target array containing our image labels.

print(type(digits_ds.target))

print(digits_ds.target.shape)

Output:

<class 'numpy.ndarray'>

(1797,)

The above output shows that the target array is a one-dimensional NumPy array of size 1797.

Finally, let’s print the value of the target array at the index 7.

print(digits_ds.target[7])

Output:

7

You can see that the target for the image at index 7 is 7. This is the label for our image. It’s important to note that the index and label values will not always be the same since labels in this dataset can range from 0 to 9, while indices can range from 0 to 1796 (1 less than the total number of images).
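If you’d like to see this for yourself, the short sketch below (our own addition, not part of the original script) lists the positions where the index happens to equal the label, and then prints a slice of labels further into the array where that can no longer be the case.

```python
import numpy as np
from sklearn.datasets import load_digits

digits = load_digits()
indices = np.arange(len(digits.target))

# Positions where the array index happens to equal the label.
same = indices[indices == digits.target]
print(same)

# Labels at indices 100 to 109: the indices are far above 9, the labels are not.
print(digits.target[100:110])
```

Since labels never exceed 9, a match is only possible within the first ten positions.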

Converting Data into the Right Shape for sklearn

The images in our dataset are currently in 2D format. Sklearn expects the input as a 2D array with one row per sample, so each image has to become a 1D vector. One way to convert a matrix into a vector is by simply concatenating all the rows in a matrix together to form one big row.
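Here’s a tiny sketch of that row-by-row concatenation on a made-up 3 x 3 matrix, so you can see exactly where each value ends up. It also shows that the operation is reversible: nothing is lost by flattening.

```python
import numpy as np

matrix = np.array([[1, 2, 3],
                   [4, 5, 6],
                   [7, 8, 9]])

# Concatenate the rows by hand ...
by_hand = np.concatenate([matrix[0], matrix[1], matrix[2]])

# ... and let reshape do the same thing (NumPy uses row-major order by default).
flat = matrix.reshape(-1)

print(flat)                           # [1 2 3 4 5 6 7 8 9]
print(np.array_equal(by_hand, flat))  # True

# Reshaping back recovers the original 3 x 3 matrix.
print(flat.reshape(3, 3))
```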

The following script uses the reshape() method of the NumPy array to change the shape of all the images in our dataset from 8 x 8 matrices to 1D vectors of size 64.

features = digits_ds.images.reshape(len(digits_ds.images), -1)

print(features.shape)

Output:

(1797, 64)

Our targets, which contain our image labels, are already in the right shape since they are one-dimensional. The following script stores our targets in a new variable called labels.

labels = digits_ds.target

print(labels.shape)

Output:

(1797,)

Dividing Data into Training and Test Sets

Typically, a machine learning algorithm is trained on a subset of a dataset called the training data and evaluated on another set called the test set.

Let’s divide our dataset into these two parts. You can use the train_test_split() method from the model_selection submodule of the sklearn library. The following script divides the data into an 80% training set and a 20% test set.

from sklearn.model_selection import train_test_split

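X_train, X_test, y_train, y_test = train_test_split(features, labels, test_size=0.20, random_state=0)

Finally, before training a machine learning algorithm, it’s good practice to standardize your data so that every feature has zero mean and unit variance. The following script does that by fitting the scaler on the training set and applying the same transformation to the test set.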

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

Training the Algorithm and Making Predictions with sklearn

So far, you’ve performed all the necessary preprocessing steps for training a machine learning model with the sklearn library. The next step is to train your model on the training set so it learns to classify these handwritten digits.
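Before moving on, you may want to sanity-check the preprocessing. The following sketch repeats the split and scaling steps under different variable names (digits, scaler, X_train_scaled, which are our own choices) and confirms the set sizes and the effect of the scaler.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

digits = load_digits()
features = digits.images.reshape(len(digits.images), -1)

X_train, X_test, y_train, y_test = train_test_split(
    features, digits.target, test_size=0.20, random_state=0
)
print(X_train.shape, X_test.shape)  # (1437, 64) (360, 64)

# Fit the scaler on the training set only, then reuse it for the test set.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Each training column should now have a mean of (about) zero.
print(np.abs(X_train_scaled.mean(axis=0)).max())

# Columns that vary in the training set should have unit standard deviation.
# Constant columns (pixels that never change) are left at zero by the scaler.
varying = X_train.std(axis=0) > 0
print(np.allclose(X_train_scaled[:, varying].std(axis=0), 1.0))
```

Fitting the scaler on the training set alone keeps information about the test set from leaking into the model.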

The sklearn library contains several machine learning algorithms for classification tasks, all of which are listed in the sklearn documentation. For this tutorial, we’ll use the Logistic Regression algorithm, which is one of the simplest classification algorithms available.

To train the Logistic Regression algorithm, we’ll first import the LogisticRegression class from the linear_model submodule of the sklearn library.

Next, we’ll call the fit() method of the LogisticRegression class and pass it our training features and labels. The random_state parameter in the script below seeds the random number generator, which matters only for solvers that shuffle the data (sag, saga, and liblinear); with the default solver it has no effect, so it’s there only to keep results reproducible if you switch solvers.

Once the fit() method returns a trained model, you can use it to make predictions on the test set. To make predictions, you need to call the predict() method and pass it your test set. The predict() method returns predicted labels for the data samples in our test set.

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression(random_state=42).fit(X_train, y_train)

predictions = clf.predict(X_test)

To see how well your algorithm has learned to classify the handwriting, you can compare the predicted labels for your test set with the actual labels.

Use the classification_report() and accuracy_score() functions from the sklearn.metrics module to find the precision, recall, and accuracy values for your model.

from sklearn.metrics import classification_report, accuracy_score

print(classification_report(y_test,predictions))

print("Accuracy:",accuracy_score(y_test, predictions))

Output:

              precision    recall  f1-score   support

           0       1.00      1.00      1.00        27
           1       0.91      0.89      0.90        35
           2       0.97      0.97      0.97        36
           3       0.97      1.00      0.98        29
           4       0.97      1.00      0.98        30
           5       0.97      0.93      0.95        40
           6       1.00      0.98      0.99        44
           7       1.00      0.97      0.99        39
           8       0.92      0.92      0.92        39
           9       0.91      0.98      0.94        41

    accuracy                           0.96       360
   macro avg       0.96      0.96      0.96       360
weighted avg       0.96      0.96      0.96       360

Accuracy: 0.9611111111111111

From the above output, you can see that our logistic regression model achieves an accuracy of 96.11% on our test set, which is pretty impressive given that the images in our dataset are represented using only 64 pixels (8 x 8).
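The report tells you how often each digit is misclassified, but not what it gets mistaken for. One way to see that is a confusion matrix. The sketch below is our own addition: it rebuilds the model under the same settings as above, except for max_iter=1000, which is an assumption we’ve added to give the solver room to converge, so the numbers may differ slightly from the report above.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

digits = load_digits()
features = digits.images.reshape(len(digits.images), -1)
X_train, X_test, y_train, y_test = train_test_split(
    features, digits.target, test_size=0.20, random_state=0
)

scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# max_iter=1000 is an assumption of this sketch (the tutorial uses the default).
clf = LogisticRegression(random_state=42, max_iter=1000).fit(X_train, y_train)
predictions = clf.predict(X_test)

# Rows are true digits, columns are predicted digits.
cm = confusion_matrix(y_test, predictions)
print(cm)

# List the off-diagonal cells, i.e. the actual mistakes.
mistakes = cm - np.diag(np.diag(cm))
for true_digit, predicted_digit in zip(*np.nonzero(mistakes)):
    count = cm[true_digit, predicted_digit]
    print(f"{count} image(s) of {true_digit} predicted as {predicted_digit}")
```

The diagonal holds the correct predictions, so anything off the diagonal points to a pair of digits the model confuses.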

You can use your trained model to make a prediction on a single image. The following script selects the image at index 7 from our dataset, reshapes it into a single-row array of shape (1, 64), scales it with the same StandardScaler (sc) we fitted on the training set, and then uses the predict() method of our trained model to predict its label. The scaling step matters because the model was trained on scaled features, so it needs to see new images on the same scale. In other words, we’re using our trained model to recognize the handwritten number saved in the image.

single_test = sc.transform(digits_ds.images[7].reshape(1, -1))

prediction = clf.predict(single_test)

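print(prediction[0])

Output:

7

The output shows that our model has correctly predicted the label for the image at index 7 in our dataset. Keep in mind that this image may have been part of the training set, so treat this as a demonstration of the API rather than an additional test.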
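If you also want to know how confident the model is, you can ask for class probabilities instead of a single label. The sketch below is our own addition. It wraps the scaler and the classifier in a pipeline (make_pipeline from sklearn.pipeline) so the raw image gets scaled automatically; the max_iter=1000 setting is an assumption we’ve added to help the solver converge.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

digits = load_digits()
features = digits.images.reshape(len(digits.images), -1)
X_train, X_test, y_train, y_test = train_test_split(
    features, digits.target, test_size=0.20, random_state=0
)

# The pipeline scales the input and then classifies it, in one object.
model = make_pipeline(
    StandardScaler(),
    LogisticRegression(random_state=42, max_iter=1000),  # max_iter is our assumption
)
model.fit(X_train, y_train)

# Pass the raw (unscaled) image; the pipeline applies the scaler for us.
raw_image = digits.images[7].reshape(1, -1)
probabilities = model.predict_proba(raw_image)[0]

for digit, probability in zip(model.classes_, probabilities):
    print(f"{digit}: {probability:.3f}")

print("most likely:", model.classes_[np.argmax(probabilities)])
```

Bundling the scaler with the model this way makes it hard to forget the scaling step when predicting on new images.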

Full Code

Before we get to the limitations of an sklearn machine learning model like this, here’s the full code we just built:

from sklearn import datasets

import numpy as np

#load the digits dataset from sklearn

digits_ds = datasets.load_digits()

#extract the images (features) and labels from our dataset

features = digits_ds.images.reshape(len(digits_ds.images), -1)

labels = digits_ds.target

#split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(features, labels, test_size=0.20, random_state=0)

#normalize and scale our data

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

#train our model using Logistic Regression

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression(random_state=42).fit(X_train, y_train)

predictions = clf.predict(X_test)

#print the accuracy of our model

from sklearn.metrics import classification_report, accuracy_score

print(classification_report(y_test,predictions))

print("Accuracy:",accuracy_score(y_test, predictions))

#use our model to make a prediction on a single image (image 7)

single_test = sc.transform(digits_ds.images[7].reshape(1, -1))

prediction = clf.predict(single_test)

print(prediction[0])

Final Thoughts

Though the sklearn library can be used for image classification tasks, it’s not recommended for classifying complex images with a lot of pixels and color channels. For complex cases like this, advanced computer vision algorithms like convolutional neural networks are recommended. These algorithms are better served by Python libraries like PyTorch and TensorFlow. For simple image classification tasks like the one explained in this tutorial, you can try any of the classification algorithms in the sklearn library. That’s why scikit-learn is great for getting your feet wet when you’re first getting started with machine learning.
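As a rough illustration of how easy it is to swap algorithms, the sketch below runs the same split through three sklearn classifiers. The choice of classifiers (KNeighborsClassifier and SVC alongside LogisticRegression), the pipeline wrapper, and max_iter=1000 are all our own assumptions for this example rather than part of the tutorial’s script.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

digits = load_digits()
features = digits.images.reshape(len(digits.images), -1)
X_train, X_test, y_train, y_test = train_test_split(
    features, digits.target, test_size=0.20, random_state=0
)

# Classifiers to compare; all are left at their defaults except max_iter.
candidates = {
    "logistic regression": LogisticRegression(max_iter=1000, random_state=42),
    "k-nearest neighbors": KNeighborsClassifier(),
    "support vector machine": SVC(),
}

scores = {}
for name, classifier in candidates.items():
    # Same preprocessing for every candidate: scale, then classify.
    model = make_pipeline(StandardScaler(), classifier)
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # accuracy on the test set
    print(f"{name}: {scores[name]:.4f}")
```

Because every sklearn classifier shares the same fit(), predict(), and score() interface, only the dictionary entries need to change to try a different algorithm.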
