This is the second part of our four-part tutorial on the Python csv module. In the first part, we introduced the CSV data format, showing how the Python csv module represents it, along with its many dialects. Now it’s time to learn how to use Python to read CSV files. Before we can do that, we need to take a look at character sets and encoding forms, which are the cornerstone of handling and troubleshooting any kind of text data.

- A Crash Course on Character Sets and Encoding Forms

- Reading CSV Data

- Handling Exceptions

- Closing Thoughts

- References

A Crash Course on Character Sets and Encoding Forms

Have you ever opened a text file with your favorite text editor and found replacement characters (shown as empty rectangles) scattered all over the page? Have you ever opened a file with Python’s built-in open() function, only to get a UnicodeDecodeError? I certainly have.

This section will help you understand these kinds of problems, and how to get around them in Python using the codecs module. The point is: if we can’t properly open a text file in general, we can’t properly deal with CSV files in particular.

You’re more than welcome to skip over this section, but it’s important background information designed to help you troubleshoot and debug errors you may encounter.

Glossary

In this section we will list the most important concepts about character sets and encoding forms. In the following sections we’ll see some popular examples of character sets. Some of them represent each character with just one byte (e.g. ASCII and Latin1), others use more complex rules (e.g. Unicode).

Characters

Characters are abstract entities representing components of the written languages, e.g. LATIN CAPITAL LETTER A. They may have a graphic representation (e.g. the letters of the English alphabet or the decimal digits), or not (e.g. control characters). Each character is assigned a unique integer number, called a code point.

Glyphs

Glyphs are the shapes of a character when represented graphically. Glyphs are grouped in fonts. While a character has only one code point, the same code point can be represented graphically in many different ways, depending on the font family. Standards dealing with character sets and encoding usually don’t prescribe their graphic representation as glyphs, though they usually have tables with examples of glyphs, e.g. the Unicode Code Charts.

Encoding Forms

Though each character is assigned a unique code point, code points are numbers, and numbers can be represented in binary in many ways. For example, the 16-bit number 0x1234 is stored as the byte sequence 34 12 on a little-endian processor and as 12 34 on a big-endian processor. Encoding forms (or encodings) are rules for representing all code points of a character set in binary form. As we’ll see, the same character set can have many encodings, which is why reading files in a programming language like Python can be challenging.
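To make the byte-order example concrete, here is a minimal sketch using Python’s built-in int.to_bytes() and int.from_bytes(). These let us choose the byte order explicitly instead of relying on the processor’s.

```python
import sys

number = 0x1234

# Serialize the same 16-bit number with both byte orders
little = number.to_bytes(2, byteorder="little")
big = number.to_bytes(2, byteorder="big")

print(little.hex())  # 3412
print(big.hex())     # 1234

# Reading the bytes back with the wrong byte order gives a different number
print(hex(int.from_bytes(little, byteorder="big")))  # 0x3412

# Byte order of the machine running this script ('little' or 'big')
print(sys.byteorder)
```

The bytes themselves carry no hint about which order was used. That is exactly the problem encoding forms and byte order marks address later in this section.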

The ISO/IEC 646 Character Set

Bear with me here. This is going to get a little complicated.

The ISO/IEC 646 Standard, titled Information technology — ISO 7-bit coded character set for information interchange (or its ECMA equivalent), defines a template for many character sets and a unique Character Set called International Reference Version (IRV). The standard uses the notation n/m to represent code points, where n is the value of the higher four bits of the number (in decimal digits), m is the value of the lower four bits; e.g. 0x1F is represented as 1/15. This standard classifies all characters as follows:

- Characters from 0/0 (decimal 0) to 1/15 (decimal 31) are called Control Characters. The standard says that there are at most 32 of them. They are not allocated by this standard.

- The character SPACE is assigned to code point 2/0 (decimal 32).

- Characters from 2/1 (decimal 33) to 7/14 (decimal 126) are graphic characters. Some of them are allocated uniquely, some have alternative allocations (i.e. the standard lists all the alternatives), and others are left free. This flexibility allows national standards to allocate them as needed.

- The character DELETE is assigned to code point 7/15 (decimal 127).

The standard mandates that each character is encoded as a single byte, which has the same value as its code point; e.g. LATIN CAPITAL LETTER A has code point 4/1 (decimal 65), and it is encoded as 0x41. In a sequence of bytes encoded in a character set derived from this standard, every byte greater than 7/15 (0x7F) should be treated as an encoding error.
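The n/m notation and the classification above are easy to reproduce in a few lines. The following is a minimal sketch. The helper names to_nm() and classify() are hypothetical, made up for this illustration. The last lines show that Python’s own 'ascii' codec rejects bytes above 0x7F, as the standard prescribes.

```python
def to_nm(code_point: int) -> str:
    """Hypothetical helper: format a 7-bit code point in ISO/IEC 646 n/m notation."""
    if not 0 <= code_point <= 0x7F:
        raise ValueError("ISO/IEC 646 code points range from 0/0 to 7/15")
    # n = value of the higher four bits, m = value of the lower four bits
    n, m = divmod(code_point, 16)
    return f"{n}/{m}"


def classify(code_point: int) -> str:
    """Hypothetical helper: classify a code point using the ranges listed above."""
    to_nm(code_point)  # reuse the range check
    if code_point <= 0x1F:
        return "control"
    if code_point == 0x20:
        return "SPACE"
    if code_point == 0x7F:
        return "DELETE"
    return "graphic"


print(to_nm(0x1F), classify(0x1F))            # 1/15 control
print(to_nm(ord("A")), classify(ord("A")))    # 4/1 graphic

# Python's 'ascii' codec treats every byte above 0x7F as an error
try:
    b"\x41\x80".decode("ascii")
except UnicodeDecodeError as exc:
    print(exc.reason)  # ordinal not in range(128)
```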

The ASCII Character Set

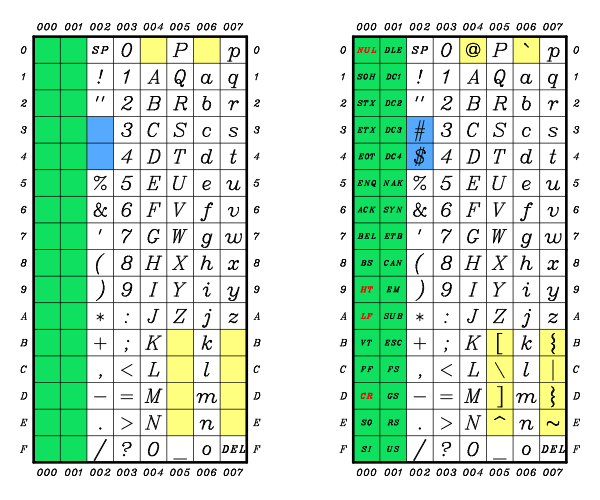

The ISO/IEC 646 IRV is equivalent to the American Standard Code for Information Interchange (ASCII) Character Set, which we’re all more familiar with. The following table compares the ISO template (to the left) with the ASCII Character Set (to the right). As we’ll see later, the Unicode Character Set includes all ASCII characters. You should read the table as follows:

- Control characters have a green background. In the ASCII table, the most frequently used control characters are marked in red: NUL (NULL), HT (CHARACTER TABULATION), LF (LINE FEED) and CR (CARRIAGE RETURN). For a brief explanation of the remaining control characters, I encourage you to explore the Basic Latin (ASCII) Unicode chart or the ECMA-48 description.

- Alternative allocations have a light blue background.

- National or application-oriented allocations have a light yellow background.

To get the Unicode code point for a character in the table, concatenate the label at the top of the table (or at the bottom) with the label to the left (or to the right), and add the U+ prefix. For example, the character LATIN CAPITAL LETTER A has the Unicode code point U+ + 004 + 1 = U+0041. It’s all starting to come together, isn’t it?
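In Python, you don’t need the table to find a code point: the built-in ord() returns it and chr() goes the other way. The small code_point_label() helper below is hypothetical; it just formats the number in the U+ notation.

```python
import unicodedata


def code_point_label(char: str) -> str:
    """Hypothetical helper: return the U+XXXX label of a single character."""
    # ord() gives the code point; format it with at least four hex digits
    return f"U+{ord(char):04X}"


print(code_point_label("A"), unicodedata.name("A"))
# U+0041 LATIN CAPITAL LETTER A

# From a code point back to the character
print(chr(0x41))  # A
```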

The Latin-1 Character Set

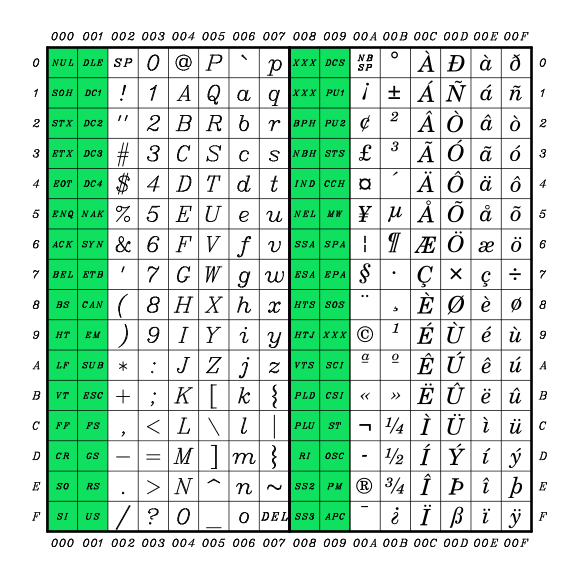

The Latin-1 character set has 256 characters, encoded into a single byte. It is defined by ISO/IEC 8859-1:1998, Information technology — 8-bit single-byte coded graphic character sets — Part 1: Latin alphabet No. 1. That was a mouthful!

The characters whose code points range from 0 to 0x7F (decimal 127) are the same as in the ASCII character set. Control characters have a green background. For a brief explanation of the control characters whose code points are in the range from 0x80 (decimal 128) to 0x9F (decimal 159) refer to the Latin-1 Supplement Unicode chart.

Once again, in the Latin-1 standard every character is encoded as a single byte, which has the same value as its code point. In a sequence of bytes encoded using this encoding form, all 8-bit bytes are legal, since every integer from 0 to 255 is assigned to a character in the Latin-1 character set.
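We can check this property with Python’s 'latin-1' codec. The sketch below decodes every possible byte value. Decoding never fails, and each byte maps to the character with the same code point. By contrast, the 'ascii' codec stops at the first byte above 0x7F.

```python
# Every possible byte value, from 0x00 to 0xFF
all_bytes = bytes(range(256))

# Latin-1 never fails: each byte becomes the character with the same code point
text = all_bytes.decode("latin-1")
print(len(text))                                            # 256
print(all(ord(ch) == b for ch, b in zip(text, all_bytes)))  # True

# Encoding the text again gives back exactly the same bytes
print(text.encode("latin-1") == all_bytes)                  # True

# ASCII, by contrast, rejects the first byte above 0x7F
try:
    all_bytes.decode("ascii")
except UnicodeDecodeError as exc:
    print(exc.start)                                        # 128
```

This is also why decoding with Latin-1 can silently "succeed" on data that was actually written in another encoding. The result is wrong characters rather than an error.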

The Unicode Character Set

In the Unicode standard, characters are grouped into scripts. Letters in different scripts, even when they are related by graphic representation or semantics, are represented by different characters. For example, LATIN CAPITAL LETTER A (U+0041) and GREEK CAPITAL LETTER ALPHA (U+0391) are different characters, even though they look the same, because they belong to different scripts.

The code points of the Unicode standard range from U+0000 to U+10FFFF, but not all code points are allocated (i.e. assigned to a character). Some are reserved for future expansion of a script, and some are intended for other uses. So, even when a code point in a text stream is encoded correctly, it may not stand for any character.
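The standard library’s unicodedata module lets us observe both points. The sketch assumes that U+0378 is still unassigned in the Unicode version bundled with your Python; it has been unassigned for many versions.

```python
import unicodedata

latin_a = "\u0041"
greek_alpha = "\u0391"

# They may look identical on screen, but they are different characters
print(latin_a == greek_alpha)            # False
print(unicodedata.name(latin_a))         # LATIN CAPITAL LETTER A
print(unicodedata.name(greek_alpha))     # GREEK CAPITAL LETTER ALPHA

# U+0378 lies inside the Greek block but isn't assigned to any character:
# its general category is 'Cn' (unassigned) and it has no name
print(unicodedata.category("\u0378"))    # Cn
print(unicodedata.name("\u0378", None))  # None

# Code points stop at U+10FFFF
try:
    chr(0x110000)
except ValueError as exc:
    print("ValueError:", exc)
```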

The Basic Multilingual Plane (BMP) and Surrogate Pairs

The Basic Multilingual Plane (BMP) refers to the set of characters from U+0000 to U+FFFF, containing the most frequently used scripts. The code points from U+0000 to U+00FF follow precisely the arrangement of Latin-1, the first 128 of which, in turn, make up the ISO 646 IRV (ASCII).

The code points from U+D800 to U+DFFF are called surrogates. Their only purpose is to allow code points outside the BMP to be encoded in UTF-16, as pairs of 16-bit code units. They are grouped into high surrogates (U+D800 to U+DBFF) and low surrogates (U+DC00 to U+DFFF). We will not describe the algorithm to convert surrogate pairs into code points (and the other way around). We’ll only stress that surrogates are used in pairs, and that each element of the pair has 1024 (2^10) possible values. So we can make up to 2^20 different pairs, which is exactly the number of code points outside the BMP (0x10FFFF - 0xFFFF).
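For readers who do want to see the conversion, here is a minimal sketch of the standard UTF-16 surrogate arithmetic. The function names are hypothetical. The last line checks the result against Python’s own UTF-16 codec, using U+1F600 GRINNING FACE as an example.

```python
def to_surrogate_pair(code_point: int) -> tuple:
    """Hypothetical helper: split a code point outside the BMP into (high, low)."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("only code points outside the BMP need surrogates")
    offset = code_point - 0x10000        # a 20-bit number
    high = 0xD800 + (offset >> 10)       # top 10 bits
    low = 0xDC00 + (offset & 0x3FF)      # bottom 10 bits
    return high, low


def from_surrogate_pair(high: int, low: int) -> int:
    """Hypothetical helper: combine a (high, low) surrogate pair into a code point."""
    return 0x10000 + ((high - 0xD800) << 10) + (low - 0xDC00)


# 2**10 high surrogates times 2**10 low surrogates = 2**20 pairs
print(1024 * 1024 == 0x10FFFF - 0xFFFF)   # True

high, low = to_surrogate_pair(0x1F600)    # U+1F600 GRINNING FACE
print(hex(high), hex(low))                # 0xd83d 0xde00

# Python's UTF-16 codec produces the same pair
print("\U0001F600".encode("utf-16-be").hex())  # d83dde00
```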

The UTF-8 Encoding

The Unicode character encoding model defines three encoding forms (called Unicode Transformation Formats): UTF-8, UTF-16 and UTF-32. These specify how each code point (an integer) translates to one or more code units, which are 8-bit units for UTF-8, 16-bit units for UTF-16 and 32-bit units for UTF-32. To deal with the order of bytes (or endianness) in the binary representation of code units, the Unicode standard defines seven encoding schemes: UTF-8 (which has no endianness), UTF-16, UTF-16BE (big-endian), UTF-16LE (little-endian), UTF-32, UTF-32BE and UTF-32LE. For example, the character LATIN CAPITAL LETTER A has the following representation in each encoding scheme:

| Encoding Scheme | Hexadecimal Representation |
|---|---|
| UTF-8 | 41 |
| UTF-16BE | 00 41 |
| UTF-16LE | 41 00 |
| UTF-32BE | 00 00 00 41 |
| UTF-32LE | 41 00 00 00 |

The main differences between these encoding forms are:

- UTF-8 encodes all Unicode code points using variable-length byte sequences of 1 to 4 bytes. All code points below U+0080 are encoded in the same way as in ASCII. Thus, a sequence of characters looks exactly the same whether it is encoded in ASCII or in UTF-8, provided that there is no BOM and no code point above U+007F. Since the UTF-8 code unit is a single byte, endianness is not an issue.

- UTF-16 encodes each code point in the BMP as a single 2-byte code unit. Code points outside the BMP are encoded as surrogate pairs (two code units, 4 bytes).

- UTF-32 encodes all Unicode code points as 4-byte sequences. It doesn’t require the rather complicated operations for decoding / encoding UTF-8 sequences, nor the conversion of surrogate pairs, but it isn’t space-efficient.
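Python’s codecs make these differences easy to inspect. The sketch below first reproduces the LATIN CAPITAL LETTER A table. It then counts the bytes needed for characters from different ranges, using a hypothetical byte_sizes() helper.

```python
# Python codec names for the encoding schemes in the table above
SCHEMES = ["utf-8", "utf-16-be", "utf-16-le", "utf-32-be", "utf-32-le"]

# LATIN CAPITAL LETTER A in each encoding scheme
for scheme in SCHEMES:
    print(scheme, "A".encode(scheme).hex())
# utf-8 41, utf-16-be 0041, utf-16-le 4100, utf-32-be 00000041, utf-32-le 41000000


def byte_sizes(char):
    """Hypothetical helper: bytes needed for `char` in UTF-8, UTF-16LE, UTF-32LE."""
    return [len(char.encode(s)) for s in ("utf-8", "utf-16-le", "utf-32-le")]


for char in ["A", "é", "€", "\U0001F600"]:
    print(f"U+{ord(char):04X}", byte_sizes(char))
# U+0041 [1, 2, 4]
# U+00E9 [2, 2, 4]
# U+20AC [3, 2, 4]
# U+1F600 [4, 4, 4]

# Pure ASCII text encodes identically in ASCII and UTF-8
print("csv".encode("ascii") == "csv".encode("utf-8"))  # True

# Without an explicit byte order, Python's 'utf-16' codec prepends a BOM
print(len("A".encode("utf-16")))  # 4: 2 bytes of BOM + 2 bytes for 'A'
```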

Detecting a File Encoding Using BOM

Even though the Unicode standard defines all possible ways of encoding code points, we still have a problem: how can we determine which encoding scheme has been used just by reading the data stream? The Unicode standard defines a character, U+FEFF, that can serve as a Byte Order Mark (BOM). When used, it resides at the very beginning of the file and is not part of the data. It just serves the purpose of identifying the encoding scheme: by reading it, a library can select the correct decoder. The following table lists the representation of the BOM in each encoding scheme:

| Encoding Scheme | Hexadecimal Representation |
|---|---|
| UTF-8 | EF BB BF |
| UTF-16BE | FE FF |
| UTF-16LE | FF FE |
| UTF-32BE | 00 00 FE FF |
| UTF-32LE | FF FE 00 00 |
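A library that selects its decoder from the BOM could work roughly like the sketch below. The helpers detect_bom() and decode_with_bom() are hypothetical. The BOM byte strings come from the codecs module’s BOM_* constants.

The check order matters. The UTF-32LE BOM begins with the UTF-16LE one (FF FE), so longer BOMs must be checked first. Even so, this is only a heuristic: UTF-16LE text whose first character is U+0000 would be mistaken for UTF-32LE.

```python
import codecs

# Longer BOMs first: FF FE 00 00 (UTF-32LE) starts with FF FE (UTF-16LE)
BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def detect_bom(data: bytes):
    """Hypothetical helper: return (encoding, bom_length), or None without a BOM."""
    for bom, encoding in BOMS:
        if data.startswith(bom):
            return encoding, len(bom)
    return None


def decode_with_bom(data: bytes, default: str = "utf-8") -> str:
    """Hypothetical helper: decode `data`, letting its BOM select the encoding."""
    detected = detect_bom(data)
    if detected is None:
        return data.decode(default)
    encoding, size = detected
    # Skip the BOM itself: it is not part of the text
    return data[size:].decode(encoding)


print(detect_bom(codecs.BOM_UTF8 + b"Band,Artist"))         # ('utf-8', 3)
print(decode_with_bom("Justine Daaé".encode("utf-16")))    # Justine Daaé
```

Python also ships a 'utf-8-sig' codec, which strips a leading UTF-8 BOM if present. It is often simpler than handling the BOM by hand.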

Now that you understand the differences between encoding forms, we have enough information to turn our attention to reading text data in Python, and CSV files in particular.

Reading CSV Data

The two most basic operations we require from a CSV library are reading and writing CSV data. When a library reads CSV data, it must perform the following actions:

- Decoding: The library must first turn a sequence of bytes into a sequence of characters from a specific charset, i.e. it must decode the file. This part is tricky, since the library may not know the encoding of the file. At this point the library may raise an exception. For example, it may generate an error message if it can’t detect the encoding or if it encounters sequences of bytes that it doesn’t know how to decode.

- Parsing: After decoding, the library must divide the file into records, and the records into fields.

- Converting: (optional) The library can convert each field, which is just a sequence of characters, into another object. For example, it can convert an integer literal (a str object) into a Python int.

- Fetching: Finally, it returns the results to the caller. It can fetch one record at a time, thus avoiding having to read and store the entire file in main memory.

That’s the general approach a library follows when reading CSV data. In this section we’ll specifically see how the Python csv library reads CSV data.

Defining our Sample CSV File

In this section we’ll provide a table used by many of the examples in this part of the tutorial. This table will also be used in our subsequent tutorials on Python CSV files. Here’s the general layout of our table:

| Field Name | Description | Type | Python Type |
|---|---|---|---|
| Band | Name of the band the artist leads | plain text | str |
| Artist | Name of the lead singer of the band | plain text | str |
| Birth Date | Date of birth of the artist | YYYY-MM-DD date | datetime.date |
| Country | Country of origin of the lead singer using ISO 3166 alpha-3 country codes | fixed-length plain text | str |
| Album | A remarkable album by that band (that's not up for debate) | plain text | str |
| Year | Release year of the album | integer | int |

We want to pay particular attention to the data type and Python type when we start describing adapters in part four of our tutorial. This is a table of female-fronted symphonic-metal bands.

| Band | Artist | Birth Date | Country | Album | Year |
|---|---|---|---|---|---|
| Epica | Simone Simons | 1985-01-17 | NLD | Requiem for the Indifferent | 2012 |
| Amberian Dawn | Päivi "Capri" Virkkunen | 1972-02-28 | FIN | Innuendo | 2015 |
| Beyond The Black | Jennifer Haben | 1995-07-16 | DEU | Lost in Forever | 2016 |
| Arven | Carina Hanselmann | 1986-04-16 | DEU | Music of Light | 2011 |
| Sleeping Romance | Federica Lanna | 1986-04-26 | ITA | Alba | 2017 |
| Ex Libris | Dianne van Giersbergen | 1985-06-03 | NLD | Medea | 2014 |
| Visions Of Atlantis | Clementine Delauney | 1987-02-11 | FRA | The Deep & The Dark | 2018 |
| Nightwish | Floor Jansen | 1981-02-21 | NLD | Endless Forms Most Beautiful | 2015 |
| Leaves' Eyes | Liv Kristine Espenæs | 1976-02-14 | NOR | Vinland Saga | 2005 |
| Within Temptation | Sharon den Adel | 1974-07-12 | NLD | The Silent Force | 2004 |
| Dark Sarah | Heidi Parviainen | 1979-03-09 | FIN | Behind the Black Veil | 2015 |
| Delain | Charlotte Wessels | 1987-05-13 | NLD | Lucidity | 2006 |
| Katra | Katra Solopuro | 1984-08-10 | FIN | Katra | 2007 |
| Ancient Bards | Sara Squadrani | 1986-02-14 | ITA | The Alliance of the Kings | 2010 |
| Xandria | Manuela Kraller | 1981-08-01 | DEU | Neverworld's End | 2012 |
| Midnattsol | Carmen Elise Espenæs | 1983-09-30 | NOR | The Aftermath | 2018 |
| Elyose | Justine Daaé | | FRA | Ipso Facto | 2015 |

We’re going to make the following assumptions about the CSV file made from this table:

- It uses the default formatting parameters.

- It is encoded in UTF-8.

- It uses only line feed (U+000A) as the line delimiter.

We’ve saved the contents of this table to the CSV file

You can see the CSV file contains a variety of data types (strings, fixed-length strings, integers, dates). This is intentional because in our fourth tutorial on the Python csv module, we’re going to teach you about adapters and converters.

Decoding the Data Source with the codecs Module

In our sample file, a few fields contain characters that don’t belong to the ASCII character set:

| String | Offset | Char | Code Pt | Latin-1 | UTF-8 | UTF-16LE |
|---|---|---|---|---|---|---|
| Päivi Virkkunen | 1 | ä | U+00E4 | 0xE4 | 0xC3A4 | 0xE400 |
| Liv Kristine Espenæs | 18 | æ | U+00E6 | 0xE6 | 0xC3A6 | 0xE600 |
| Justine Daaé | 11 | é | U+00E9 | 0xE9 | 0xC3A9 | 0xE900 |

The following legend explains the columns of the table above:

- String is a field from the sample file.

- Offset is the position of the non-ASCII character inside the string (starting at 0 from the left).

- Char is the non-ASCII character from the string.

- Code Pt is the Unicode code point of the character. Refer to the Latin-1 chart above.

- Latin-1, UTF-8 and UTF-16LE are the representations of the code point in different encoding forms. They appear, respectively, in the sample files sample-latin1.csv, sample.csv and sample-utf16le.csv included in our zip file. To compare the different samples, you should use a hex editor.
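If you don’t have a hex editor at hand, a few lines of Python can recompute every row of the table above. The describe_nonascii() helper is hypothetical. It works on the strings themselves rather than on the sample files.

```python
SAMPLES = ["Päivi Virkkunen", "Liv Kristine Espenæs", "Justine Daaé"]


def describe_nonascii(s: str):
    """Hypothetical helper: yield (offset, char, code point, Latin-1, UTF-8, UTF-16LE)."""
    for offset, char in enumerate(s):
        if ord(char) > 0x7F:
            yield (offset, char, f"U+{ord(char):04X}",
                   char.encode("latin-1").hex().upper(),
                   char.encode("utf-8").hex().upper(),
                   char.encode("utf-16-le").hex().upper())


for s in SAMPLES:
    for row in describe_nonascii(s):
        print(s, *row)
# Päivi Virkkunen 1 ä U+00E4 E4 C3A4 E400
# Liv Kristine Espenæs 18 æ U+00E6 E6 C3A6 E600
# Justine Daaé 11 é U+00E9 E9 C3A9 E900
```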

Now we’ll turn our attention to decoding using the Python codecs module. This module in Python provides two useful functions: codecs.decode() and codecs.open(). Let’s take a closer look at them.

codecs.decode(obj, encoding='utf-8', errors='strict')

codecs.open(filename, mode='r', encoding=None, errors='strict', buffering=-1)

The codecs.decode() function decodes a bytes-like object using the specified encoding form. It returns a str object. It is useful when we retrieve a single record from the source as bytes and want to decode it. The decode() function has the following arguments:

- obj: the object to decode. It can be a bytes or a bytearray object, but it can’t be an iterable or an iterator.

- encoding: a string specifying the encoding form. The same encoding form may have many aliases. Below we’ll list only the ones we need. Refer to the official documentation for a list of all encodings supported by the codecs module.

- errors: a string specifying the error handling scheme. strict raises an exception if an ill-formed sequence of bytes is encountered, while ignore just skips the ill-formed sequence without notifying the caller. There are other options available, as well.
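The effect of the errors argument is easiest to see on a byte string that isn’t valid UTF-8. In the sketch below, 'café' is encoded in Latin-1 and then decoded as UTF-8. It also shows the 'replace' handler, one of the other available options. That handler is the source of the replacement characters mentioned at the start of this section.

```python
import codecs

data = b"caf\xe9"  # 'café' encoded in Latin-1, not in UTF-8

# errors='strict' (the default) raises UnicodeDecodeError
try:
    codecs.decode(data, "utf-8")
except UnicodeDecodeError as exc:
    print("strict:", exc.reason)

# errors='ignore' silently drops the ill-formed bytes
print("ignore:", codecs.decode(data, "utf-8", "ignore"))           # caf

# errors='replace' substitutes U+FFFD REPLACEMENT CHARACTER
print("replace:", ascii(codecs.decode(data, "utf-8", "replace")))  # 'caf\ufffd'

# Decoding with the right encoding form gives the expected text
print(codecs.decode(data, "latin-1"))                              # café
```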

The codecs.open() function returns a file-like object. We’ll get one decoded line at a time when we iterate over this object. It has the following parameters:

- filename: the file system path where the source is located.

- mode: the mode in which the file is opened, similar to the built-in open() function we discussed in an earlier tutorial. When an encoding is specified, files are always opened in binary mode, and no automatic conversion of \n is done on reading and writing.

- encoding: same as in decode(), but notice that the default value is None. In that case codecs.open() falls back to the built-in open(), so the locale encoding will be used; you can retrieve it with getpreferredencoding(False) from the locale module.

- errors: behaves the same as in decode().

- buffering: 0 disables buffering, 1 enables line buffering, and any other positive integer sets the size of the buffer. The default, -1, uses the default buffer size.

Let’s see these Python functions at work using the encoded sample CSV files we described above.

```python
import codecs


def is_nonascii(c):
    """ Returns True if `c` is outside the ASCII range. """
    return ord(c) > 0x7f


def detect_nonascii(s):
    """ List all code points outside the ASCII range. """
    l = []
    for c in s:
        if is_nonascii(c):
            l.append(c)
    return l


def repr_bytes(bstr):
    """ Represent a byte sequence as an hexadecimal string. """
    s = ""
    for b in bstr:
        s += "%02x" % b
    return s


def test_decode_by_line():
    """ Print (lineno, char, codept) of each non-ASCII char. """
    with open('sample.csv', 'rb') as src:
        n = 0
        for line in src:
            n += 1
            # Each line is a bytes object: decode it explicitly
            s = codecs.decode(line, encoding='utf-8')
            for c in detect_nonascii(s):
                print("%-3d, %-3s, U+%04x, %s" %
                      (n, c, ord(c),
                       repr_bytes(codecs.encode(c, 'utf-8'))))


def test_decode_file():
    """ Test all sample files. """
    n = 0
    # codecs.open() returns file-like objects that decode each line for us
    with codecs.open(
            'sample.csv', encoding='utf-8') as utf8_src, codecs.open(
            'sample-latin1.csv', encoding='latin-1') as latin1_src, codecs.open(
            'sample-utf16le.csv', encoding='utf-16le') as utf16le_src:
        for utf8_line, latin1_line, utf16le_line in zip(
                utf8_src, latin1_src, utf16le_src):
            n += 1
            for c in detect_nonascii(latin1_line):
                print("%-3d, %-3s, U+%04x, %-4s, %-4s, %-4s" %
                      (n, c, ord(c),
                       repr_bytes(codecs.encode(c, 'latin-1')),
                       repr_bytes(codecs.encode(c, 'utf-8')),
                       repr_bytes(codecs.encode(c, 'utf-16le'))))


if __name__ == '__main__':
    test_decode_by_line()
    test_decode_file()
```

So, what’s going on in this example?

- is_nonascii(): tests whether a character has a code point outside the valid ASCII range (i.e. greater than 0x7F).

- detect_nonascii(): returns a list of all non-ASCII characters in a line.

- repr_bytes(): represents a byte sequence as a hexadecimal string.

- test_decode_by_line(): for each non-ASCII character in the source, prints the line number, the character, its code point and its UTF-8 representation. It opens the source in binary mode with the built-in open() function, then uses codecs.decode() to decode each line. The codecs.encode() function accepts a string and encodes it using the given encoding form.

- test_decode_file(): uses codecs.open() to open each source file and iterates over their lines, printing out only the non-ASCII characters. Its output is similar to the table above: for each non-ASCII character, it lists the Unicode code point and its representations in the Latin-1, UTF-8 and UTF-16LE encoding forms.

This gives you an idea of the checks you should do when encountering unusual characters in your CSV file. In the following sections we will let the built-in open() function handle all the details of decoding, since we will only deal with UTF-8 encoded sources.

The Python CSV reader() Function

The csv module provides a reader() function with the following signature:

csv.reader(source, dialect='excel', **fmtparams)

- source can be any object that supports the iterator protocol and returns a str each time its __next__() method is called. This is important because it means a list or tuple of strings can also be used as a CSV source: you’re not limited to file objects.

- dialect is an optional parameter that can be either the name of a registered dialect or a dialect class. This is why we covered CSV dialects in such detail in our first tutorial.

- fmtparams is an optional sequence of keyword arguments in the form param=value, where param is one of the formatting parameters and value is its value. If both the dialect and some formatting parameters are passed, the formatting parameters override the respective attributes of the dialect.
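Here is a quick sketch of how dialect and fmtparams interact, using an in-memory list as the source. The SemicolonDialect class is hypothetical, defined here only for illustration.

```python
import csv


class SemicolonDialect(csv.excel):
    """Hypothetical dialect: Excel conventions, but ';' as delimiter."""
    delimiter = ";"


records = ["a;b;c\n", "1;2;3\n"]

# With the plain 'excel' dialect, ',' is the delimiter: one field per line
excel_rows = list(csv.reader(records, dialect="excel"))
# A formatting parameter overrides the dialect's delimiter attribute
override_rows = list(csv.reader(records, dialect="excel", delimiter=";"))
# Passing a dialect class works too
class_rows = list(csv.reader(records, dialect=SemicolonDialect))

print(excel_rows)     # [['a;b;c'], ['1;2;3']]
print(override_rows)  # [['a', 'b', 'c'], ['1', '2', '3']]
print(class_rows)     # [['a', 'b', 'c'], ['1', '2', '3']]
```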

Let’s see some examples using our sample CSV data from earlier. For these examples to work, you’ll need import csv at the top of your Python scripts.

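```python
import csv


def reading_from_file():
    """ Read CSV data from the `sample.csv` file. """
    with open('sample.csv', 'rt', encoding='utf-8', newline='') as src:
        # csv.reader() recognizes both '\r' and '\n' as line endings and
        # ignores lineterminator, which only affects writers
        reader = csv.reader(src, dialect='excel', lineterminator='\n')
        for row in reader:
            print(row)
```

Notice that we specified encoding='utf-8'; without it, the open() function would have used the locale encoding. We also passed newline='', as the csv documentation recommends for file objects, so that newlines embedded in quoted fields are interpreted correctly.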

Our next example shows how you can use csv.reader() to read a list of strings instead of a file object.

```python
import csv


def reading_from_list():
    """ Reading CSV records from a list. """
    records = ["a,b,c,d\n", "1,2,3,4\n", "10,20,30,40\n"]
    for row in csv.reader(records, dialect='excel'):
        print(row)
```

csv.reader() iterates over the list, yielding a list of fields at each iteration. Most people only use csv.reader() to read CSV files, but it’s much more powerful than that. In addition to reading files and lists, it can even read data from a generator function.

```python
import csv


def generate_csv_data():
    """ Generator function for sample CSV data. """
    yield "a,b,c,d\n"
    for i in range(1, 10):
        yield ",".join(map(str, range(i*10, i*10+4))) + "\n"


def reading_from_generator_function():
    """ Reading CSV data from a generator function. """
    for row in csv.reader(generate_csv_data()):
        print(row)


def reading_from_generator():
    """ Reading CSV data from a generator. """
    for row in csv.reader(
            (",".join(map(str, range(i*10, i*10+4))) for i in range(1, 10))):
        print(row)
```

The generate_csv_data() function is a generator function that yields a header on the first iteration, then one record on each subsequent iteration. The expression in its second yield statement can be broken down as follows:

- range(i*10, i*10+4) is the sequence of all numbers from i*10 to (i*10)+3.

- map() calls the str() function on every element of the range.

- ",".join() concatenates all the elements produced by map(), using "," as a delimiter.

- + "\n" adds the record delimiter, so the expression generates a full record.

The reading_from_generator_function() function uses generate_csv_data() to feed csv.reader() with CSV data. Notice that the CSV data read by reading_from_list() must be stored in memory in its entirety, while the CSV data consumed by reading_from_generator_function() is created on the fly.

The same holds true for the reading_from_generator() function, which uses a generator expression instead of a function. When you call the reading_from_generator() function, you get this output:

```text
['10', '11', '12', '13']
['20', '21', '22', '23']
['30', '31', '32', '33']
['40', '41', '42', '43']
['50', '51', '52', '53']
['60', '61', '62', '63']
['70', '71', '72', '73']
['80', '81', '82', '83']
['90', '91', '92', '93']
```

Be aware of how csv.reader() handles the strings fetched by the iterator, as the following example shows.

```python
import csv


def incremental_csv_generator():
    """ Generates partial records at each call. """
    for i in range(1, 5):
        # Generate first field
        yield str(i)
        for j in range(2, 5):
            # Generate following fields
            yield ",%s" % (i*j)
        # Generate record delimiter
        yield "\n"


def reading_incrementally():
    """ Reading incomplete records from a generator function. """
    for row in csv.reader(incremental_csv_generator()):
        print(row)
```

The incremental_csv_generator() function yields part of a record at each iteration, and it takes five iterations to produce a full record. We might expect csv.reader() to store those parts somewhere, concatenate them, and fetch a record only when it is complete. Instead, csv.reader() treats each string it receives as a separate line, and reading_incrementally() prints the following output:

```text
['1']
['', '2']
['', '3']
['', '4']
[]
['2']
['', '4']
['', '6']
['', '8']
[]
['3']
['', '6']
['', '9']
['', '12']
[]
['4']
['', '8']
['', '12']
['', '16']
[]
```

That’s not what we want, is it?
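One way around this is to put a small adapter between the generator and the reader. The adapter buffers the chunks and passes on only complete lines. Here is a minimal sketch; join_lines() is a hypothetical helper, not part of the csv module. Because it still yields one line at a time, the data keeps streaming instead of being loaded into memory all at once.

```python
import csv


def incremental_csv_generator():
    """ Generates partial records at each call (same as above). """
    for i in range(1, 5):
        yield str(i)
        for j in range(2, 5):
            yield ",%s" % (i * j)
        yield "\n"


def join_lines(chunks):
    """Hypothetical adapter: buffer string chunks and yield complete lines."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        # Emit every complete line currently held in the buffer
        while "\n" in buffer:
            line, buffer = buffer.split("\n", 1)
            yield line + "\n"
    if buffer:
        # Trailing data without a final newline
        yield buffer


rows = list(csv.reader(join_lines(incremental_csv_generator())))
for row in rows:
    print(row)
# ['1', '2', '3', '4']
# ['2', '4', '6', '8']
# ['3', '6', '9', '12']
# ['4', '8', '12', '16']
```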

The Python CSV DictReader Class

An alternative to csv.reader() is the csv.DictReader class. It fetches each record as a dictionary, so that each field can be accessed by name. The dictionary is a plain dict since Python 3.8, and a collections.OrderedDict in Python 3.6 and 3.7. The __init__() method of the DictReader class accepts the following arguments:

- source: the CSV data source. It behaves the same as the source argument of csv.reader().

- fieldnames: (optional) a sequence of field names. If omitted, the first row of the source is used as the list of names. This list sets the length of the records.

- restkey: (optional) if a record has more fields than fieldnames, the extra fields are collected in a list stored under this key. It defaults to None.

- restval: (optional) if a record has fewer fields than fieldnames, the missing fields are filled in with this value. It defaults to None.

- dialect: (optional) the CSV dialect. It behaves the same as the dialect argument of csv.reader().

- fmtargs: formatting parameters, passed as keyword arguments. They behave the same as the fmtparams argument of csv.reader().
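To see restkey and restval at work, here is a small sketch that feeds DictReader an in-memory list. The list has one record that is too short and one that is too long. The values "extra" and "n/a" are arbitrary choices for this illustration.

```python
import csv

lines = [
    "Band,Country,Year\n",
    "Epica,NLD\n",                 # one field short
    "Delain,NLD,2006,Lucidity\n",  # one field too many
]

reader = csv.DictReader(lines, restkey="extra", restval="n/a")
records = [dict(record) for record in reader]
for record in records:
    print(record)
# {'Band': 'Epica', 'Country': 'NLD', 'Year': 'n/a'}
# {'Band': 'Delain', 'Country': 'NLD', 'Year': '2006', 'extra': ['Lucidity']}
```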

Let’s once again look at our sample.csv example. This time we’re going to use the sample file to demonstrate how the DictReader class behaves.

```python
import csv


def reading_dictreader():
    """ Reading CSV data from file using `DictReader`. """
    with open('sample.csv', 'rt', encoding='utf-8', newline='') as src:
        for artist in csv.DictReader(src, dialect='excel'):
            print("%s (%s)" % (artist["Artist"], artist["Country"]))
```

The reading_dictreader() function iterates over the DictReader object, which yields one dictionary per record. Because each record is a dictionary, we can quickly extract and print just the name and the country of each artist:

```text
Simone Simons (NLD)
Päivi "Capri" Virkkunen (FIN)
Jennifer Haben (DEU)
Carina Hanselmann (DEU)
Federica Lanna (ITA)
Dianne van Giersbergen (NLD)
Clementine Delauney (FRA)
Floor Jansen (NLD)
Liv Kristine Espenæs (NOR)
Sharon den Adel (NLD)
Heidi Parviainen (FIN)
Charlotte Wessels (NLD)
Katra Solopuro (FIN)
Sara Squadrani (ITA)
Manuela Kraller (DEU)
Carmen Elise Espenæs (NOR)
Justine Daaé (FRA)
```

Note that the built-in open() function in Python 2 doesn’t support the encoding or newline arguments, so if you get an error, try removing those arguments and running the code again.

Caveats

There are some important caveats we should be aware of when using the Python csv library to read CSV data:

- Two consecutive delimiters denote an empty field. Empty fields do not need to be quoted.

- Interpretation of the data is the job of the application, so fields are delivered as pure strings.

- csv.reader() fetches lists of fields without checking the length of the records.

- csv.DictReader fetches records with the same length as the header (or the fieldnames argument), filling in missing fields with restval and collecting extra fields under restkey.

- If a CSV source contains a header, csv.reader() fetches it together with the rest of the records. You may use a Sniffer to detect whether a file has a header and, if so, skip the first row. csv.DictReader, by contrast, uses the first row as field names when fieldnames is omitted, so the header is never returned as a record.
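Here is a minimal sketch of the Sniffer approach on an in-memory sample. Keep in mind that Sniffer.has_header() is a heuristic: it compares the first row with the following ones, column by column. It works well for columns like Year, where the header is text and the values are numbers, but it can guess wrong on other data.

```python
import csv
import io

sample = "Band,Year\nEpica,2012\nDelain,2006\nKatra,2007\n"

# has_header() guesses from the sample; it doesn't read the whole file
has_header = csv.Sniffer().has_header(sample)

reader = csv.reader(io.StringIO(sample, newline=""))
if has_header:
    next(reader)  # skip the header row
rows = list(reader)

print(has_header)  # True
print(rows)        # [['Epica', '2012'], ['Delain', '2006'], ['Katra', '2007']]
```

With a real file, you would typically pass the first few kilobytes read from it to has_header(), then seek back to the start before creating the reader.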

Handling Exceptions

Filtering Exceptions

Many things can go wrong while reading and writing data with the csv library. For starters,

- The source or destination file may not be available.

- A decoding or encoding error can occur while trying to read or write data.

- The decoded CSV data can be ill-formed.

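In these (and many other) cases, an exception will be raised. Errors detected by the csv module itself are mostly reported as csv.Error. I/O and decoding errors, on the other hand, surface as the usual built-in exceptions (OSError and UnicodeDecodeError). These distinct exception types make the task of filtering exceptions with except clauses easier. For example: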

```python
import csv
import sys
import traceback


def handle_system_error(error):
    """ Handler for I/O errors. """
    print("System error: %s" % traceback.format_exc())


def handle_decoding_error(error):
    """ Handler for decoding strings with UTF-8. """
    print("Decoding error: %s" % traceback.format_exc())


def handle_reader_error(error, reader):
    """ Handler for `csv.reader()` exceptions. """
    print("CSV reader error at line %d: %s" %
          (reader.line_num, traceback.format_exc()))


def handle_other_errors(error):
    """ Catch-all handler. """
    print("Extrema ratio.")
    sys.exit(1)


def convert_row(row):
    """ Convert a CSV record in a list of Python objects. """
    return row


def finalize_rows(rows):
    """ Perform some operations on rows before exiting. """
    return rows


def process_csv_data(path: str):
    """ Filtering exceptions from the `csv` module. """
    rows = []
    try:
        with open(path, 'rt', encoding='utf-8', newline='') as src:
            # strict=True makes the reader raise csv.Error on ill-formed data
            reader = csv.reader(src, strict=True)
            for row in reader:
                rows.append(convert_row(row))
    except OSError as error:
        # Missing file, permission denied, ... (EnvironmentError is an alias)
        handle_system_error(error)
    except UnicodeDecodeError as error:
        handle_decoding_error(error)
    except csv.Error as error:
        handle_reader_error(error, reader)
    except Exception as error:
        # A bare `except:` would leave `error` undefined here
        handle_other_errors(error)
    else:
        rows = finalize_rows(rows)
    return rows


def test_exception():
    """ Raising different kinds of exceptions. """
    # Wrong file path
    process_csv_data('nowhere.csv')
    # Decoding error
    process_csv_data('decode.csv')
    # Reader error
    process_csv_data('faulty-sample.csv')
    # Finally, we got it right
    rows = process_csv_data('sample.csv')
    print("%d records read from 'sample.csv'" % len(rows))
```

Here handle_system_error(), handle_decoding_error(), handle_reader_error() and handle_other_errors() are functions suitable for handling the respective kinds of errors (here they just print an error message). The line_num attribute of the object returned by csv.reader() is the number of lines read so far from the source. test_exception() triggers different kinds of errors:

- An I/O error: nowhere.csv isn’t a valid file path.

- A decoding error: decode.csv contains a bunch of 0xFF bytes, which cannot be decoded using UTF-8.

- A reader error: faulty-sample.csv contains an ill-formed CSV document (an unescaped quote character within a quoted field). Notice that we must set strict to True if we want the reader to raise an exception for this kind of error.
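The effect of strict can be reproduced without any sample files. The sketch below uses a quoted field followed by a stray character. The try_parse() helper is hypothetical: it returns the rows, or the csv.Error message. The last lines show the kind of UnicodeDecodeError that 0xFF bytes cause.

```python
import csv


def try_parse(lines, **fmtparams):
    """Hypothetical helper: return ('ok', rows) or ('error', message)."""
    try:
        return "ok", list(csv.reader(lines, **fmtparams))
    except csv.Error as exc:
        return "error", str(exc)


faulty = ['"a"b,c\n']  # a stray 'b' follows the closing quote of the first field

print(try_parse(faulty))               # ('ok', [['ab', 'c']])
print(try_parse(faulty, strict=True))  # ('error', <the csv.Error message>)

# 0xFF never appears in valid UTF-8, so decoding such bytes always fails
try:
    b"Band,Year\n\xff\xff,2012\n".decode("utf-8")
except UnicodeDecodeError as exc:
    print("UnicodeDecodeError:", exc.reason)
```

Without strict, the reader quietly glues the stray character onto the field. That is usually worse than an exception, because the corrupted value flows into the rest of the program unnoticed.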

Exceptions Raised by the csv Module

The following table lists some exceptions raised by functions and classes of the Python csv module. Some are documented, while others have been found by trial and error. Have “fun” finding others. The Type column is useful when filtering them. The Raised by column shows which object raises the exception, the Error Message column reports the error message, and the Cause column explains what triggers it.

| Type | Raised by | Error Message | Cause |
|---|---|---|---|
| csv.Error | get_dialect() | unknown dialect | Passing an unregistered dialect name. |
| csv.Error | Sniffer.sniff() | Could not determine delimiter | Passing ill-formed CSV data. |
| csv.Error | csv.reader() | Escape sequence found | When doublequote is False, escapechar is None, and a quotechar is found in the field. |
| csv.Error | csv.reader() | ',' expected after '"' | A quote character inside a quoted field followed by something other than a delimiter, another quote or a line break (only when strict is True). |
| ValueError | DictWriter.writerow() | dict contains fields not in fieldnames | Passing a record of dict type with a field not belonging to the header of the DictWriter object. |
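Several rows of the table can be reproduced with a few lines of code. This is a sketch: error_of() is a hypothetical helper that runs an action and reports the exception type name and message. Note that csv.Error’s class name is simply Error.

```python
import csv
import io


def error_of(action):
    """Hypothetical helper: run `action`, return (type name, message) or None."""
    try:
        action()
    except (csv.Error, ValueError) as exc:
        return type(exc).__name__, str(exc)
    return None


# Passing an unregistered dialect name
print(error_of(lambda: csv.get_dialect("no-such-dialect")))
# ('Error', 'unknown dialect')

# A stray character after a closing quote, with strict=True
print(error_of(lambda: list(csv.reader(['"a"b,c'], strict=True))))

# A dict with a key that isn't among the DictWriter's fieldnames
writer = csv.DictWriter(io.StringIO(), fieldnames=["Band", "Year"])
print(error_of(lambda: writer.writerow({"Band": "Epica", "Album": "Alba"})))
```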

Closing Thoughts

In the second part of our tutorial on the Python csv module, we learned the relationship between character sets and encoding forms, which is fundamental when storing textual data in digital form. We took a little peek at the codecs module to see how to handle data in different encoding forms. Then we gave examples demonstrating how to read CSV data from a variety of sources using the csv.reader() function and the csv.DictReader class. Finally, we introduced CSV file error handling. Most notably, we described how to filter exceptions raised from the csv module. In the third part of the tutorial we’ll learn how to write CSV data using Python.


References

This is a list of normative and informative references about the Python csv module, along with some informative references about encoding. Further pointers can be found throughout the tutorial.

- Kevin Altis, Dave Cole, Andrew McNamara, Skip Montanaro, Cliff Wells, CSV File API, PEP 0305:2003

- csv – CSV File Reading and Writing in Guido van Rossum et al., The Python Library Reference, v. 3.8.0

- ECMA, 7-Bit coded Character Set, ECMA-6:1991 (equivalent to ISO 646)

- The Unicode Consortium. The Unicode Standard, Version 11.0.0, 2018

- Ken Whistler, Mark Davis, Asmus Freytag, Unicode Character Encoding Model, Unicode Technical Report #17, 2008