The Python LangChain framework allows you to develop applications that integrate large language models (LLMs). Agents in LangChain are components that let you interact with third-party tools via natural language. For example, you can use LangChain agents to access information on the web or to interact with CSV files, Pandas DataFrames, SQL databases, and so on.

A key distinction between chains and agents, both core components of LangChain, is how they operate. Chains are fixed sequences of operations executed in a predetermined order, which makes them ideal for repetitive tasks that do not require adaptability. Agents, by contrast, are designed for dynamic tasks: they use an LLM to decide at run time which tools to call and in what order, based on the input and on the results of earlier steps.
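To make the contrast concrete, here is a minimal plain-Python sketch. It does not use LangChain at all: the tools `double` and `increment` are hypothetical, and `choose_tool` is a hard-coded stand-in for the decision a real agent would delegate to an LLM.

```python
from typing import Callable, List, Optional

# Hypothetical stand-in tools; real agents would wrap APIs, files, databases, etc.
def double(x: float) -> float:
    return x * 2

def increment(x: float) -> float:
    return x + 1

def run_chain(value: float, steps: List[Callable[[float], float]]) -> float:
    """A chain: the same steps run in the same order for every input."""
    for step in steps:
        value = step(value)
    return value

def choose_tool(value: float) -> Optional[Callable[[float], float]]:
    """Stand-in for the LLM's decision about which tool to call next."""
    if value < 10:
        return double
    if value % 2 == 0:
        return increment
    return None  # nothing left to do

def run_agent(value: float, max_steps: int = 10) -> float:
    """An agent: the next step is chosen at run time from the current state."""
    for _ in range(max_steps):
        tool = choose_tool(value)
        if tool is None:
            break
        value = tool(value)
    return value

chain_result = run_chain(3, [double, increment])  # always double, then increment
agent_result = run_agent(3)                       # path depends on intermediate values
print(chain_result, agent_result)
```

The chain always performs two steps, while the agent loop keeps choosing tools until its decision function says it is done.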

This tutorial will guide you through utilizing LangChain agents to engage with third-party tools. It will cover the creation of custom agents and showcase the use of built-in LangChain agents for interactions with CSV files and Pandas DataFrames. Let’s dive in!

Importing and Installing Required Libraries

The following script installs the LangChain libraries you will need to run the code in this tutorial.

!pip install langchain
!pip install langchain-core
!pip install langchain-community
!pip install langchain-experimental
!pip install langchain-openai

The script below imports the required libraries.

import os
import pandas as pd

from langchain_core.tools import tool
from langchain_core.prompts import PromptTemplate, ChatPromptTemplate
from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain_experimental.agents.agent_toolkits import create_csv_agent, create_pandas_dataframe_agent
from langchain_community.chat_message_histories import ChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_openai import ChatOpenAI, OpenAI

Creating Custom Agents

To understand how LangChain agents work, let’s first create a custom agent. In a later section, we’ll explain how you can use built-in LangChain agents.

Creating Tools

LangChain agents use tools to interact with third-party applications. Depending on the user prompt, an agent can use one or multiple tools to perform a task.

You can create a custom tool in LangChain by defining a function with the @tool decorator. The function's parameters and type hints define the tool's inputs, and its docstring describes in natural language what the tool does. The LLM relies on that description to decide when to call the tool.
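As a rough illustration of the information a decorator like this can collect from an ordinary function, the sketch below uses only the standard library. The helper `describe_tool` and the example function `multiply` are hypothetical and are not LangChain's implementation.

```python
import inspect
from typing import Any, Callable, Dict, get_type_hints

def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Hypothetical helper: gather the metadata an LLM would need to pick a tool."""
    hints = get_type_hints(func)
    params = {
        name: getattr(hints.get(name), "__name__", "Any")
        for name in inspect.signature(func).parameters
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": params,
    }

def multiply(first_int: int, second_int: int) -> int:
    """Multiply two integers together."""
    return first_int * second_int

spec = describe_tool(multiply)
print(spec)
```

The takeaway is that a clear function name, typed parameters, and an accurate docstring are what the model sees, so they deserve as much care as the function body.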

For example, the following script defines three tools: divide, subtract, and exponentiate. The script also defines the tools list that contains these tools. We will use this list later.

@tool
def divide(first_int: int, second_int: int) -> float:
    """Divide the first integer by the second integer."""
    return first_int / second_int

@tool
def subtract(first_int: int, second_int: int) -> int:
    """Subtract the second integer from the first integer."""
    return first_int - second_int

@tool
def exponentiate(base: int, exponent: int) -> int:
    """Exponentiate the base to the exponent power."""
    return base**exponent

# Collect the tools in a list so they can be passed to the agent later.
tools = [divide, subtract, exponentiate]

Creating Prompt and LLM

Next, you need to define a template that an LLM will use to process your input query.

In the script below, we define a ChatPromptTemplate, which allows for a chatbot-style conversation with an LLM.

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful mathematician. Answer the following question.",
        ),
        ("placeholder", "{chat_history}"),
        ("human", "{input}"),
        ("placeholder", "{agent_scratchpad}"),
    ]
)

The template consists of the following components:

- system: This is a message from the system setting the context for the LLM. It tells the LLM to behave as a “helpful mathematician” and answer the user’s question.
- placeholder {chat_history}: The {chat_history} placeholder will be replaced with the actual chat history during the conversation. This allows the LLM to maintain context and continuity in the conversation.
- human: This represents the input from the user side of the conversation. The {input} placeholder will be replaced with the user’s actual input text.
- placeholder {agent_scratchpad}: The {agent_scratchpad} placeholder allows an agent to keep track of its internal state and the history of its interactions with tools.
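The sketch below shows, in plain Python, one way to picture how these four slots end up as a single list of messages. It is a simplified mental model rather than LangChain's actual code: `build_messages` is a hypothetical helper, and the scratchpad entries are made-up strings.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content)

def build_messages(
    user_input: str,
    chat_history: List[Message],
    agent_scratchpad: List[Message],
) -> List[Message]:
    """Hypothetical helper mirroring the order of the template's four slots."""
    system = ("system", "You are a helpful mathematician. Answer the following question.")
    # Each placeholder expands to zero or more messages; the human slot is exactly one.
    return [system, *chat_history, ("human", user_input), *agent_scratchpad]

# First turn: no history yet and no tool calls so far.
first_turn = build_messages("What is 2 to the power of 5?", [], [])

# Later turn: earlier exchange in chat_history, in-progress tool work in the scratchpad.
later_turn = build_messages(
    "Now square it.",
    chat_history=[("human", "What is 2 to the power of 5?"), ("ai", "32")],
    agent_scratchpad=[("ai", "call exponentiate(base=32, exponent=2)"), ("tool", "1024")],
)

for role, content in later_turn:
    print(f"{role}: {content}")
```

In this picture, chat_history carries earlier turns of the conversation, while agent_scratchpad carries the tool calls and results for the question currently being answered.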

The next step is to instantiate an LLM that you will use to interact with the agent.

In the following script, we use gpt-4-turbo, an OpenAI conversational LLM. To use this LLM in your application, you will need an OpenAI API key. You can use another LLM if you want, as long as it supports tool calling.

# Read the API key from an environment variable.
openai_api_key = os.getenv('OPENAI_API_KEY')

llm = ChatOpenAI(
    model="gpt-4-turbo",
    temperature=0,
    api_key=openai_api_key,
)

Binding Tools with the Agent

Finally, you must bind the LLM, tools, and prompt together to create an agent. You can use the langchain.agents.create_tool_calling_agent() function to do so.

Once you create an agent, you need to pass it to an AgentExecutor object, which runs the agent and executes the tool calls it requests.

The script below asks the agent to perform a sequence of mathematical operations.

agent = create_tool_calling_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

response = agent_executor.invoke({

"input": "Take 2 to the power of 5 and divide the result obtained by the result of subtracting 8 from 24, then square the whole result"

}

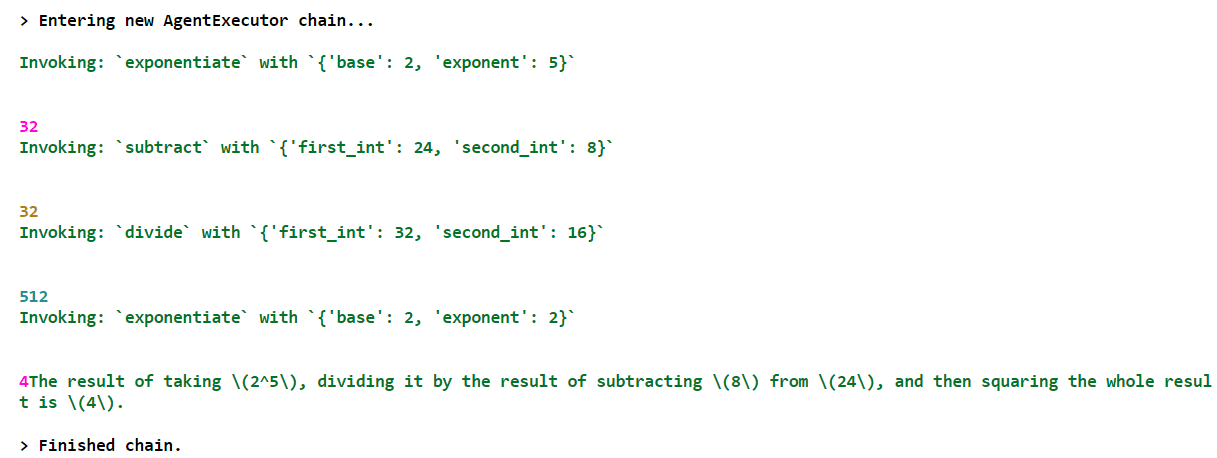

)Output:

From the above output, you can see that the agent correctly interpreted the user input: it first called the exponentiate tool, followed by the subtract and divide tools, and then the exponentiate tool again.
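You can verify the arithmetic behind that sequence by hand. The sketch below uses plain Python functions that do what the tool docstrings describe, without the @tool decorator, so it runs without an API key.

```python
def exponentiate(base, exponent):
    return base ** exponent

def subtract(first_int, second_int):
    return first_int - second_int

def divide(first_int, second_int):
    return first_int / second_int

# Same order of operations as the tool calls reported by the agent.
step1 = exponentiate(2, 5)      # 2 to the power of 5 -> 32
step2 = subtract(24, 8)         # subtract 8 from 24 -> 16
step3 = divide(step1, step2)    # 32 / 16 -> 2.0
step4 = exponentiate(step3, 2)  # square the result -> 4.0

print(step1, step2, step3, step4)
```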

You can print the actual output using the output key from the response.

response["output"]

Output:

'The result of taking \\(2^5\\), dividing it by the result of subtracting \\(8\\) from \\(24\\), and then squaring the whole result is \\(4\\).'

By default, LangChain agents do not have memory. For instance, if you execute the following script, you will see that the agent calls the three tools in sequence without considering how the operations related to one another in the previous request.

response = agent_executor.invoke({

"input": "Now perform the same steps starting with integers 3 and 6"

}

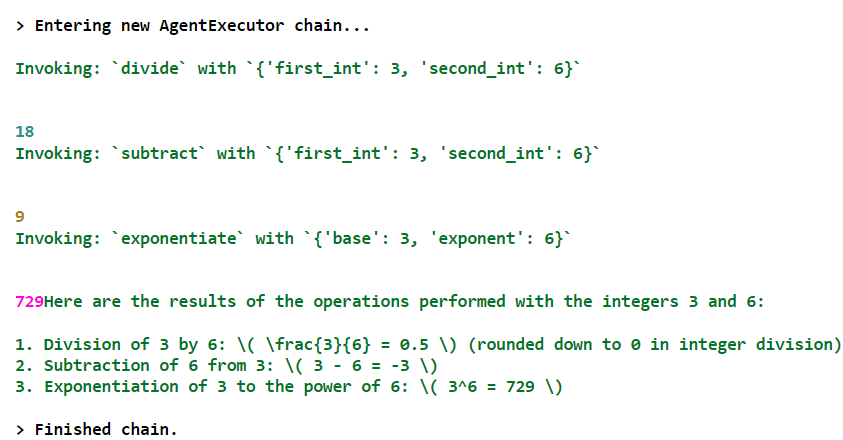

)Output:

Now that we’ve shown you how it works, let’s see how to add memory to our LangChain agents.

Adding Memory to LangChain Agents

These are the steps needed to add memory to a LangChain agent:

- Create an object of the ChatMessageHistory class.
- Create an object of the RunnableWithMessageHistory class and pass to it the agent executor, a function that returns the ChatMessageHistory object for a given session ID, and the values for the input_messages_key and history_messages_key attributes.
- Call the invoke() method on the RunnableWithMessageHistory object to interact with the agent.

Notice that the values of input_messages_key and history_messages_key, input and chat_history, match the placeholder names we defined in our chat prompt template.

The following script invokes our custom agent with memory.

agent = create_tool_calling_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

memory = ChatMessageHistory()

agent_with_chat_history = RunnableWithMessageHistory(

agent_executor,

lambda session_id: memory,

input_messages_key="input",

history_messages_key="chat_history",

)

agent_with_chat_history.invoke(

{"input": "Take 2 to the power of 5 and divide the result obtained by the result of subtracting 8 from 24, then square the whole result"},

config={"configurable": {"session_id": "<foo>"}}

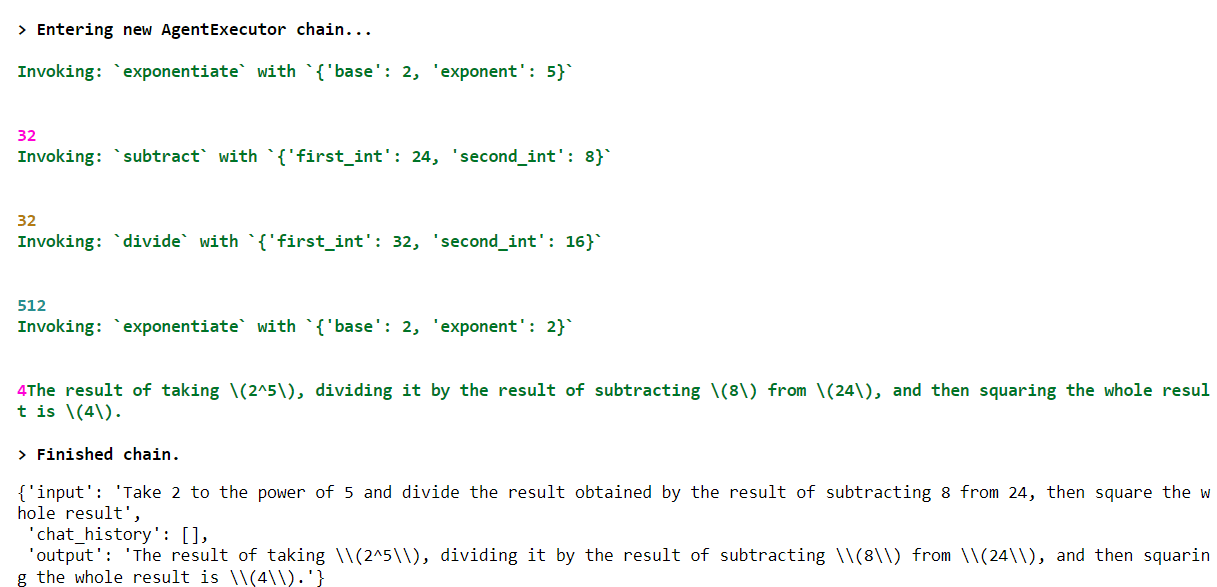

)Output:

From the above output, you can see that the agent now contains the chat_history list. Since we do not have any previous conversations at the moment, the list is empty.

Let’s ask a follow-up question.

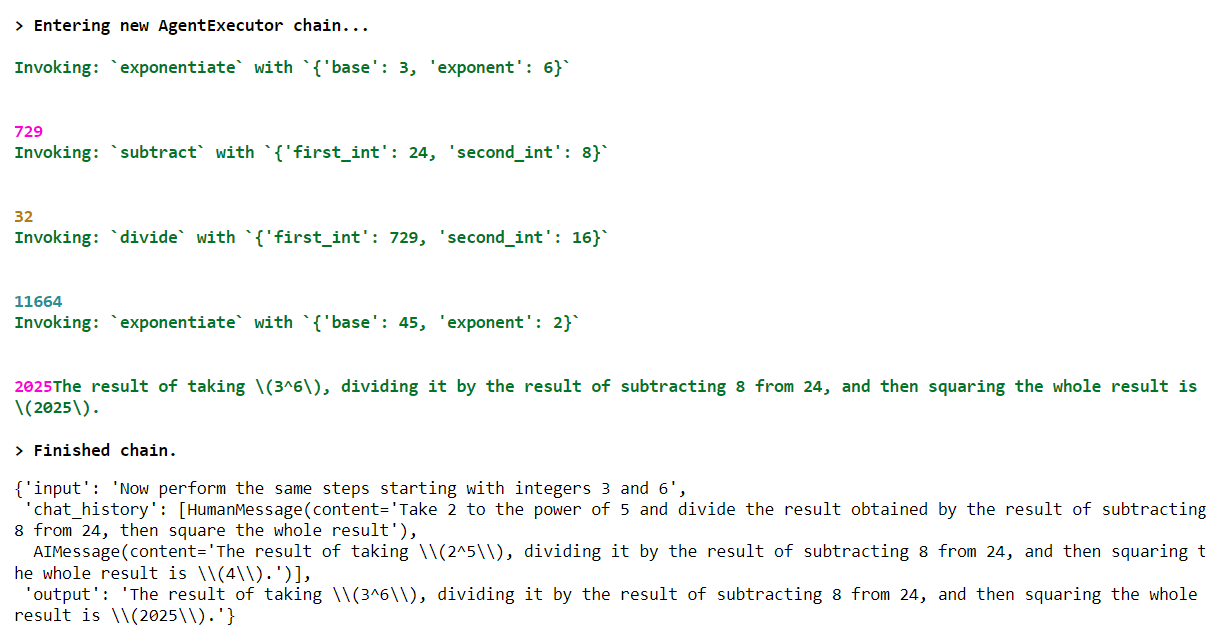

agent_with_chat_history.invoke(

{"input": "Now perform the same steps starting with integers 3 and 6"},

config={"configurable": {"session_id": "<foo>"}},

)Output:

The output shows that the agent successfully generated a response based on the previous conversation in the chat_history list.
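Note that the lambda in the script above returns the same history object for every session ID, so all sessions share one conversation. If you want separate conversations per user, the history function needs to look up the session ID. The plain-Python sketch below illustrates that idea without LangChain; `get_session_history`, `fake_agent`, and `invoke_with_history` are hypothetical stand-ins, and the histories are simple lists rather than ChatMessageHistory objects.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)

# One message list per session ID, created on first use.
_histories: Dict[str, List[Message]] = defaultdict(list)

def get_session_history(session_id: str) -> List[Message]:
    """Hypothetical factory: return the history that belongs to one session."""
    return _histories[session_id]

def fake_agent(user_input: str, history: List[Message]) -> str:
    """Stand-in for the agent executor; it only reports how much context it saw."""
    return f"answered '{user_input}' with {len(history)} earlier messages"

def invoke_with_history(user_input: str, session_id: str) -> str:
    history = get_session_history(session_id)
    answer = fake_agent(user_input, history)
    # Record both sides of the exchange so the next call can see them.
    history.append(("human", user_input))
    history.append(("ai", answer))
    return answer

first = invoke_with_history("Take 2 to the power of 5", "alice")
second = invoke_with_history("Now do the same with 3", "alice")
other = invoke_with_history("Now do the same with 3", "bob")
print(first, second, other, sep="\n")
```

The second call in the "alice" session sees the first exchange, while the "bob" session starts with an empty history.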

Using Built-in Agents

In most cases, you will not need to create custom agents to interact with third-party tools. LangChain ships with a large number of prebuilt agents.

Let’s see a couple of them in action.

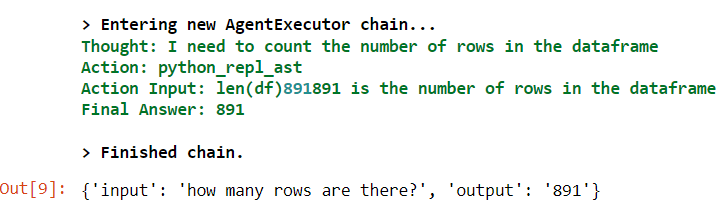

CSV Agent

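The create_csv_agent function allows you to create an agent that can retrieve information from CSV files. To create this agent, you need to pass the LLM and the path to your CSV file to create_csv_agent. Because the agent executes Python code generated by the LLM, recent releases of langchain-experimental refuse to create it unless you opt in by also passing allow_dangerous_code=True. Here is an example.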

csv_path = r"D:\Datasets\titanic.csv"

llm = OpenAI(
    temperature=0,
    api_key=openai_api_key
)

agent = create_csv_agent(
    llm,
    csv_path,
    verbose=True
)

agent.invoke("how many rows are there?")

Output:

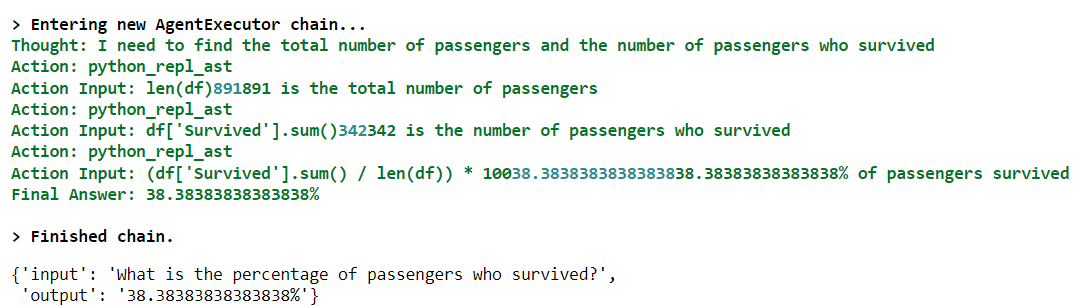
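Since an LLM-generated answer can be wrong, it can be worth cross-checking simple questions such as the row count with a few lines of deterministic code. The sketch below uses only the standard library and a small made-up sample in place of the real file. The column names and rows are assumptions for illustration, not the actual Titanic data.

```python
import csv
import io

# Hypothetical sample shaped like a Titanic-style file; not the real dataset.
sample_csv = """PassengerId,Survived,Name
1,0,Passenger A
2,1,Passenger B
3,1,Passenger C
4,0,Passenger D
"""

def count_rows(csv_text: str) -> int:
    """Count data rows, excluding the header line."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(1 for _ in reader)

row_count = count_rows(sample_csv)
print(row_count)
```

For a file on disk, you would open it with open(csv_path, newline="") and pass the file object to csv.DictReader instead of the in-memory string.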

Pandas DataFrame Agent

Similarly, you can interact with Pandas DataFrames using the create_pandas_dataframe_agent function. You need to pass the LLM and the Pandas DataFrame to it.

df = pd.read_csv(csv_path)

agent = create_pandas_dataframe_agent(
    llm,
    df,
    verbose=True,
)

agent.invoke("What is the percentage of passengers who survived?")

Output:

Conclusion

LangChain agents are convenient for interacting with third-party applications using natural language. In this article, you saw how to create custom LangChain agents and add memory to them. You also saw how to use built-in agents to interact with CSV files and Pandas DataFrames. I encourage you to try other agents to build chatbots that can interact with third-party applications or even your own database.
